Migrate from Java Business Host to PEX

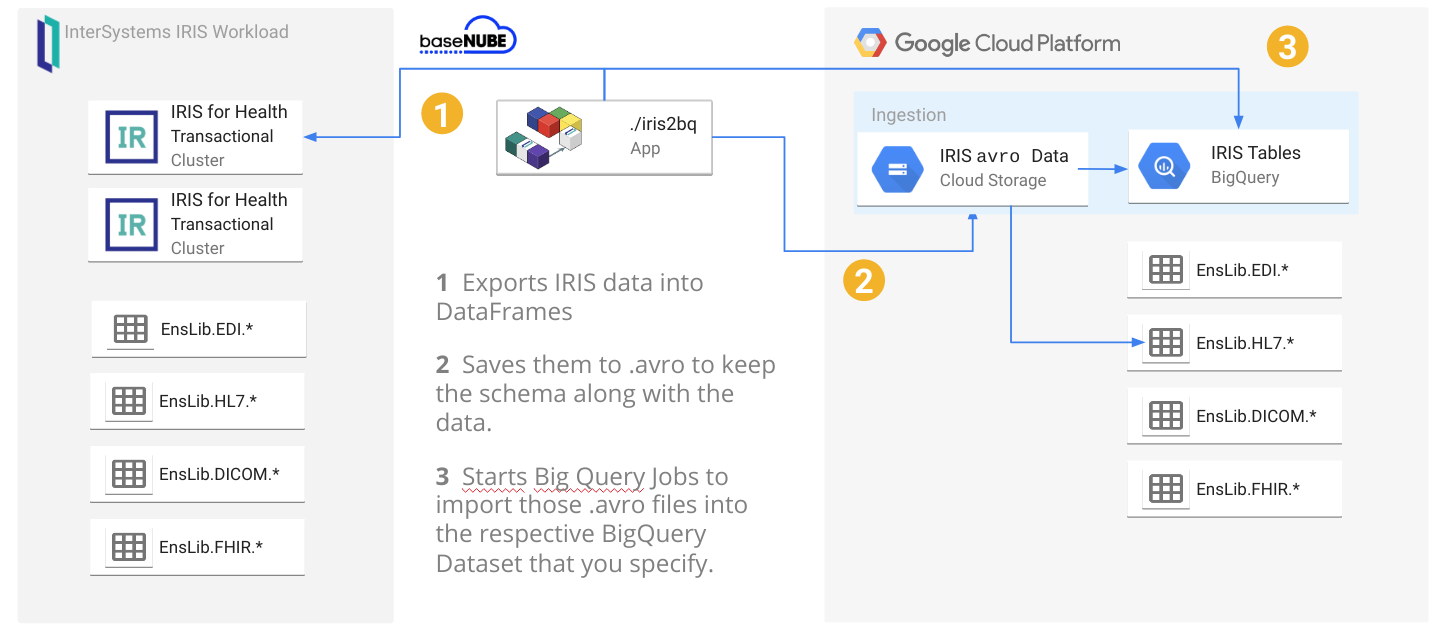

With the release PEX in InterSystems IRIS 2020.1 and InterSystems IRIS for Health 2020.1, customers have a better way to build Java into productions than the Java Business Host. PEX provides a complete set of APIs for building interoperability components and is available in both Java and .NET. The Java Business Host has been deprecated and will be retired in a future release.

Advantages of PEX