As applications grow, every database eventually hits scaling limits. Whether it's storage capacity, concurrent users, query throughput, or I/O bandwidth, single-server architectures have inherent constraints. This guide explains fundamental approaches to database scalability and shows how InterSystems IRIS implements these patterns to support enterprise-scale workloads.

We'll explore two complementary scaling strategies: horizontal scaling for user volume (distributing computational load) and sharding for data volume (partitioning datasets). Understanding the general principles behind these approaches will help you make informed decisions about when and how to scale your IRIS applications.

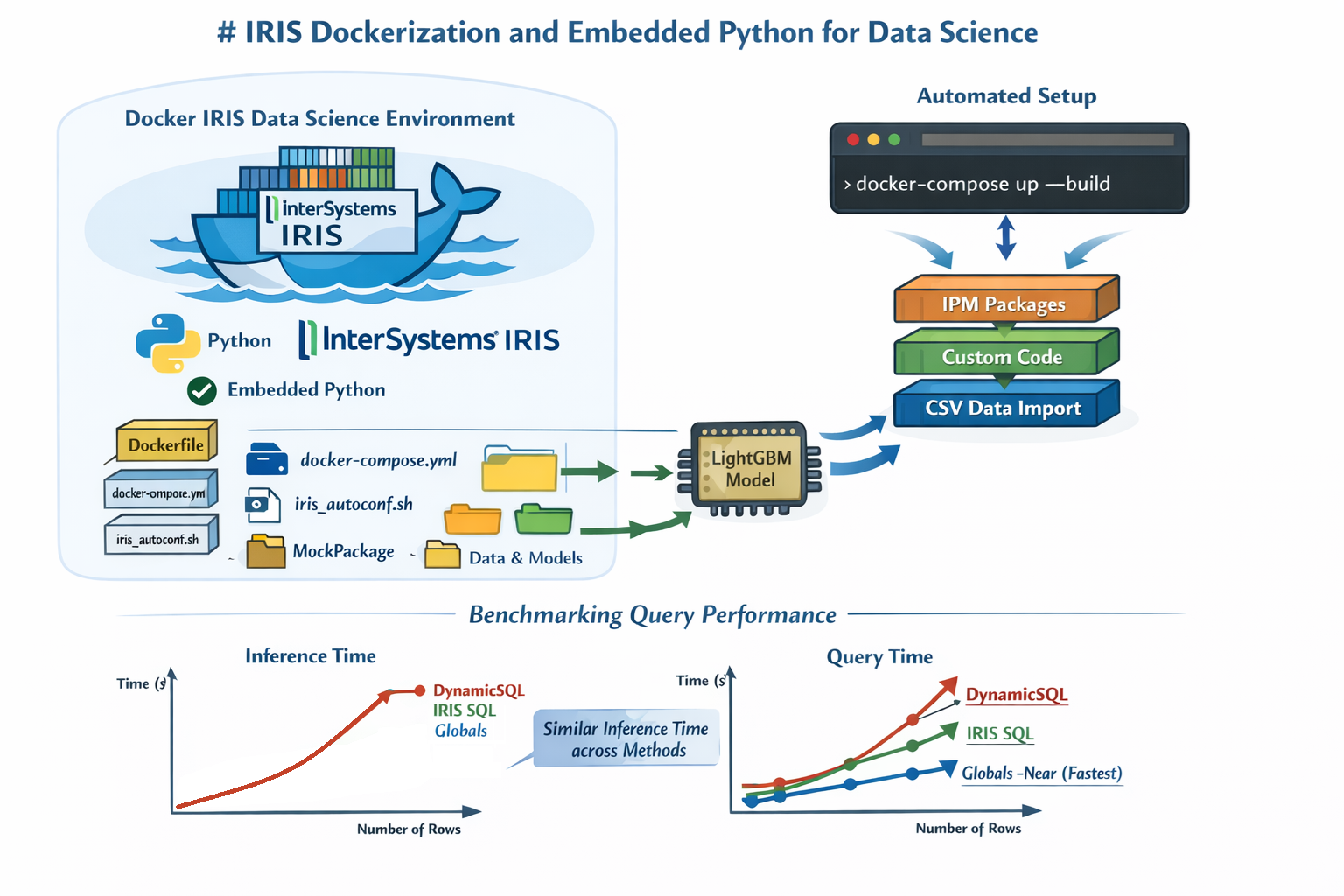

The examples in this guide use InterSystems IRIS in Docker containers.

.png)