Hi colleagues!

Often when we collaborate to someone's repo in GitHub we do the following cycle:

Fork-Clone-Change-Commit-Push-Pull-Request-Merge to the original repo.

This is all great and works fine!

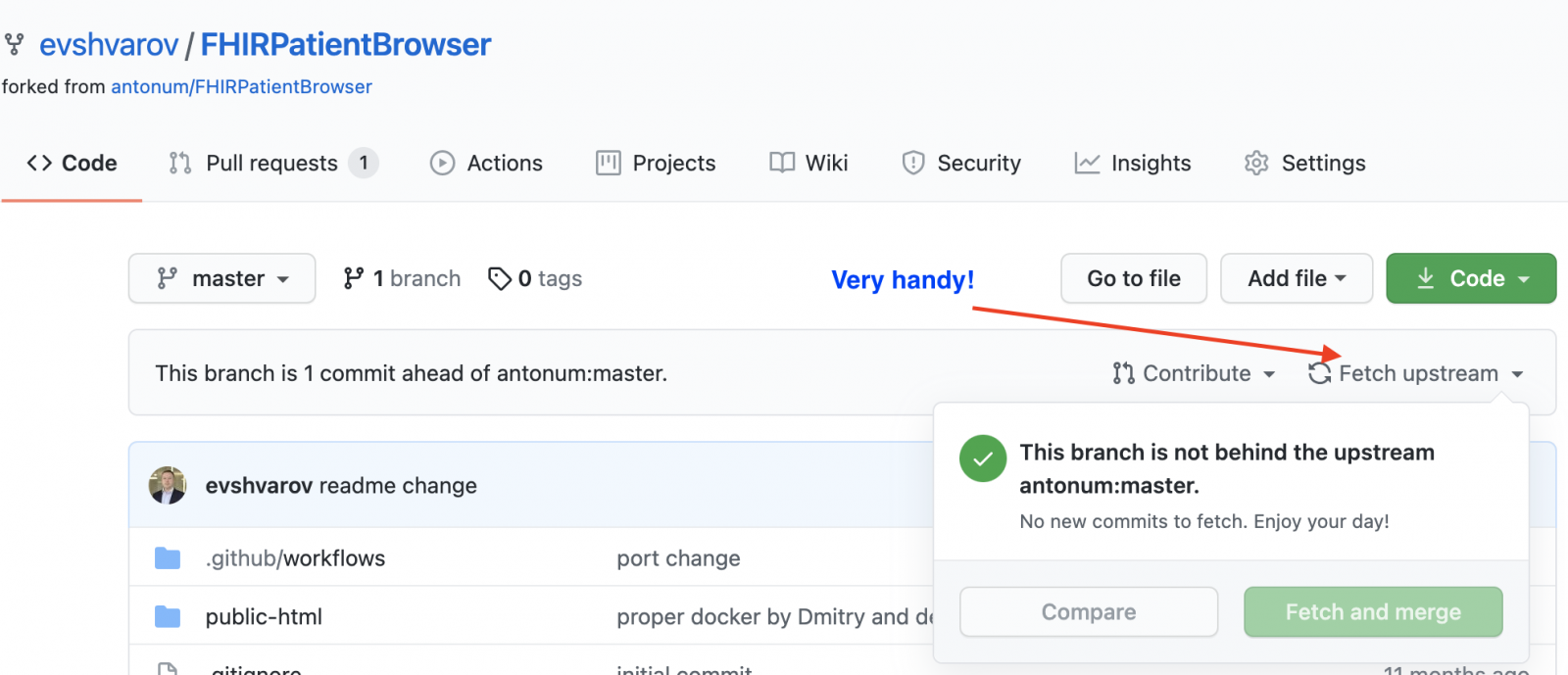

And if we want to make a second collaboration right after the merge you need to perform "Fetch upstream" to your forked repo first to "ingest" your own Pull-request in the original repo.

Geeky git-professionals do it with ease but this was always a headache for me so I usually simply deleted the fork and created a new one.

And today I figured that Github added a new UI feature that I can easily fetch-upstream for my fork with the original one and make it up to date and capable for pull-requests.

Here is where the button is:

This is a relief! )

Wanted to share this relief and productivity tip with you!

Bring more collaborations to Github repos!

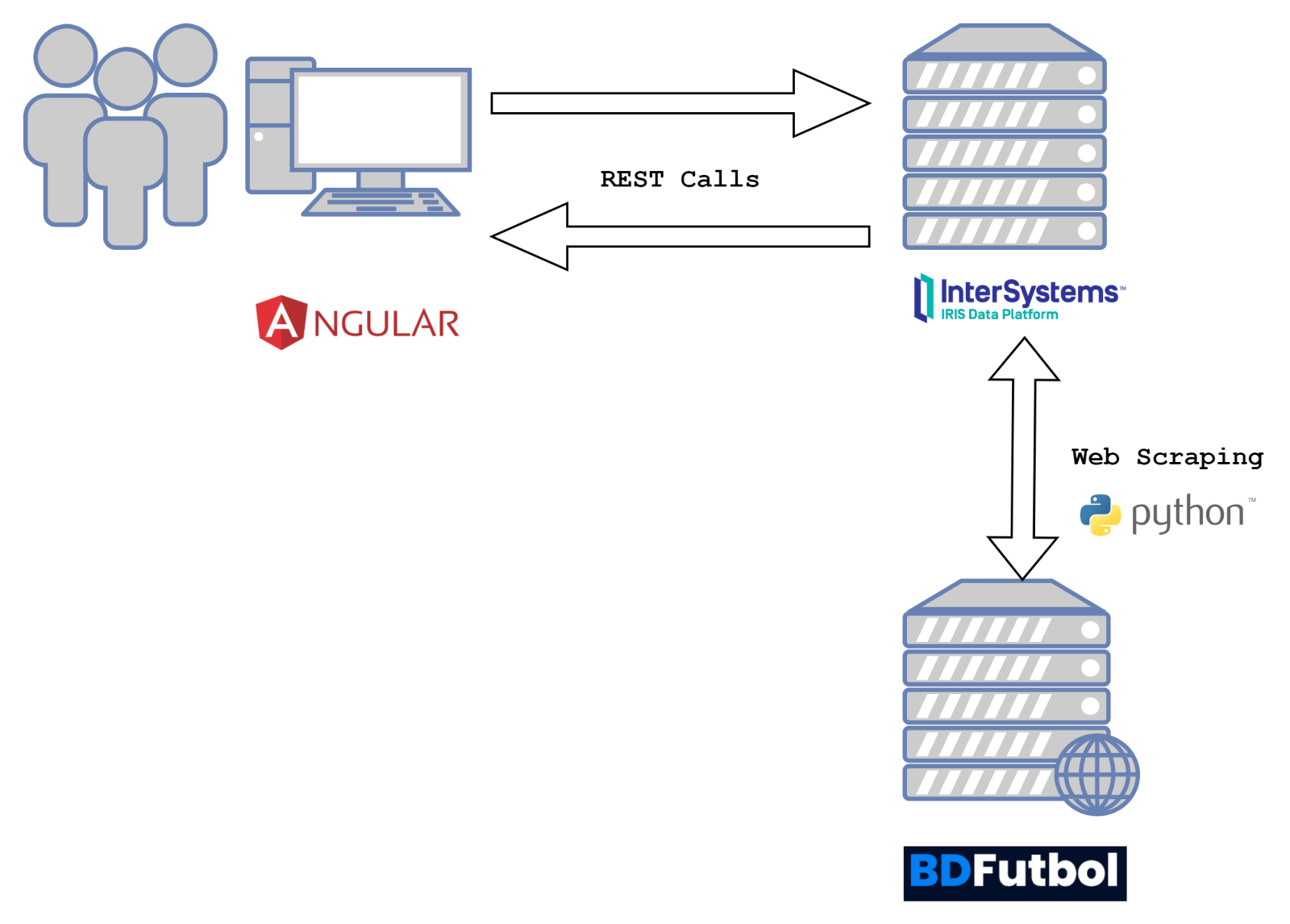

And speaking of PR - I just made a PR with docker to Google Cloud Run deployment for the FHIRaaS demo made by @Anton Umnikov for the current FHIR Contest! Looking for more of your contributions!

) .

) .

.png)