We all know that having a set of proper test data before deploying an application to production is crucial for ensuring its reliability and performance. It allows to simulate real-world scenarios and identify potential issues or bugs before they impact end-users. Moreover, testing with representative data sets allows to optimize performance, identify bottlenecks, and fine-tune algorithms or processes as needed. Ultimately, having a comprehensive set of test data helps to deliver a higher quality product, reducing the likelihood of post-production issues and enhancing the overall user experience.

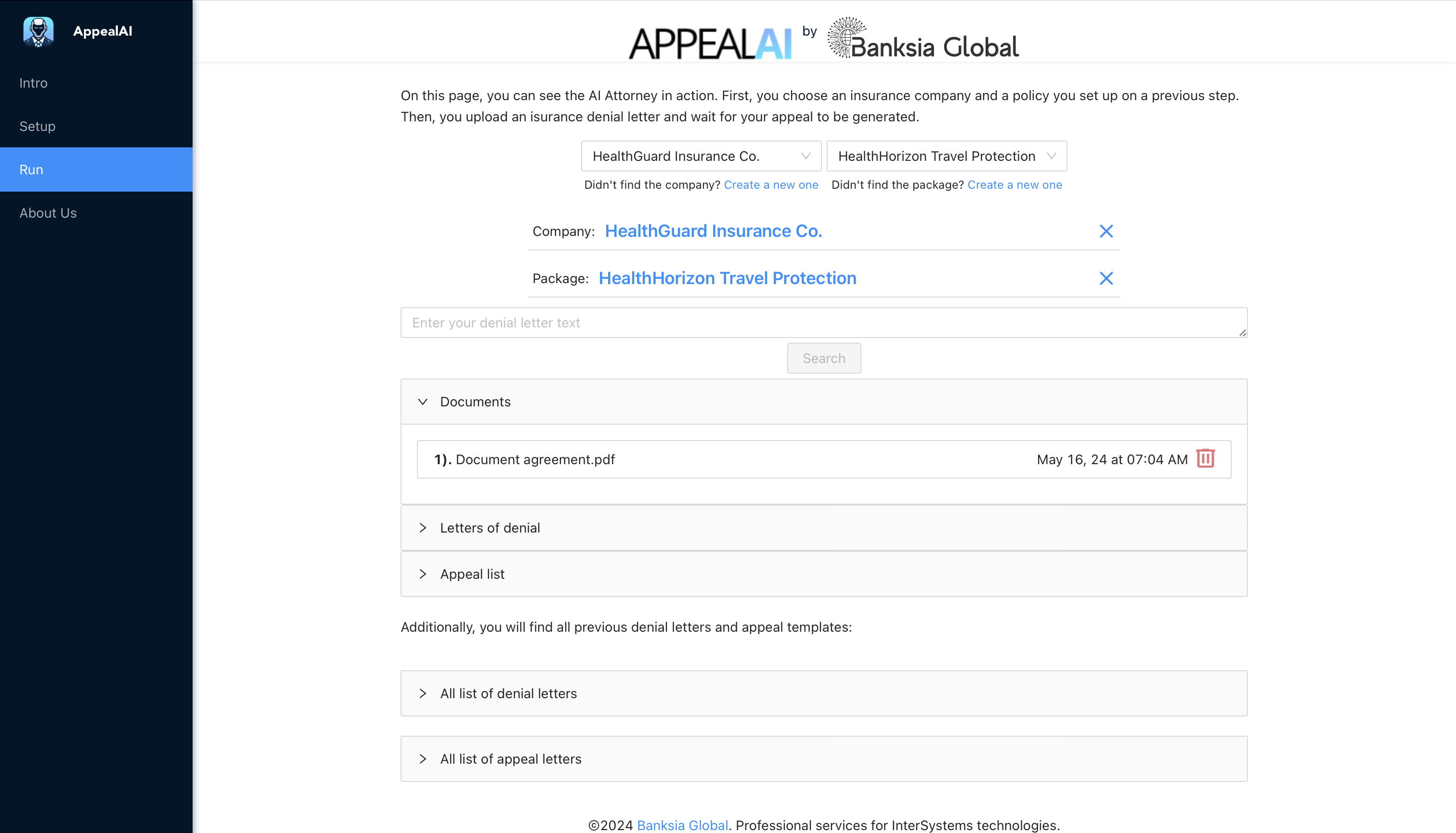

In this article, let's look at how one can use generative AI, namely Gemini by Google, to generate (hopefully) meaningful data for the properties of multiple objects. To do this, I will use the RESTful service to generate data in a JSON format and then use the received data to create objects.

.png)