Hi Devs,

I don't work for InterSystems, but I have found this training course very helpful and wanted to make sure others know about it.

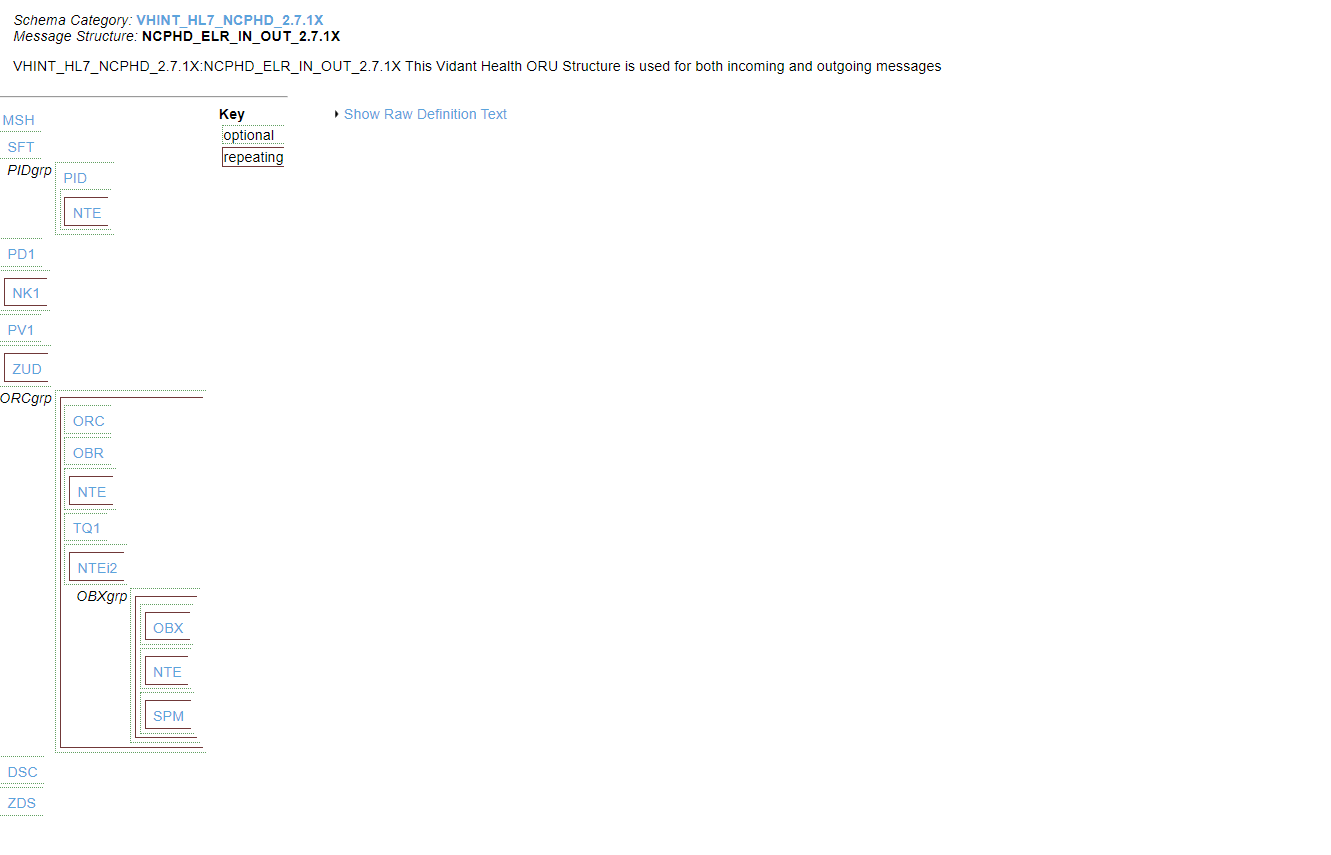

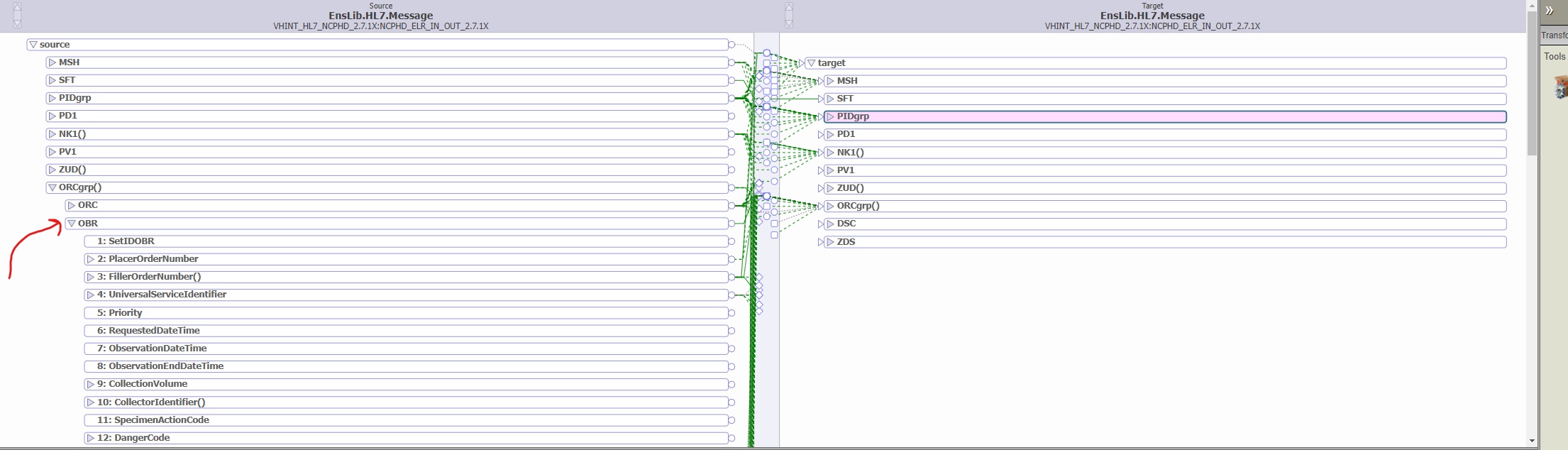

Advanced Data Transformations

Add a for each loop.

Create and use utility functions.

Create and use lookup tables.

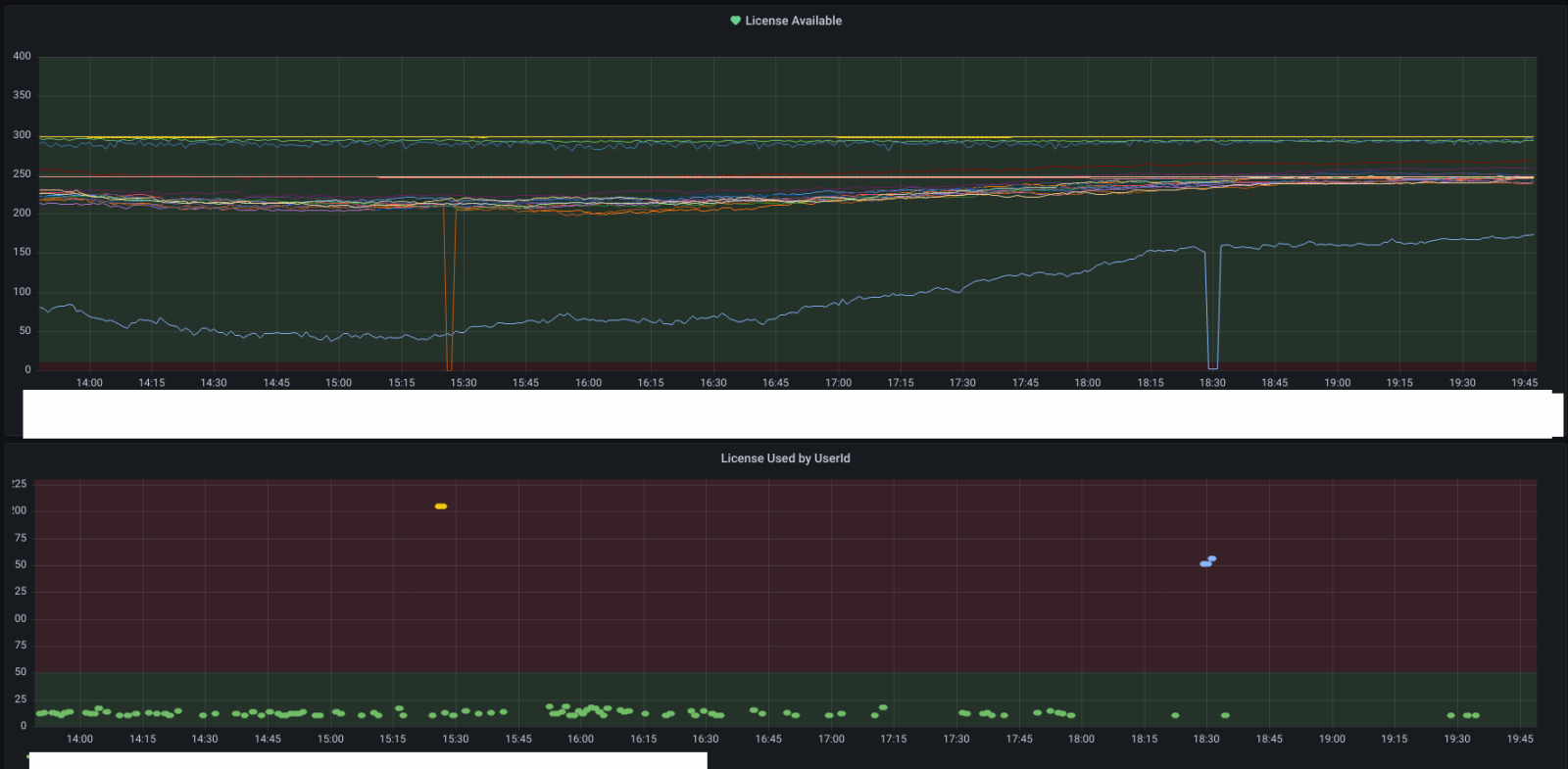

Use counting.

Create and implement a subtransformation.

Add a code action.

Add a group of actions to organize relevant actions.
