This time I want to talk about something that isn't specific to InterSystems IRIS, but that I think is important if you want to work with Docker and the machine you use as a server at work is a PC or laptop running Windows 10 Pro or Enterprise.

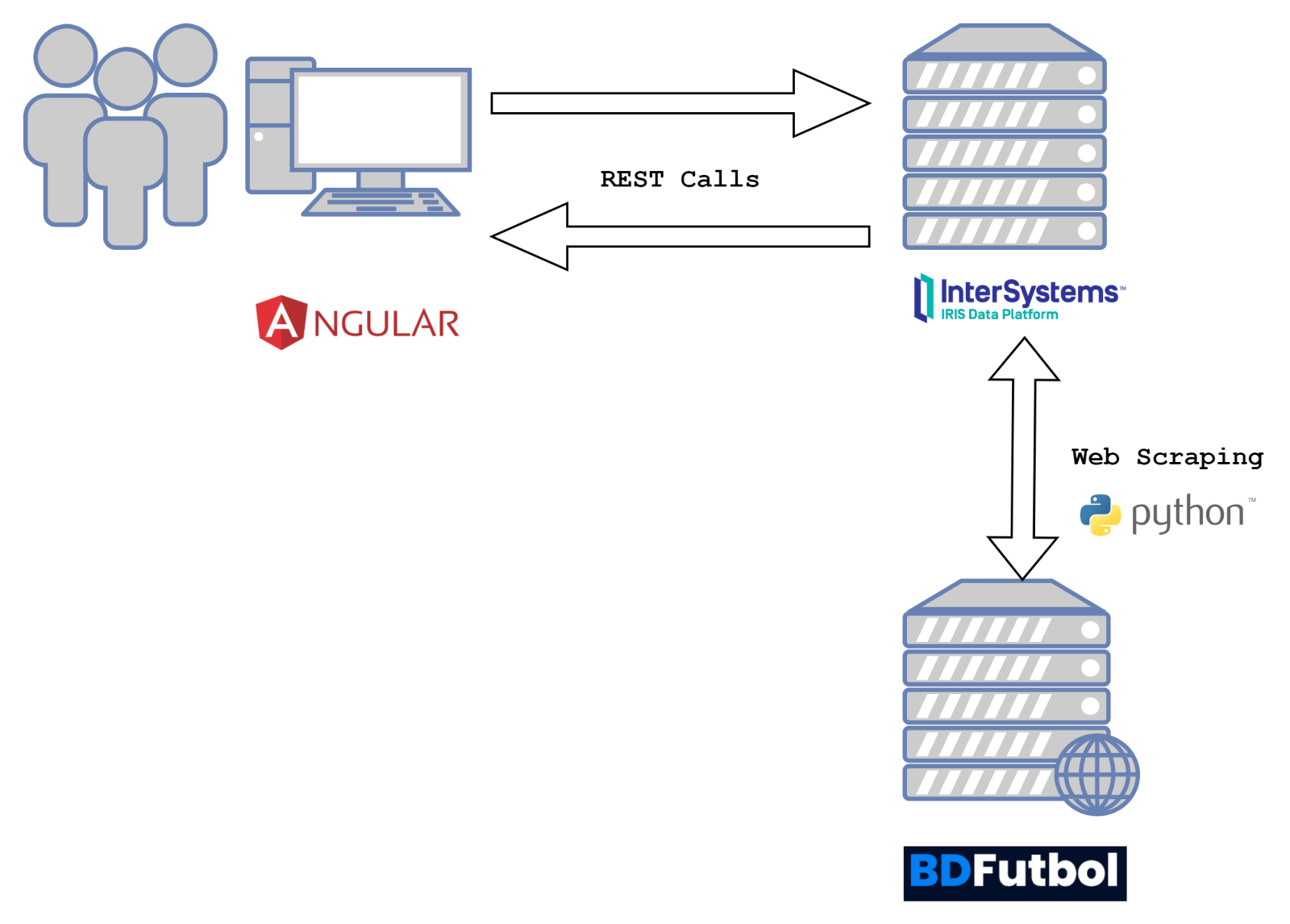

As you probably know, container technology comes primarily from the Linux world, and today it is on Linux hosts that it shows its full potential. Those of us who use Windows regularly have seen that both Microsoft and Docker have made significant efforts in recent years to let us run containers based on Linux images on our Windows systems really easily. However, this setup is not supported for production systems and, this is the big problem, it is not reliable if we want to keep persistent data outside the containers, on the host system, mostly because of the big differences between the Windows and Linux file systems. In the end, Docker for Windows itself runs the containers inside a small Linux virtual machine (MobyLinuxVM). It does this transparently for the Windows user, and it works perfectly well as long as, as I said, you don't need your databases to outlive the container.

Well, let's get to the point. Often, to avoid issues and keep things simple, we need a full Linux system and, if our server runs Windows, the only way to get one is through a virtual machine. At least until WSL2 is released in Windows, but that will be another story, and it will surely take some time to become robust enough.

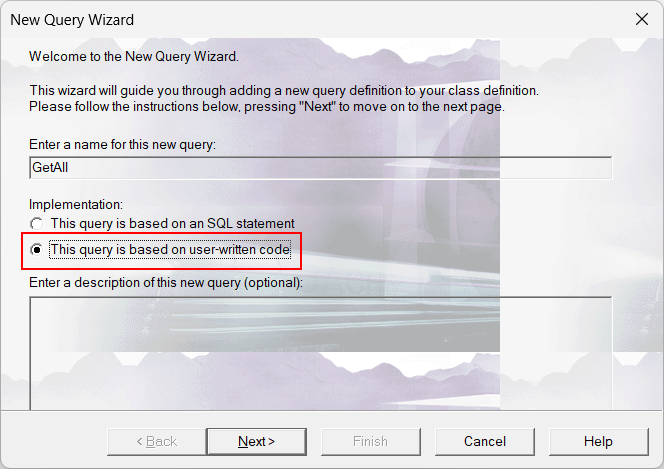

In this article, I'll show you, step by step, how to set up an environment where you can work, if you need to, with Docker containers on an Ubuntu system running on your Windows server. Let's go!