Some time ago, I put together a small example to quickly deploy InterSystems IRIS instances connected via ECP using Docker.

Time passed and, like everything, it needed a bit of a refresh.

High availability (HA) refers to the goal of keeping a system or application operational and available to users a very high percentage of the time, minimizing both planned and unplanned downtime.

Some time ago, I put together a small example to quickly deploy InterSystems IRIS instances connected via ECP using Docker.

Time passed and, like everything, it needed a bit of a refresh.

.png)

If you're running IRIS in a mirrored IrisCluster for HA in Kubernetes, the question of providing a Mirror VIP (Virtual IP) becomes relevant. Virtual IP offers a way for downstream systems to interact with IRIS using one IP address. Even when a failover happens, downstream systems can reconnect to the same IP address and continue working.

The lead in above was stolen (gaffled, jacked, pilfered) from techniques shared to the community for vips across public clouds with IRIS by @Eduard Lebedyuk ...

Articles: ☁ vip-aws | vip-gcp | vip-azure

This version strives to solve the same challenges for IRIS on Kubernetes when being deployed via MAAS, on prem, and possibly yet to be realized using cloud mechanics with Manged Kubernetes Services.

.png)

You may have noticed that to configure a mirror for InterSystems IRIS for Health™ and HealthShare® Health Connect there is a special requirement. I wanted to go through it step by step in this article.

This assumes you have already configured the second failover member and confirmed a successful failover member status in the mirror monitor:

Step 1:Enable HS_Services user (on backup and primary) .png)

Step 2: Switch to Namespace HSSYS and go to Interoperability > Configure > Credentials. Enter the Password for your predefined HS_Services user (on backup and primary)

.png)

If you are in the business of building a robust High Availability, Disaster Recovery or Stamping multiple environments rapidly and in a consistent manner Karmada may just be the engine powering your Cloning Facility..png)

One of the recommendations when deploying InterSystems Technologies for production is to set up High Availability. The recommended API Manager for these InterSystems Technologies is the InterSystems API Manager (IAM). IAM (essentially Kong Gateway) has multiple deployment topologies.

If you are looking for high availability you could use:

a) Kong Traditional Mode: Multiple Node Clusters

b) Hybrid Mode

c) DB-less Mode

Before we break them down let's first understand the out of the box deployment that is provided by InterSystems: Installing IAM Version 3.10.

Kong Traditional Mode

Are you familiar with SQL databases, but not familiar with IRIS? Then read on...

About a year ago I joined InterSystems, and that is how IRIS got on my radar. I've been using databases for over 40 years—much of that time for database vendors—and assumed IRIS would be largely the same as the other databases I knew. However I was surprised to find that IRIS is in several ways quite unlike other databases, often much better. With this, my first article in the Dev Community, I'll give a high-level overview of IRIS for people that are already familiar with the other databases such as Oracle, SQL Server, Snowflake, PostgeSQL, etc. I hope I can make things clearer and simpler for you and save you some time getting started.

Regardless of whether an instance of IRIS is in the cloud or not, high availability and disaster recovery are always important considerations. While IKO already allows for the use of NodeSelectors to enforce the scheduling of IRISCluster nodes across multiple zones, multi-region k8s clusters are generally not recommended or even supported in the major CSP's managed Kubernetes solutions. However, when discussing HA and DR for IRIS, we may want to have an async member in a completely separate region, or even in a different cloud provider altogether. With additional options added to IKO in the

As an IT and cloud team manager with 18 years of experience with InterSystems technologies, I recently led our team in the transformation of our traditional on-premises ERP system to a cloud-based solution. We embarked on deploying InterSystems IRIS within a Kubernetes environment on AWS EKS, aiming to achieve a scalable, performant, and secure system. Central to this endeavor was the utilization of the AWS Application Load Balancer (ALB) as our ingress controller.

All pods are assigned a Quality of Service (QoS). These are 3 levels of priority pods are assigned within a node.

The levels are as following:

1) Guaranteed: High Priority

2) Burstable: Medium Priority

3) BestEffort: Low Priority

It is a way of telling the kubelet what your priorities are on a certain node if resources need to be reclaimed. This great GIF below by Anvesh Muppeda explains it.

Hi there,I'm discovering IRIS and I need to POC the solution, with a constraint: containerization.I'm used to deploy my apps in a Swarm cluster, and all my bind volumes are written on a GlusterFS volume.The problem here, when I start my stack, the first log is:[WARN] ISC_DATA_DIRECTORY is located on a mount of type 'fuse.glusterfs' which is not supported, consider a named volume for '/iris_conf'And of course the deployment fails.Any idea? How can I provide my data on all my cluster nodes? I read this article: https://community.intersystems.com/post/deploying-sharded-cluster-docke…

ISCAgent is automatically installed with Cache, runs as a service and can be configured tostart with the system. This is fine – but the complication comes when this is on VCS clusters withMirroring on. When installing a Single Instance of Cache in a Cluster, point number 2. Says “Createa link from /usr/local/etc/cachesys to the shared disk. This forces the Caché registry and allsupporting files to be stored on the shared disk resource you have configured as part of theservice group.”So on the second passive node – this statement makes ISCAgent startup with the system, nor manuallystarting it

Has anyone noticed that when IRIS is forced down that the EnsLib.JavaGateway.Services do not properly shut down and release the ports? While we can write a shell script to kill the processes at the OS level, I was wondering if anyone experienced this issue.

We are working on our Mirroring setup/failover and had the team testing forcing the Primary down to make the Backup to become the Primary Server. When this happened and we failed back, IRIS could not restart the JavaGateway.Services because the ports were still in use.

Hi All! You may be interested to hear we've just released a new podcast episode all about mirroring, featuring conversations with @Chad Severtson from InterSystems and Greg King from J2. Take a listen and chime in on the conversation here if you have thoughts!

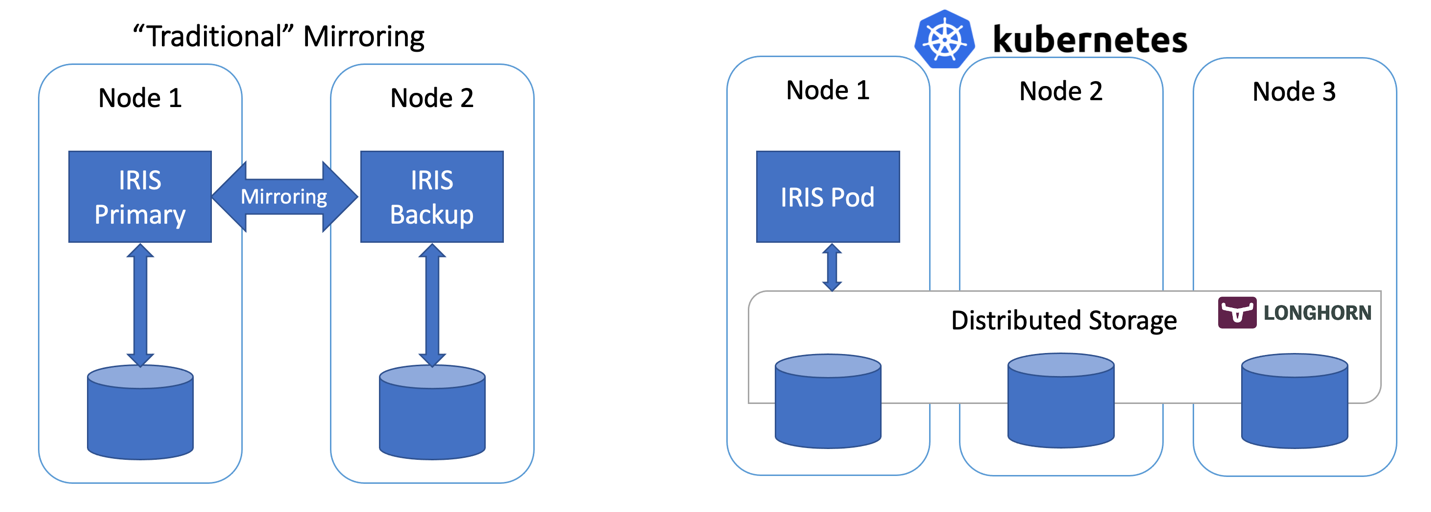

In this article, we’ll build a highly available IRIS configuration using Kubernetes Deployments with distributed persistent storage instead of the “traditional” IRIS mirror pair. This deployment would be able to tolerate infrastructure-related failures, such as node, storage and Availability Zone failures. The described approach greatly reduces the complexity of the deployment at the expense of slightly extended RTO.

The Amazon Web Services (AWS) Cloud provides a broad set of infrastructure services, such as compute resources, storage options, and networking that are delivered as a utility: on-demand, available in seconds, with pay-as-you-go pricing. New services can be provisioned quickly, without upfront capital expense. This allows enterprises, start-ups, small and medium-sized businesses, and customers in the public sector to access the building blocks they need to respond quickly to changing business requirements.

Updated: 10-Jan, 2023

A common need for our customers is to configure both HealthShare HealthConnect and IRIS in high availability mode.

It's common for other integration engines on the market to be advertised as having "high availability" configurations, but that's not really true. In general, these solutions work with external databases and therefore, if these are not configured in high availability, when a database crash occurs or the connection to it is lost, the entire integration tool it becomes unusable.

I am looking into creating a ZSTOP as you probably have seen from my previous posts, is there a way to capture the type of shutdown that occurred? So say if there was an unknown hardware failure (forced), vs a user shutdown? Mainly looking for user or system shutdown when we force another destination to become the primary in the mirror. So if a user shutdown the production to do.,... Task A, Task B etc..

Thanks

Scott

I am new to setting up a mirror environment....

We will have a Arbiter, Two Failover members (A,B), and a Async (DR) member (C). I have the two failover members in sync and are configured for Arbiter Control.

My question is about the Async member, when I initially set it up I pointed it to the mirror on the primary node A.

Is that correct?

We are trying to script a High Availability Shutdown/Start script in case we need to fail over to one of our other servers we can be back up within mins. Is there a way to configure the startup procedure to Automatically Stop/Start the JDBC server when shutting down or starting up cache? is there an auto setting we can change?

Thanks

Scott Roth

The Ohio State University Wexner Medical Center

1. A deployment may consist of two high availability instances and two disaster recovery instances in a different data center.

The corresponding UAT environment could replicate this giving a total of 8 instances. How do you confirm CPF and Scheduled task alignment across ALL instances.

In this post, I am going to detail how to set up a mirror using SSL, including generating the certificates and keys via the Public Key Infrastructure built in to InterSystems IRIS Data Platform. I did a similar post in the past for Caché, so feel free to check that out here if you are not running InterSystems IRIS. Much like the original, the goal of this is to take you from new installations to a working mirror with SSL, including a primary, backup, and DR async member, along with a mirrored database. I will not go into security recommendations or restricting access to the files. This is meant to just simply get a mirror up and running. Example screenshots are taken on a 2018.1.1 version of IRIS, so yours may look slightly different.

Hi Community,

We're pleased to invite you to the upcoming webinar in Spanish called "Deployments in Kubernetes with High Availability".

Date & Time: October 20, 4:00 PM CEST

Speaker: @Alberto Fuentes, Sales Engineer, InterSystems Iberia

For those that, at some point, need to test what means that of ECP for horizontal escalability (computing power and/or users and processes concurrency), but they're lazy o have no much time to build the environment, configure the server nodes, etc..., I've just published in Open Exchange the app/sample OPNEx-ECP Deployment .

I have described my efforts to optimize IRIS Mirror deployment in AWS ElasticContainer Service (ECS) in my prior article.

IRIS Mirror in the cloud (AWS) | InterSystems Developer Community | AWS

I have come to the opinion that IRIS Mirror is not as reliable as needed when deployed in ECS. The root of the problem is the fact that ECS randomly assigns one of the available IP addresses to each EC2 host or Fargate task it starts.

These get stored in iris.cpf file in MapMirrors section as shown here:

[MapMirrors.IRISMIRROR]

I have been working on redesigning a Health Connect production which runs on a mirrored instance of Healthshare 2019. We were told to take advantage of containers. We got to work on IRIS 2020.1 and split the database part from the Interoperability part. We had the IRIS mirror running on EC2 instances and used containers to run IRIS interoperability application. Eventually we decided to run the data tier in containers as well. Later we switched from using EC2 instances to Fargate “server-less” compute. A challenge was that each time AWS Elastic Container Service started a new container, it

All source code to the article is available at: https://github.com/antonum/ha-iris-k8s

In the previous article, we discussed how to set up IRIS on k8s cluster with high availability, based on the distributed storage, instead of traditional mirroring. As an example, that article used the Azure AKS cluster. In this one, we'll continue to explore highly available configurations on k8s. This time, based on Amazon EKS (AWS managed Kubernetes service) and would include an option for doing database backup and restore, based on Kubernetes Snapshot.

We are seeing more and more customers being lured with latest infrastructure technologies, particularly Composable Infrastructure. Coming with all sorts of data center consolidations and costs savings.

Question is: are there any concerns for HealthShare/TrakCare being run on these platforms or things to look out for? Anyone out there, already on these platforms?

To be more specific this is HPe Synergy with 480 Compute blades booting as bare metal.

Regards;

Anzelem.

If I set up a Mirror and add a new database to the mirror, do I need to create the new database on every single member of the mirror or will it automatically appear on Mirror members? How about HSCUSTOM database, which already got created when installing IRIS?

In Episode 5 of Data Points, @Bob Binstock joins us to talk about mirroring databases for high availability in InterSystems products! Most of the discussion centers around this process in InterSystems IRIS, with a few notes about the differences when tackling it in HealthShare. Take a listen and let us know your thoughts!