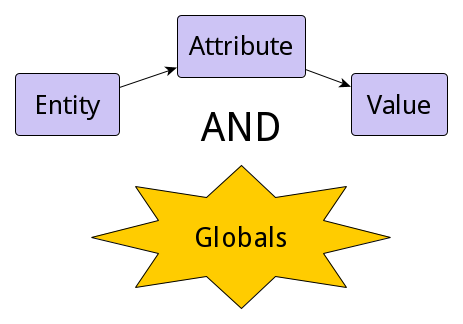

I would like to know if there is a way of having a callback or something similar, on persistent classes that is always called after the execution of the operation (failed or successfull).

%OnOpen is executed prior to the action, and there is no post callback

%OnAfterDelete and %OnAfterSave are executed after a successfull operation