Keywords: Python, JDBC, SQL, IRIS, Jupyter Notebook, Pandas, Numpy, and Machine Learning

1. Purpose

This is another 5-minute simple note on invoking the IRIS JDBC driver via Python 3 within i.e. a Jupyter Notebook, to read from and write data into an IRIS database instance via SQL syntax, for demo purpose.

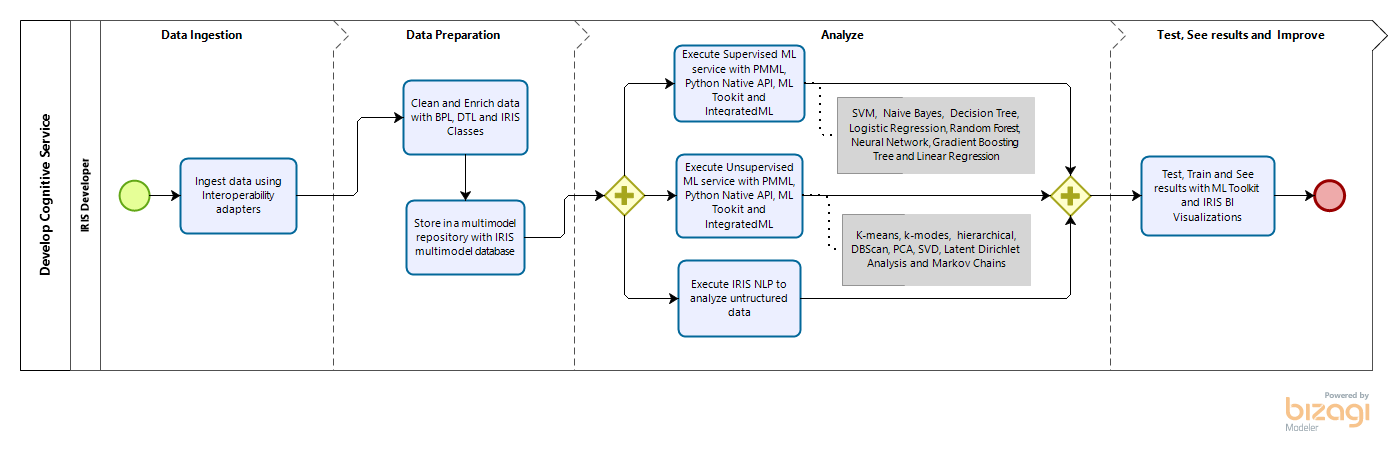

.png)

.png)

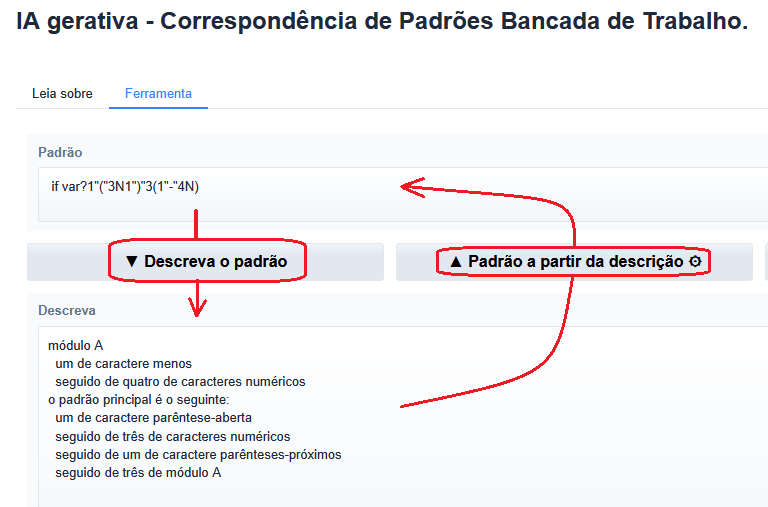

.png)