Dear Community,

I've noticed that some of my friends and colleagues are using the Developer Community app on their Android devices. Could someone please help me with the exe file or guide me on how to get it?

Ready to elevate your Business Intelligence game?

We've got an engaging video, perfect for all skill levels.

Discover how to get started with IRIS BI, optimize cube performance, and drive analytics adoption. Plus, get a sneak peek at what's next!

Hi, Community!

Have you thought about becoming a subject matter expert (SME) for InterSystems Certification?

The benefits are many—but you can hear directly from five members of our SME community as they discuss:

How did participating in exam development boost your own expertise?

Hey Community,

Enjoy the new video on InterSystems Developers YouTube:

These are the strategic plans of my example for the External Languages Contest 2025

Hey Community,

Enjoy the new video on InterSystems Developers YouTube:

Hi Community,

We're super excited and thankful to our 52 InterSystems colleagues who participated in the 📺 Demo Games for InterSystems Sales Engineers 📺 and created 19 amazing videos.

Our colleagues worldwide put their creativity, technical know-how, and demo magic to the test. What started as an internal contest to showcase technical skill and demo excellence became a true stage for imagination with some entries looking less like demos and more like short films, complete with acting, storytelling, and even a cinematic touch.

Also, we'd like to thank all the members of the Community who took the time to watch them and vote for their favourites!

And now it's time to announce the winners!

Hey Community,

We're excited to invite you to the next InterSystems UKI Tech Talk webinar:

👉AI Vector Search Technology in InterSystems IRIS

⏱ Date & Time: Thursday, September 25, 2025 10:30-11:30 UK

Speakers:

👨🏫 @Saurav Gupta, Data Platform Team Leader, InterSystems

👨🏫 @Ruby Howard, Sales Engineer, InterSystems

Hi Team,

I have basic knowledge of Ensemble. I want to write code for the following requirement. Please help me with the questions below.

We have a business process, AA. In its OnRequest method, after performing some logic, I have to call a method, ProcessAAlogic.

In the ProcessAAlogic method, after the initial processing, we have to call a business operation, BB, asynchronously. BB returns a flag, "AACompleted", with the value 1 or 0 in the pResponse object.

Now, based on this AACompleted flag, I have to call other methods which are part of the OnRequest method, i.e.

Hey Community!

We're happy to share the next video in the "Code to Care" series on our InterSystems Developers YouTube:

As the title says, I've noticed that files saved to the stream directory on the disk where the database (.DAT file) resides do not get purged. Is this expected, and do we need to create our own scheduled task to clean this folder up?

I could only find old answers that say so, but I find that a bit odd because these are considered temporary files. Perhaps I am not handling the streams correctly in the code?

set fileToDisk = ##class(%Stream.FileBinary).%New()

set fileToDisk.Filename = fileDestinationPath

set copyStatus = fileToDisk.CopyFromAndSave(response.Data)

Hi everyone,

I'm currently seeking a new position related to InterSystems technologies. My major skill is app integration and data flow development on the InterSystems IRIS platform (Interoperability). I develop high-load, mission-critical software. My coding background on Cache ObjectScript includes hundreds of data flows, thousands of Production business hosts, dozens of APIs, and other solutions on InterSystems IRIS. I worked to integrate such applications as SAP S/4HANA, Oracle Siebel, SAP Commerce Cloud, many custom apps, and all that can be integrated. I have also gained experience in various areas, including SQL, Java, Docker, IRIS administration, Apache Kafka, and Flutter (Dart).

Visual Studio Code releases new updates every month with new features and bug fixes, and the August 2025 release is now available.

This release features smarter AI model selection, enhanced security for sensitive edits and terminal commands, and productivity enhancements such as streamlined chat editing and customizable context with AGENTS.md.

If you’re migrating from InterSystems Studio to VS Code, or want to deepen your knowledge, check out the VS Code training courses from George James Software.

Updates in version 1.

Actually, I want to know the status of a specific production component. I used "Ens.Config.Production" to get the component's status, but it only returns whether the component is enabled or disabled. Does anyone have an idea how to get the status of a specific production component?

My problem was separating HL7 messages by message type, for which I had to create multiple File Operations. With custom code, I am now able to use one File Adapter per interface for multiple message types. I did experiment with pulling MSH-4 out of the raw content to access further dynamic information, but that may create a need for more robust error checking and default lookup actions.
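The field extraction described above can be sketched as follows. This is a minimal illustration in Python rather than ObjectScript, not the custom class from the post. The helper names `msh_field` and `output_filename`, the `.hl7` extension, the `UNKNOWN` fallback, and the sample message are all hypothetical. It assumes a well-formed MSH segment in which MSH-1 is the field separator, so MSH-n is the (n-1)-th element after splitting.

```python
def msh_field(raw_message: str, index: int) -> str:
    """Return MSH-<index> (index >= 2) from a raw HL7 v2 message, or "" if absent."""
    if index < 2:
        raise ValueError("MSH-1 is the field separator itself; use index >= 2")
    # Segments are separated by carriage returns; tolerate newlines as well.
    msh = raw_message.replace("\r\n", "\r").replace("\n", "\r").split("\r", 1)[0]
    if not msh.startswith("MSH") or len(msh) < 4:
        raise ValueError("message does not start with an MSH segment")
    fields = msh.split(msh[3])  # msh[3] is the field separator, usually "|"
    # fields[0] is "MSH" and MSH-1 is the separator, so MSH-n sits at fields[n - 1].
    return fields[index - 1] if index - 1 < len(fields) else ""


def output_filename(raw_message: str, default: str = "UNKNOWN") -> str:
    """Build a hypothetical per-message-type file name such as ADT_A01.hl7."""
    encoding_chars = msh_field(raw_message, 2)
    component_sep = encoding_chars[0] if encoding_chars else "^"
    # MSH-9 holds the message type, e.g. ADT^A01; keep message code and trigger event.
    parts = [p for p in msh_field(raw_message, 9).split(component_sep)[:2] if p]
    return ("_".join(parts) or default) + ".hl7"


# Hypothetical sample message, for illustration only.
SAMPLE = (
    "MSH|^~\\&|SENDAPP|SENDFAC|RECVAPP|RECVFAC|20250101120000||ADT^A01|MSG0001|P|2.5\r"
    "PID|1||12345"
)

print(msh_field(SAMPLE, 4))     # sending facility (MSH-4)
print(output_filename(SAMPLE))  # file name derived from MSH-9
```

Falling back to a default name when MSH-9 is missing is one way to handle the "default actions" concern. A production version would probably also need a lookup for unexpected MSH-4 values.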

Using the recommended naming convention of "To_FILE_<IntegrationName>"

I decided to use a generic file name and path in the default settings.

I created a custom class that extended the EnsLib.File.

I've been trying to set up a script I can run after installing an instance to enable IPM across all namespaces. I have been able to install IPM successfully using

set r = ##class(%Net.HttpRequest).%New(),r.Server="pm.community.intersystems.com",r.SSLConfiguration="ISC.FeatureTracker.SSL.Config" d r.Get("/packages/zpm/latest/installer"),$system.OBJ.LoadStream(r.HttpResponse.Data,"c")

and then running the zpm commands

repo -r -n registry -url https://pm.community.intersystems.

Hey Community,

We're pleased to invite all the developers to the upcoming kick-off webinar for the InterSystems .Net, Java, Python, and JavaScript Contest!

During the webinar, you will discover the exciting challenges and opportunities that await developers in this contest. We will also discuss the topics we would like the participants to cover and show you how to develop, build, and deploy applications using the InterSystems IRIS data platform.

Date & Time: Wednesday, September 24 – 11:30 am EDT | 5:30 pm CEST

Due to MySQL's interpretation of SCHEMA differing from the common SQL understanding (as seen in IRIS/SQL Server/Oracle), our automated Linked Table Wizard may encounter errors when attempting to retrieve metadata information to build the Linked Table.

(This also applies to Linked Procedures and Views)

When attempting to create a Linked Table through the Wizard, you will encounter an error that looks something like this:

ERROR #5535: SQL Gateway catalog table error in 'SQLPrimaryKeys'. Error: ' SQLState: (HY000) NativeError: [0] Message: [MySQL][ODBC 8.3(a) Driver][mysqld-5.5.5-10.4.

In the previous article, we saw how to build a customer service AI agent with smolagents and InterSystems IRIS, combining SQL, RAG with vector search, and interoperability.

In that case, we used cloud models (OpenAI) for the LLM and embeddings.

This time, we’ll take it one step further: running the same agent, but with local models thanks to Ollama.

Hi,

We introduced Git into our workflow and exported all files with $SYSTEM.OBJ.ExportUDL.

Everything was fine so far, but for some reason the export adds an extra line to classes (routines are OK as far as I can see).

On the server side, the extra line isn't there.

The problem is that when we check out a branch and a class has changed, we automatically compile it from the repository into a namespace created for the developer, e.g. DEV_001, DEV_002, and so on.

We are excited to announce the general availability of JediSoft IRISsync®, our new synchronization and comparison solution built on InterSystems IRIS technology. IRISsync makes it easy to synchronize and compare IRIS instances.

IRISsync was voted runner-up in the "Most Likely to Use" category at InterSystems READY 2025 Demos and Drinks.

A huge thanks to everyone who supported us — we’re thrilled to see IRISsync resonating with the InterSystems user community!

IRISsync provides clear visibility into differences between environments.

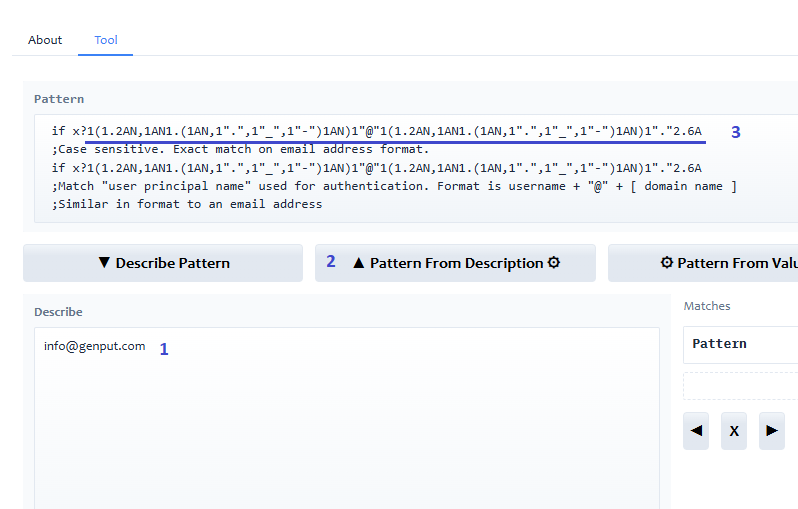

This article announces that pre-built pattern expressions are now available from the demo application.

AI pattern deduction requires ten or more sample values to get warmed up.

Entering a single value for a pattern has therefore been repurposed to retrieve pre-built patterns.

Paste a sample value, for example an email address, into the description and press "Pattern from Description".

The sample is tested against the available built-in patterns, and any matching patterns and their descriptions are displayed.

Patterns can also be retrieved by keyword.

Using OpenEHR with InterSystems IRIS

Occasionally, we get questions about using OpenEHR with InterSystems. Typically, these discussions focus on why and how an organization wants to implement OpenEHR in building applications. Here’s a brief guide:

I am struggling to understand how FDN works within HealthShare, in particular the parts related to the Provider Directory. Does anyone have examples of using FDN that they would be able to share?

#InterSystems Demo Games entry

A text-to-SQL demo on MQTT data analytics with RAG.

🗣 Presenter: @Jeff Liu, Sales Engineer, InterSystems

You’ve probably already seen that the September Article Bounty is in full swing! 🚀

You can either submit an existing up-to-date article on one of the topics and earn 30 points,

or write a brand-new article from scratch and get a bounty of 🏆 5,000 points once it’s approved! 🎉

I would like to learn about the Binary and DocumentReference FHIR resources for PDF data stored in them. I think the Binary resource is the best fit for a PDF document stored in FHIR. Sometimes a large 15-page PDF (~35md) is converted to base64, and the resulting base64Binary data is about 50 lakh (5 million) characters long. This data is stored in the data field of the Binary resource (https://www.hl7.org/fhir/R4/binary.html), which is the resource used in my case. Does it support a base64 length of 50 lakh characters? Can this resource be inserted into IRIS?
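The size arithmetic behind the question can be checked with a small sketch. It assumes that "50 lac" means 50 lakh, i.e. 5,000,000 characters, and that the data uses standard padded base64, which encodes every 3 bytes as 4 characters. The function names are hypothetical, and the 35 MiB figure is only an illustrative input. The sketch says nothing about what limits IRIS or a FHIR server may apply.

```python
import base64


def base64_length(n_bytes: int) -> int:
    """Characters needed to encode n_bytes as standard padded base64."""
    return 4 * ((n_bytes + 2) // 3)  # integer form of 4 * ceil(n_bytes / 3)


def max_decoded_bytes(n_chars: int) -> int:
    """Upper bound on the decoded size of a padded base64 string of n_chars."""
    return (n_chars // 4) * 3


# Sanity check against the standard library encoder.
assert len(base64.b64encode(b"x" * 1000)) == base64_length(1000)

# A 5,000,000-character base64 string decodes to at most this many bytes.
print(max_decoded_bytes(5_000_000))

# Illustrative only: the encoded length of a hypothetical 35 MiB file.
print(base64_length(35 * 1024 * 1024))
```

Under these assumptions, 5,000,000 base64 characters correspond to roughly 3.75 million bytes of PDF data, so it is worth confirming the actual file size before testing any length limits.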

Hi Team, the Caché system is not starting when we start one of our software applications, and we are getting the above error.

Hello everyone!

We won’t lie to you, we’re really looking forward to this webinar. Because of its topic, speaker, and everything we’ll learn from it. We invite you to this webinar in Spanish: “Connecting Sensors with InterSystems IRIS” on Thursday, October 2, at 4:00 PM (CEST).