OMOP Odyssey - Vanna AI ( The Underworld )

.png)

Vanna.AI - Personalized AI InterSystems OMOP Agent

.png)

Along this OMOP Journey, from the OHDSI book to Achilles, you can begin to understand the power of the OMOP Common Data Model when you see the mix of well written R and SQL deriving results for large scale analytics that are shareable across organizations. I however do not have a third normal form brain and about a month ago on the Journey we employed Databricks Genie to generate sql for us utilizing InterSystems OMOP and Python interoperability. This was fantastic, but left some magic under the hood in Databricks on how the RAG "model" was being constructed and the LLM in use to pull it off.

This point in the OMOP Journey we met Vanna.ai on the same beaten path...

Vanna is a Python package that uses retrieval augmentation to help you generate accurate SQL queries for your database using LLMs. Vanna works in two easy steps - train a RAG “model” on your data, and then ask questions which will return SQL queries that can be set up to automatically run on your database.

Vanna exposes all the pieces to do it ourselves with more control and our own stack against the OMOP Common Data Model.

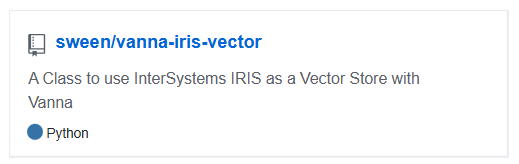

The approach from the Vanna camp I found particularly fantastic, and conceptually it felt like a magic trick was being performed, and one could certainly argue that was exactly what was happening.

Vanna needs 3 choices to pull of its magic trick, a sql database, a vector database, and an LLM. Just envision a dealer handing you out three piles and making you choose from each one.

So if its not obvious, our sql database is InterSystems OMOP implementing the Common Data Model, our LLM of choice is Gemini, and for the quick and dirty evaluation we are using Chroma DB for a vector to get to the point quickly in python.

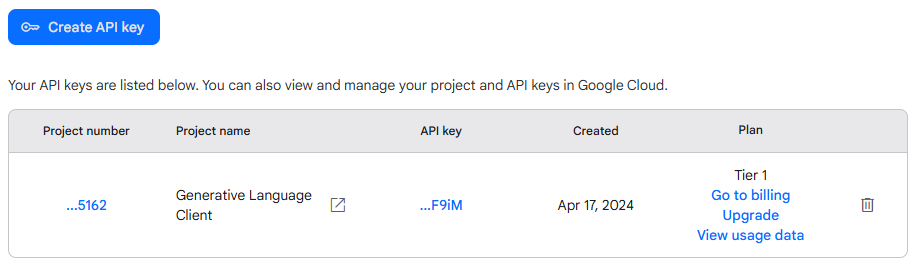

Gemini

So I cut a quick key and grew up a little bit and actually paid for it, I tried the free route with the rate limits of 50 prompts a day, and 1 per minute and it was unsettling... I may be happier being completely broke anyway, so we will see.

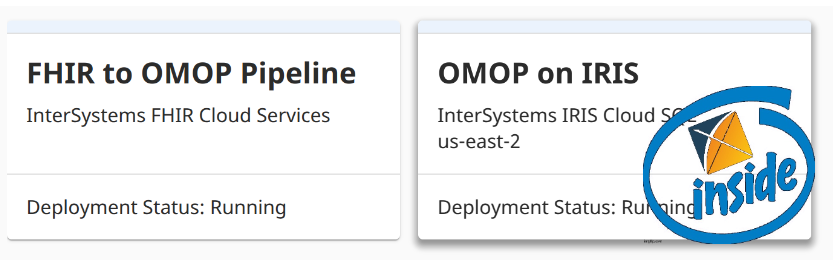

InterSystems OMOP

I am using my same fading trial as the [other posts](https://community.intersystems.com/smartsearch?search=OMOP+Journey). The CDM is loaded with about 100 patient pop per United State region with the pracs and orgs to boot.

Vanna

Let's turn the letters (get it?) notebook style and spin the wheel (get it again?) and put Vanna to work...pip3 install 'vanna[chromadb,gemini,sqlalchemy-iris]'

Lets organize our pythons.

from vanna.chromadb import ChromaDB_VectorStore

from vanna.google import GoogleGeminiChat

from sqlalchemy import create_engine

import pandas as pd

import ssl

from sqlalchemy import create_engine

import time

Initialize the star of our show and introduce her to our model. Kind of weird right, Vanna (White) is a model.

class MyVanna(ChromaDB_VectorStore, GoogleGeminiChat):

def __init__(self, config=None):

ChromaDB_VectorStore.__init__(self, config=config)

GoogleGeminiChat.__init__(self, config={'api_key': "shaazButt", 'model': "gemini-2.0-flash"})

vn = MyVanna()

Let's connect to our InterSystems OMOP Cloud deployment using sqlalchemy-iris from @caretdev. The work done with this dialect is quickly becoming the key ingredient for modern data interoperability of iris products in the data world.

engine = create_engine("iris://SQLAdmin:LordFauntleroy!!!@k8s-0a6bc2ca-adb040ad-c7bf2ee7c6-e6b05ee242f76bf2.elb.us-east-1.amazonaws.com:443/USER", connect_args={"sslcontext": context})

context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)

context.verify_mode=ssl.CERT_OPTIONAL

context.check_hostname = False

context.load_verify_locations("vanna-omop.pem")

conn = engine.connect()

You define a function that takes in a SQL query as a string and returns a pandas dataframe. This gives Vanna a function that it can use to run the SQL on the OMOP Common Data Model.

def run_sql(sql: str) -> pd.DataFrame:

df = pd.read_sql_query(sql, conn)

return df

vn.run_sql = run_sql

vn.run_sql_is_set = True

Feeding the Model with a Menu

The information schema query may need some tweaking depending on your database. This is a good starting point. This will break up the information schema into bite-sized chunks that can be referenced by the LLM... If you like the plan, then uncomment this and run it to train Vanna.

df_information_schema = vn.run_sql("SELECT * FROM INFORMATION_SCHEMA.COLUMNS")

plan = vn.get_training_plan_generic(df_information_schema)

plan

vn.train(plan=plan)

Training

The following are methods for adding training data. Make sure you modify the examples to match your database. DDL statements are powerful because they specify table names, column names, types, and potentially relationships. These ddl's are generated with the now supported DataBaseConnector as outlined in this [post](https://community.intersystems.com/post/omop-odyssey-celebration-house-hades).vn.train(ddl="""

--iris CDM DDL Specification for OMOP Common Data Model 5.4

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.person (

person_id integer NOT NULL,

gender_concept_id integer NOT NULL,

year_of_birth integer NOT NULL,

month_of_birth integer NULL,

day_of_birth integer NULL,

birth_datetime datetime NULL,

race_source_concept_id integer NULL,

ethnicity_source_value varchar(50) NULL,

ethnicity_source_concept_id integer NULL );

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.observation_period (

observation_period_id integer NOT NULL,

person_id integer NOT NULL,

observation_period_start_date date NOT NULL,

observation_period_end_date date NOT NULL,

period_type_concept_id integer NOT NULL );

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.visit_occurrence (

visit_occurrence_id integer NOT NULL,

discharged_to_source_value varchar(50) NULL,

preceding_visit_occurrence_id integer NULL );

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.visit_detail (

visit_detail_id integer NOT NULL,

person_id integer NOT NULL,

visit_detail_concept_id integer NOT NULL,

provider_id integer NULL,

care_site_id integer NULL,

visit_detail_source_value varchar(50) NULL,

visit_detail_source_concept_id Integer NULL,

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.condition_occurrence (

condition_occurrence_id integer NOT NULL,

person_id integer NOT NULL,

visit_detail_id integer NULL,

condition_source_value varchar(50) NULL,

condition_source_concept_id integer NULL,

condition_status_source_value varchar(50) NULL );

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.drug_exposure (

drug_exposure_id integer NOT NULL,

person_id integer NOT NULL,

dose_unit_source_value varchar(50) NULL );

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.procedure_occurrence (

procedure_occurrence_id integer NOT NULL,

person_id integer NOT NULL,

procedure_concept_id integer NOT NULL,

procedure_date date NOT NULL,

procedure_source_concept_id integer NULL,

modifier_source_value varchar(50) NULL );

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.device_exposure (

device_exposure_id integer NOT NULL,

person_id integer NOT NULL,

device_concept_id integer NOT NULL,

unit_source_value varchar(50) NULL,

unit_source_concept_id integer NULL );

--HINT DISTRIBUTE ON KEY (person_id)

CREATE TABLE omopcdm54.observation (

observation_id integer NOT NULL,

person_id integer NOT NULL,

observation_concept_id integer NOT NULL,

observation_date date NOT NULL,

observation_datetime datetime NULL,

<SNIP>

""")

Sometimes you may want to add documentation about your business terminology or definitions, here I like to add the resource names from FHIR that were transformed to OMOP.

vn.train(documentation="Our business is to provide tools for generating evicence in the OHDSI community from the CDM")

vn.train(documentation="Another word for care_site is organization.")

vn.train(documentation="Another word for provider is practitioner.")

Now lets add all the data from the InterSystems OMOP Common Data Model, probably a better way to do this, but I get paid by the byte.

cdmtables = ["care_site", "cdm_source", "cohort", "cohort_definition", "concept", "concept_ancestor", "concept_class", "concept_relationship", "concept_synonym", "condition_era", "condition_occurrence", "cost", "death", "device_exposure", "domain", "dose_era", "drug_era", "drug_exposure", "drug_strength", "episode", "episode_event", "fact_relationship", "location", "measurement", "metadata", "note", "note_nlp", "observation", "observation_period", "payer_plan_period", "person", "procedure_occurrence", "provider", "relationship", "source_to_concept_map", "specimen", "visit_detail", "visit_occurrence", "vocabulary"]

for table in cdmtables:

vn.train(sql="SELECT * FROM WHERE OMOPCDM54." + table)

time.sleep(60)

I added the ability for Gemini to see the data here, ensure you want to do this in your travels or give Google your OMOP data with slight of hand.

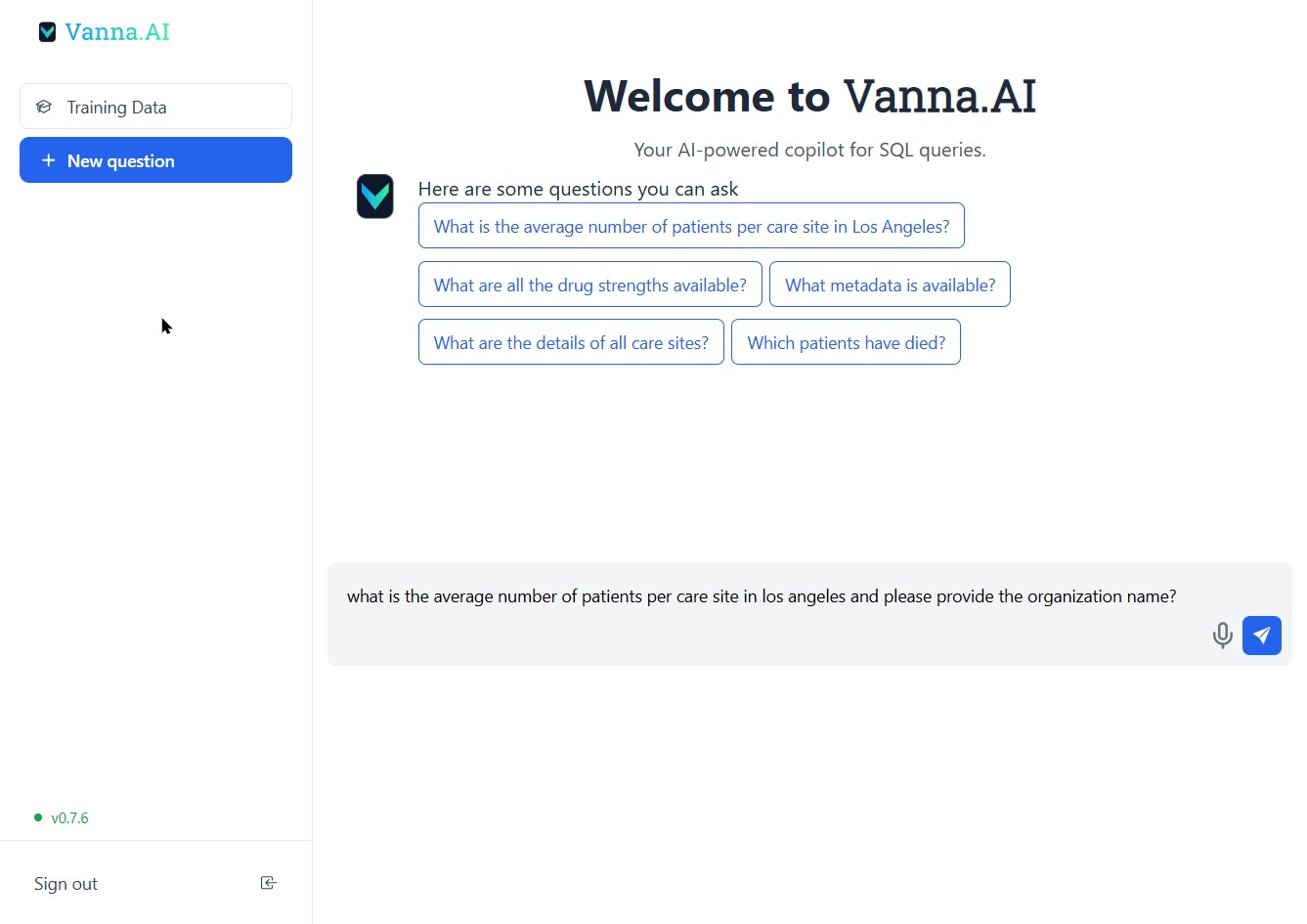

Lets do our best Pat Sajak, and boot the shiny Vanna app.

from vanna.flask import VannaFlaskApp

app = VannaFlaskApp(vn,allow_llm_to_see_data=True, debug=False)

app.run()

Skynet!

This is a bit hackish, but really where I want to go with AI future forward integrating with apps, here we ask in natural language a question, which returns a sql query, then we immediately use that query against the InterSystems OMOP deployment using sqlalchemy-iris.

while True:

import io

import sys

old_stdout = sys.stdout

sys.stdout = io.StringIO() # Redirect stdout to a dummy stream

question = 'How Many Care Sites are there in Los Angeles?'

sys.stdout = old_stdout

sql_query = vn.ask(question)

print("Ask Vanna to generate a query from a question of the OMOP database...")

#print(type(sql_query))

raw_sql_to_send_to_sqlalchemy_iris = sql_query[0]

print("Vanna returns the query to use against the database.")

gar = raw_sql_to_send_to_sqlalchemy_iris.replace("FROM care_site","FROM OMOPCDM54.care_site")

print(gar)

print("Now use sqlalchemy-iris with the generated query back to the OMOP database...")

result = conn.exec_driver_sql(gar)

#print(result)

for row in result:

print(row[0])

time.sleep(3)

Utilities

At any time you can inspect what OMOP data the Vanna package is able to reference. You can also remove training data if there's obsolete/incorrect information (you can do this through the UI too).training_data = vn.get_training_data()

training_data

vn.remove_training_data(id='omop-ddl')

About Using IRIS Vectors

Wish me luck here, if I manage to crush all the things to crush and resist the sun coming out, Ill implement iris vectors in vanna with the following repo.Comments

💡 This article is considered InterSystems Data Platform Best Practice.