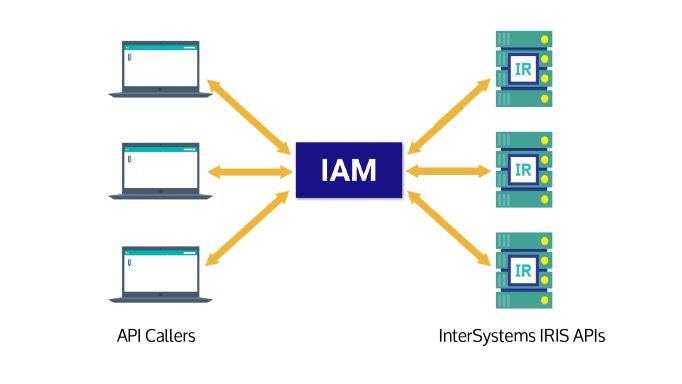

We have a rule to disable a user account if they have not logged in for a certain number of days. IRIS Audit database logs many events such as login failures for example. It can be configured to log successful logins as well. We have IRIS clusters with many IRIS instances. I like to run queries against audit data from ALL IRIS instances and identify user accounts which have not logged into ANY IRIS instance.

Instead of running queries against many IRIS instances, I developed audit-consolidator to consolidate audit data from many IRIS instances into ONE database table to run queries against the

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)