Hey Community,

Enjoy the new video on InterSystems Developers YouTube:

Hello all,

I'm trying to build a cube based on a linked table, but it seems that IRIS is not able to do it :O

Long story short, I have a linked table in IRIS that sources a Microsoft SQL Server table (using the standard linked table feature from the Management Portal). It works fine; I can access it using SQL, as I have many other times. On top of that, I've created a cube in DeepSee (OK, Analytics) that uses this class as its source. It compiles correctly with no errors. When I build it with 100 records, all goes well and I can see results in the Analyzer.

Existing cube deleted.

Hi,

We have some concerns about how to implement FHIR: Should we build a facade? How do we get resources? What is a resource? Is a resource "Patient", or an instance of a patient? How can we have a FHIR repository AND send the patient details to HealthShare MPI?

Has anyone successfully created a FHIR server that has custom methods, handles "normal" methods, forwards requests on to HealthShare MPI, processes the response, and creates an accurate FHIR response back to the calling system?

Hi experts

I'm trying to configure an IRIS ODBC connection with "Windows NT authentication using the network login ID". I have created the System DSN as below:


and the user (PROD\test) in the SQL Gateway connection:


However, as the error message suggests, IRIS is trying to connect as PROD\svc_mist rather than the PROD\test account configured above.

Is there any way to configure the ODBC connection to use a specified account with the Windows authentication method?

I was facing the same issue Jerry faced when connecting IRIS to SQL Server. My ODBC connection is configured to authenticate via Windows authentication.

Configure IRIS ODBC connection with Windows authentication using a

How did I fix it for myself?

<ORGNAME>\<ASSETID>$.

Folks!

Recently I found several one-line ObjectScript commands on DC and thought it would be great not to lose them, and to collect more!

So I decided to gather the first few cases, put them in one OEX project, and share them with you!

And here is how you can use them.

1. Create a client SSL configuration.

set $namespace="%SYS", name="DefaultSSL" do:'##class(Security.SSLConfigs).Exists(name) ##class(Security.SSLConfigs).Create(name)

Useful if you need to read content from a URL.

Don't forget to return to the previous namespace afterwards, or add

n $namespace

before the call.

Hi community.

I have a query:

SELECT
    nameField,
    dateField,
    anotherDateField
FROM
(
    SELECT
        MIN(someDate) AS dateField,
        nameField,
        anotherDateField
    FROM $$$SOURCE
    WHERE $$$RESTRICT
    GROUP BY someOtherField
)
WHERE dateField >= anotherDateField

This query should filter the data by the minimum value of the someDate field, but it doesn't. It displays all values together, regardless of the external filter. The exact same query (without the $$$ tokens, of course) works fine in a regular SQL runtime.

My guess is that $$$RESTRICT does this:

WHERE source.

If you start with InterSystems ObjectScript, you will meet the XECUTE command.

And beginners may ask: where and why would I need to use this?

The official documentation has a rich collection of code snippets, but no practical use case.

I recently came across a use case that I'd like to share with you.

When you build an IRIS container with Docker, in most cases you run the initialization script with

iris session iris < iris.script

This means you open a terminal session and feed your input line-by-line from the script.

That's fine and easy if you call methods, functions, or commands.


In this part of the OMOP Journey, before attempting to challenge Scylla, we reflect on how fortunate we are that the InterSystems OMOP transform is built on Bulk FHIR Export as the source payload. This opens up hands-off interoperability between the InterSystems OMOP transform and several FHIR® vendors, this time the Google Cloud Healthcare API.

Overview

This release focuses on upgrade reliability, security expansion, and support experience improvements across multiple InterSystems Cloud Services. With this version, all major offerings, including FHIR Server, InterSystems Data Fabric Studio (IDS), IDS with Supply Chain, and IRIS Managed Services, now support Advanced Security, providing a unified and enhanced security posture.


Here is an option for your headspace if you are designing a multi-cluster architecture and the Operator is an FTE to the design. You can run the Operator from a central Kubernetes cluster (A) and point it at another Kubernetes cluster (B), so that when you apply an IrisCluster to B, the Operator works remotely from A and plans the cluster accordingly on B. This design keeps some resource heat off the actual workload cluster, spares us some ServiceAccounts/RBAC, and leaves only one Operator deployment to worry about, so we can concentrate on the IRIS workloads.


I am regularly contacted by customers about memory sizing when they get alerts that free memory is below a threshold, or they observe that free memory has dropped suddenly. Is there a problem? Will their application stop working because it has run out of memory for running system and application processes? Nearly always, the answer is no, there is nothing to worry about. But that simple answer is usually not enough. What's going on?

Consider the chart below. It shows the output of the free metric in vmstat.

Hi all,

This is a quick tip about how to use case-insensitive URLs in a REST API.

If you have a class that extends %CSP.REST and Ens.BusinessService to create a REST API service, and you have defined your web application in lowercase, along with a URL map like this:


XData UrlMap [ XMLNamespace = "http://www.intersystems.com/urlmap" ]
{
<Routes>
    <Route Url="/user" Method="POST" Call="User"/>
    <Route Url="/login" Method="POST" Call="Login"/>
</Routes>
}

Your service only accepts the URL in lowercase, e.g. http://myserver/myproduction/user

If the URL contains any uppercase character, it doesn't work.


If you are in the business of building robust High Availability or Disaster Recovery, or of stamping out multiple environments rapidly and consistently, Karmada may just be the engine powering your Cloning Facility.

It's been a while since the new UI for Productions and DTL was published as a preview, and I would like to know your opinions about it.

WARNING: This is a personal opinion, totally personal and not related to InterSystems Corporation.

I'm going to start with the Interoperability screen:


The style is sober and without frills, following the line of cloud services design, I like it.

But there's always a but... or maybe two:

In my opinion there is too much information: the left menu is superfluous. It's true that you can collapse it, but I don't want to do that each time I use the

If one of your packages on OEX receives a review, OEX notifies you only about YOUR own package.

The rating reflects the experience of the reviewer with the status found at the time of review.

It is kind of a snapshot and might have changed meanwhile.

Reviews by other members of the community are marked by * in the last column.

I also submitted a bunch of pull requests on GitHub when I found a problem I could fix.

Some were accepted and merged, and some were just ignored.

So if you made a major change and expect a changed review, just let me know.

Hi,

I'm trying to run some scripts on Windows, using an IRISHealth Community instance.

I'm just trying to run a simple sequence of commands so I can run irissession in a non-interactive mode, like:

irissession.exe IRISHEALTH -U "%SYS" < "myprogram.iris"

The contents of myprogram.iris are, for instance, the following, which run an online backup:

set $namespace="%SYS"

set cbk = "C:\Test1.cbk"

set log = "C:\Test1.

Hello. I need to transform a message

FROM:

MSH|^~\&|
SCH||61490||
PID|1||
RGS|1||1
AIS|1||
AIS|2||
AIS|3||
AIL|1||
AIP|1||

TO:

MSH|^~\&|
SCH||61490||
PID|1||
RGS|1||1
AIS|1||
AIL|1||
AIP|1||
RGS|1||1
AIS|2||
AIL|1||
AIP|1||
RGS|1||1
AIS|3||
AIL|1||
AIP|1||

The RGS, AIS, AIL and AIP segments all sit in the RGS group. The single incoming RGS segment must be copied into every group: if 3 AIS segments come in, I need 3 RGS groups; if 2, I need 2 RGS groups, and so on.

In my DTL (screenshot below), I have currently hardcoded the RGS index (1, 2, 3), but this will not be sufficient if 4 AIS segments are sent in.
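The regrouping rule can be sketched independently of DTL. The minimal Python sketch below makes two assumptions. First, the message is available as a plain list of segment strings. Second, the single RGS segment and the AIL and AIP segments are copied unchanged into each group, as in the TO example. `regroup_by_ais` is a hypothetical helper, not an Ensemble or HL7 API. The point it illustrates is that the number of groups comes from iterating over the AIS segments, not from hardcoded indexes.

```python
def regroup_by_ais(segments: list[str]) -> list[str]:
    """Return the segments with one RGS/AIS/AIL/AIP group per incoming AIS.

    Assumes the input holds a single RGS segment, and that the RGS, AIL and
    AIP segments are copied unchanged into every group (as in the TO example).
    """

    def seg_type(seg: str) -> str:
        # The segment ID is everything before the first field separator.
        return seg.split("|", 1)[0]

    group_types = {"RGS", "AIS", "AIL", "AIP"}
    # All other segments (MSH, SCH, PID, ...) keep their order ahead of the groups.
    others = [s for s in segments if seg_type(s) not in group_types]
    rgs = next(s for s in segments if seg_type(s) == "RGS")
    ail = [s for s in segments if seg_type(s) == "AIL"]
    aip = [s for s in segments if seg_type(s) == "AIP"]
    ais_list = [s for s in segments if seg_type(s) == "AIS"]

    out = list(others)
    for ais in ais_list:  # one group per AIS segment, however many arrive
        out.append(rgs)
        out.append(ais)
        out.extend(ail)
        out.extend(aip)
    return out


incoming = [
    r"MSH|^~\&|", "SCH||61490||", "PID|1||", "RGS|1||1",
    "AIS|1||", "AIS|2||", "AIS|3||", "AIL|1||", "AIP|1||",
]
for seg in regroup_by_ais(incoming):
    print(seg)
```

In a DTL, the same idea would probably be a `foreach` over the source AIS segments. The loop key would then serve as the RGS group index in the target path, in place of the literal 1, 2, 3.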

The 2025.1.2 and 2024.1.5 maintenance releases of InterSystems IRIS® data platform, InterSystems IRIS® for Health™, and HealthShare® Health Connect are now Generally Available (GA).

Hello community,

I wanted to share my experience working on large data projects. Over the years, I have had the opportunity to handle massive amounts of patient data, payor data and transactional logs while working in the hospital industry. I have had the chance to build huge reports that had to be written using advanced logic, fetching data across multiple tables whose indexing did not help me write efficient code.

Here is what I have learned about managing large data efficiently.

Choosing the right data access method.

As we all here in the community are aware, IRIS provides multiple ways to access data. Choosing the right method depends on the requirement.

Set ToDate=+$H, FromDate=+$H-1  // walk the "Date" index up to and including today
For {
    Set FromDate=$Order(^PatientD("Date",FromDate)) Quit:(FromDate="")||(FromDate>ToDate)
    Set PatId=""
    For {
        // visit every patient ID stored under this date
        Set PatId=$Order(^PatientD("Date",FromDate,PatId)) Quit:PatId=""
        Write $Get(^PatientD("Date",FromDate,PatId)),!
    }
}

Hi all,

Recently we were experimenting with a variable target on a routing rule and noticed some interesting behaviour. Code below:

<rule name="My Rule" disabled="false">
    <constraint name="docCategory" value="Generic.2.3.1"></constraint>
    <when condition="((Document.{MSH:MessageType.triggerevent}="A43"))">
        <send transform="" target="To" _(pContext.Document.GetValueAt("MSH:SendingFacility"))_"FromServiceTCPOpr"></send>
    </when>
</rule>

This code compiles and works.

Our application has a ZEN report that accepts a sorting parameter and a fundraiser parameter. The sorting parameter tells us which order the pages should be in, and the fundraiser parameter limits the data shown (e.g. which user has requested the report).

Property fundraiser As %ZEN.Datatype.string(ZENURL = "FID");

Property SortOrder As %ZEN.Datatype.string(ZENURL = "SORTME") [ InitialExpression = "WorkId" ];

/// This XML defines the logical contents of this report.

XData ReportDefinition [ XMLNamespace = "http://www.intersystems.


Rancher Government Hauler streamlines deploying and maintaining InterSystems container workloads in air-gapped environments by simplifying how you package and move required assets. It treats container images, Helm charts, and other files as content and collections, letting you fetch, store, and distribute them declaratively or via CLI, without changing your existing workflows. This means your charts and what-have-you can have conditionals on their pull locations in Helm values, etc.

If you have been tracking how HealthShare is being deployed via IPM packages, you can certainly appreciate the adoption of OCI-compliant storage for the packages themselves using ORAS, which is core to the Hauler solution.


Hello InterSystems Community,

I hope you're all doing well. I'm reaching out to ask if there's any way to enable a dark theme or dark mode for the HealthShare Management Portal.

I have a visual impairment (amblyopia/lazy eye) which means I'm nearly blind in one eye. Like many people with visual difficulties, I find that bright white backgrounds and interfaces cause significant eye strain and fatigue. This makes it challenging to work with the Management Portal for extended periods.

Hi Community,

Enjoy the new video on InterSystems Developers YouTube:

Starting out with ObjectScript is really exciting, but it can also feel a little unusual if you're used to other languages. Many beginners trip over the same hurdles, so here are a few "gotchas" to watch out for (plus a few friendly tips for avoiding them).

NAMING THINGS RANDOMLY

We have all been guilty of naming something Test1 or MyClass just to move on quickly. But once your project grows, these names become a nightmare.

➡ Pick clear, consistent names from the start. Think of it as leaving breadcrumbs for your future self and your teammates.

Businesses often use in-memory databases or key-value stores (caching layers) when applications require extremely high performance. However, in-memory databases incur a high total cost of ownership and have hard scalability limits, leading to reliability problems and restart delays when memory limits are exceeded. In-memory key-value stores share these limitations and also introduce architectural complexity and network latency.

This article explains why InterSystems IRIS™ data platform is a superior alternative to in-memory databases and key-value stores for high-performance SQL and NoSQL applications.

InterSystems IRIS is the only persistent database that can match or beat the performance of in-memory databases and caching layers for concurrent data ingestion and analytics processing. It can process incoming transactions, persist the data to disk, and index it for analytics in under one microsecond on commercially available hardware without introducing network latency.

The superior ingest performance of InterSystems IRIS results in part from its multi-dimensional data engine, which allows efficient and compact storage in a rich data structure. By using an efficient, multi-dimensional data model with sparse storage techniques instead of two-dimensional tables, it accomplishes random data access and updates with very high performance, fewer resources and less disk capacity. It also provides in-memory, in-process APIs in addition to traditional TCP/IP access APIs to optimize ingest performance.

Hi Community,

It's time to announce the winners of the InterSystems .Net, Java, Python, and JavaScript Contest!

Thanks to all our amazing participants who submitted 11 applications 🔥


Now it's time to announce the winners!

Not sure where to ask for some helpful hints about IRIS 2019.1 in 2025, but here goes.

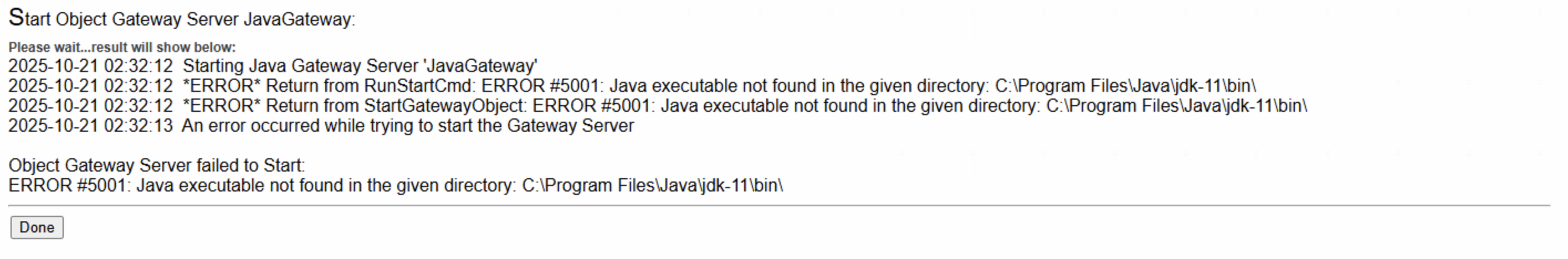

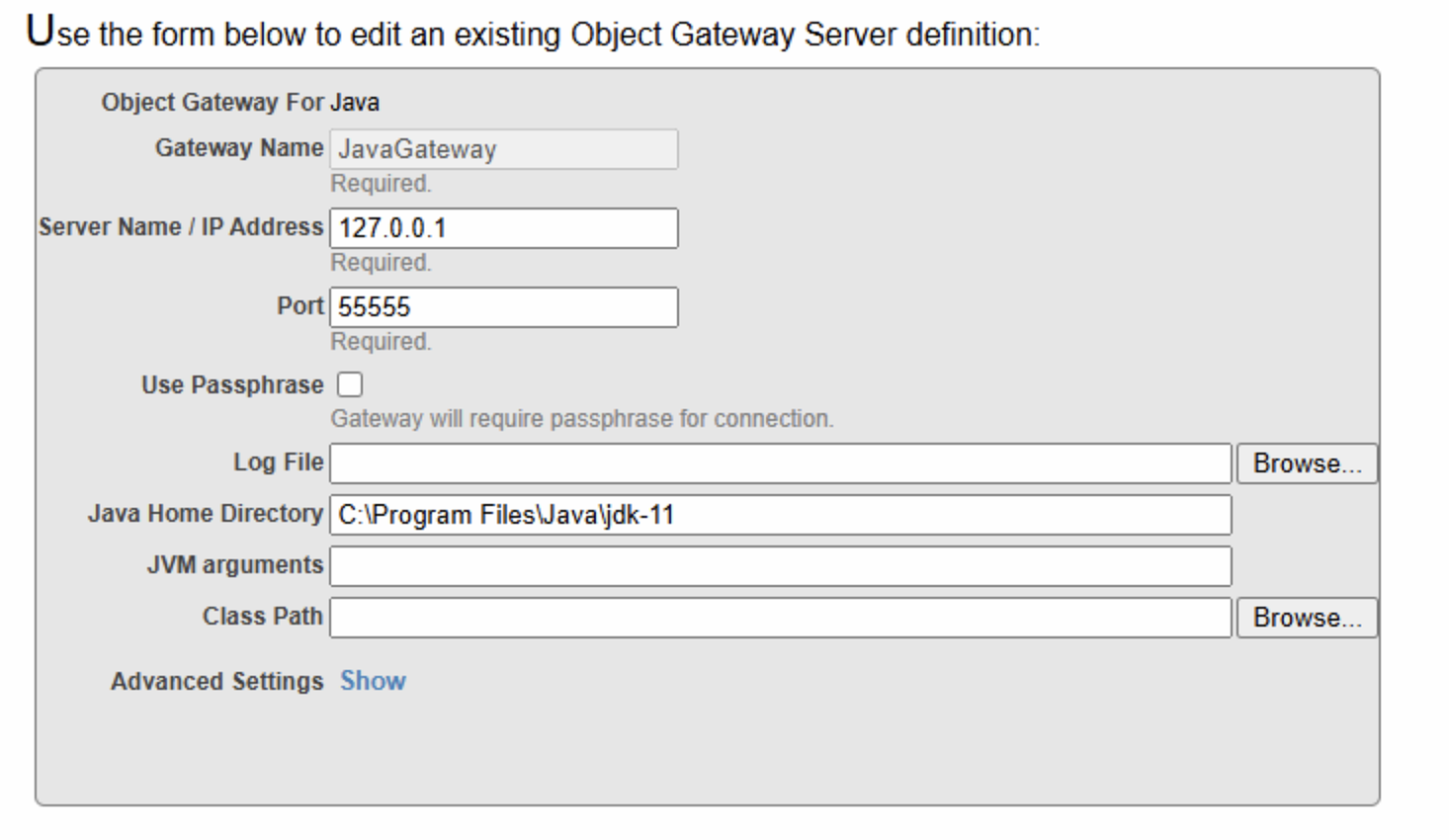

I'm trying to create a new Java gateway to run some Java code through ObjectScript, but I keep getting an error saying "Java executable not found in the given directory":

This is my Object Gateway Server definition:

I am not sure what is wrong, or whether I am defining this gateway incorrectly, because I can verify that the executable exists in the provided directory.

Any help or hint is greatly appreciated!

Hi community,

I have a service that uses EnsLib.RecordMap.Service.FTPService to capture files in an FTP directory.

Instead of uploading them all at once, I need to do so one at a time.

I have a class that extends this class because it preprocesses, saves everything in the RecordMap class, and then processes all the records at once.

When I invoke the BP, it does so through the method set tStatus = ..SendRequest(message, 1).

I've set the SynchronousSend flag to 1, but it continues processing all the files at