Has anyone noticed weird behavior when upgrading to HealthShare Health Connect 2024.1?

Wednesday I upgraded our TEST environment from IRIS for UNIX (Red Hat Enterprise Linux 8 for x86-64) 2022.1.4 (Build 812_0_22913U) [HealthConnect:3.5.0-1.m1] [HealthConnect:3.5.0-1.m1] to IRIS for UNIX (Red Hat Enterprise Linux 8 for x86-64) 2024.1 (Build 267_2U) [HealthConnect:3.5.0-1.m1].

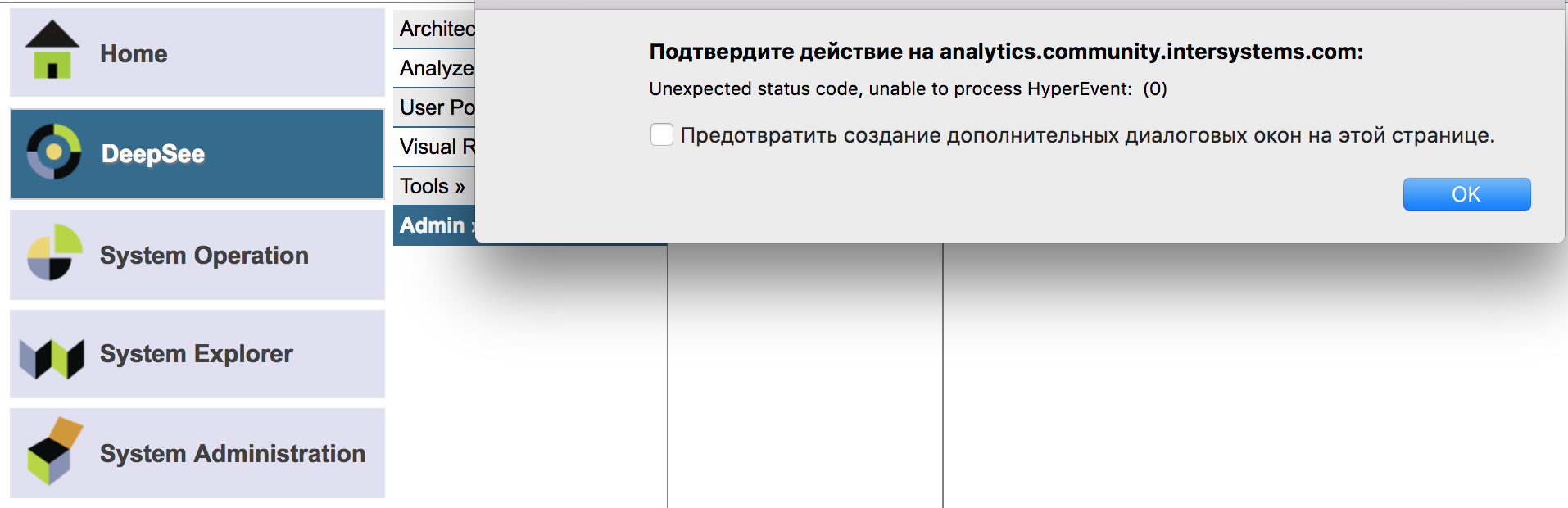

Some of our Business Processes have been throwing...

.png)