I'm trying to gather more data for one of my namespaces so I can do some analysis. However, when I run .BuildIndex(), nothing populates in the resulting SQL table. I've tried deleting the class, reimporting, and compiling, and still nothing. I feel like I'm doing something OBVIOUS that's wrong, but I can't quite figure out what it is. Here's what I'm doing (customer name redacted):

Class CUST***.System.Cerner.Hl7.SearchTable Extends EnsLib.HL7.SearchTable

{

Parameter DOCCLASS = "EnsLib.HL7.Message";

Parameter EXTENTSIZE = 4000000;

XData SearchSpec [ XMLNamespace = "http://

Hello Community,

I got the error below while connecting to IRIS Studio. However, executing Write ##class(%File).DirectoryExists("c:\intersystems\irishealthcomm\mgr\hscustom") returns true, and both the terminal and SMP work.


thanks!

Hey Developers,

Enjoy the new video on InterSystems Developers YouTube from our Tech Video Challenge:

I am receiving a FHIR response bundle back with a resource of patient. Using fromDao, I attempted to take the stream and put it into FHIRModel.R4.Patient but it is not mapping correctly. When I attempt to take FHIRModel.R4.Patient and write it out using toString(), all I am seeing is the resource

{"resourceType":"Patient"}

so the response is not mapping correctly to FHIRModel.R4.Patient. How have others handled this? Do I need to translate it to SDA since it does not fit the model format?
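
One thing worth checking, offered as a guess since the post does not show the payload: if the stream holds a Bundle, the Patient sits under `entry[].resource`, so the Bundle has to be unwrapped before the Patient JSON is handed to a Patient model class. Below is a minimal Python sketch of that unwrapping. The sample bundle is made up, and `resources_of_type` is a hypothetical helper name, not part of any InterSystems API.

```python
import json


def resources_of_type(bundle_json, resource_type):
    """Return every entry[].resource in a FHIR Bundle whose resourceType matches."""
    bundle = json.loads(bundle_json)
    if bundle.get("resourceType") != "Bundle":
        raise ValueError("expected a Bundle, got %r" % bundle.get("resourceType"))
    return [
        entry["resource"]
        for entry in bundle.get("entry", [])
        if entry.get("resource", {}).get("resourceType") == resource_type
    ]


# Made-up sample payload, for illustration only.
SAMPLE_BUNDLE = json.dumps({
    "resourceType": "Bundle",
    "type": "searchset",
    "entry": [
        {"resource": {"resourceType": "Patient", "id": "example",
                      "name": [{"family": "Doe", "given": ["Jane"]}]}},
        {"resource": {"resourceType": "Observation", "id": "obs-1"}},
    ],
})

patients = resources_of_type(SAMPLE_BUNDLE, "Patient")
# Each element is now a plain Patient resource that can be serialized on its own.
patient_json = json.dumps(patients[0])
```

If the unwrapped `patient_json` still maps to an empty Patient, the cause lies elsewhere and this sketch does not apply.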

HealthShare Unified Care Record Fundamentals – In Person (Boston, MA)* July 28-August 1, 2025

*Please review the important prerequisite requirements for this class prior to registering.

- Learn the architecture, configuration, and management of HealthShare Unified Care Record.

- This 5-day course teaches HealthShare Unified Care Record users and integrators the HealthShare Unified Care Record architecture and administration tasks.

- The course also includes how to install HealthShare Unified Care Record.

- This course is intended for HealthShare Unified Care Record developers, integrators,

I am trying to get the value of a Submit button on a CSP object page.

<input type="Submit" value="Assign" name="action" id="action">

I tried getting it through $GET(%Request.Data("action")), but it throws an Undefined error. Isn't $GET supposed to handle it if it's undefined anyway?

How do I get the "value" of the button pushed when the form is set to POST?

Hi everyone,

I’ve set up a scheduled task in InterSystems IRIS to run every night at 2:00 AM, but it doesn’t seem to trigger consistently. Some nights it works as expected, but other times it just skips without any error in the logs.

The task is marked as enabled, and there are no conflicts in the Task Manager. I've double-checked the system time and time zone settings, and everything looks fine.

Could it be a cache issue or something to do with system load?

Thanks!

Hey Community,

We're pleased to invite all the developers to the upcoming kick-off webinar for the InterSystems Developer Tools Contest!

Date & Time: Monday, July 14 – 11 am EDT | 5 pm CEST

When accessing management portal through IIS the page is not fully rendered and the buttons/links that are displayed don't work.

Management Portal works fine through private web-server.

Have just set up IIS/CSP Gateway to access Ensemble, and accessing the CSP Gateway configuration pages through IIS works fine (screenshot at end of post).

This is the view when accessing management portal through IIS (port 80) - missing images, links don't work, not all content displayed:


And this is the (top of the) view when accessing through the PWS (port 57772):


I've been following @Kyle Baxter 's helpful

You've probably encountered the terms Data Lake, Data Warehouse, and Data Fabric everywhere over the last 10-15 years. Everything can be solved with one of these three things, or a combination of them (here and here are a couple of articles from our official website in case you have any doubts about what each of these terms means). If we had to summarize the purpose of all these terms visually, we could say that they all try to solve situations like this:

Our organizations are like that room, a multitude of drawers filled with data everywhere, in which we are unable to find anything we need,

Developing with InterSystems Objects and SQL – Virtual July 28-August 1, 2025

- This 5-day course teaches programmers how to use the tools and techniques within the InterSystems® development environment.

- Students develop a database application using object-oriented design, building different types of IRIS classes.

- They learn how to store and retrieve data using Objects or SQL, and decide which approach is best for different use cases.

- They write code using ObjectScript, Python, and SQL, with most exercises offering the choice between ObjectScript and Python, and some exercises requiring

Hey Community!

Here's the recap of the final half-day of the InterSystems Ready 2025! It was the last chance to see everyone and say farewell until next time.

It was a warm and energetic closing, with great conversations, smiles, and unforgettable memories!

The final Ready 2025 moment with our amazing team!

I have built a REST operation to submit a JSON Request Body, and in the JSON Response Object, I need to pull out certain values like pureID, portalURL, and under the identifiers array the ClassifiedID that has a term."en_US" = "Scopus Author ID"
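
A minimal Python sketch of one way to walk such a response. The truncated excerpt in the post does not show the identifier entries, so the key names `portalUrl`, `identifiers[].type.term.en_US`, and `identifiers[].id` are assumptions, as are all the sample values. `scopus_author_ids` is a hypothetical helper name.

```python
import json


def scopus_author_ids(response_text, wanted_term="Scopus Author ID"):
    """Collect pureId, portalUrl and identifier id for entries whose en_US term matches."""
    body = json.loads(response_text)
    found = []
    for item in body.get("items", []):
        for ident in item.get("identifiers", []):
            # Assumed shape: {"id": "...", "type": {"term": {"en_US": "..."}}}
            term = ident.get("type", {}).get("term", {}).get("en_US")
            if term == wanted_term:
                found.append({
                    "pureId": item.get("pureId"),
                    "portalUrl": item.get("portalUrl"),
                    "id": ident.get("id"),
                })
    return found


# Made-up response following the assumed shape, for illustration only.
SAMPLE_RESPONSE = json.dumps({
    "count": 1,
    "items": [{
        "pureId": 12345,
        "portalUrl": "https://example.org/portal/jane-doe",
        "identifiers": [
            {"id": "0000-0000-0000-0000", "type": {"term": {"en_US": "ORCID"}}},
            {"id": "57000000000", "type": {"term": {"en_US": "Scopus Author ID"}}},
        ],
    }],
})

results = scopus_author_ids(SAMPLE_RESPONSE)
```

Using `.get()` with defaults at every level keeps the loop from failing on items that have no `identifiers` array or identifiers that have no `type`.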

{
  "count": 1,
  "pageInformation": {
    "offset": 0,
    "size": 10
  },
  "items": [
    {
      "pureId": 0000000000000,
      "uuid": "xxxxxxxxxxxxxxxxxxxxx",
      "createdBy": "root",
      "createdDate": "2024-11-18T22:01:07.853Z",
      "modifiedBy": "root",
      "modifiedDa

Hello again,

We are still seeking feedback on our two new HealthShare Unified Care Record certification exam designs. This is your opportunity to tell us what knowledge, skills, and abilities are important for Certified HealthShare Unified Care Record Specialists.

The feedback surveys are open until July 20th, 2025. All participants are eligible to receive 7000 Global Masters points for each survey they complete!

Interested in sharing your opinions? See the original post for more details on how to weigh-in on the exam topics.

Note: The surveys will be unavailable from July 11, 5pm - July 13th, 10

In IRIS, every time a request needs to be processed, a specific IRIS process (IRISDB.EXE) needs to be assigned to handle that request.

If there is no spare IRIS process at that time, a new process has to be created (and later destroyed). On some Windows systems (especially with security/antimalware solutions active), creating new processes can be slow, which can result in delays during peak times (due to the inrush of requests).

Because of that, it's sometimes useful to have a certain number of processes already created and idling, ready to handle requests, especially during peak times (eg:

- I like the Application Error Log functionality a lot. However, it becomes time-consuming to inspect it date by date and directory by directory on a multi-directory server. Ideally, I would use an existing error class to write a custom error report by date, selected namespaces, etc. Does such a system class actually exist? Not that I found. The detail level on the screenshot below is enough.

- Some Application Error Logs go back a couple of years and take a long time to load. Is there any programmatic way to clean them?

Hi Community

I'm working on a complex set of rules that needs to check each OBX and evaluate whether 3 of the fields match certain criteria. I've tried to achieve this using a Foreach loop, which does work, but it is not as clean as I wanted. I was hoping I could assign temp variables inside the foreach loop to make the rules easier to read, for example @testCode and @resultText. This is not supported, as you can only assign inside a when condition. Before I raise this as an idea, I wondered if there was an alternative way to achieve the same thing?


I am seeking work with InterSystems HealthConnect/Cache. My experience is working with Rules and DTLs, plus message search and export of components. With 10 years of dedicated expertise in SOA and ESB platform development, I have successfully designed and implemented enterprise-level integration solutions for large organizations, driving significant improvements in system interoperability and business efficiency. I have actively developed and supported HL7 V2/V3, FHIR, and XML/JSON interfaces. I reside in China. Available for full-time or contract positions, with remote work options preferred.

The

I seem to remember making this work before, but I'm not having any luck digging up examples.

I've defined some custom properties for a business operation that could definitely benefit from having popup descriptions available in the Production Configuration. I have triple-slash comments before each property that do just that in the source. I thought those provided the text for the popup descriptions when clicking on the property name, but apparently not.

Any thoughts?

Is there a way to include a PDF from a stream field in a Zen report?

Something similar to what is done for images.

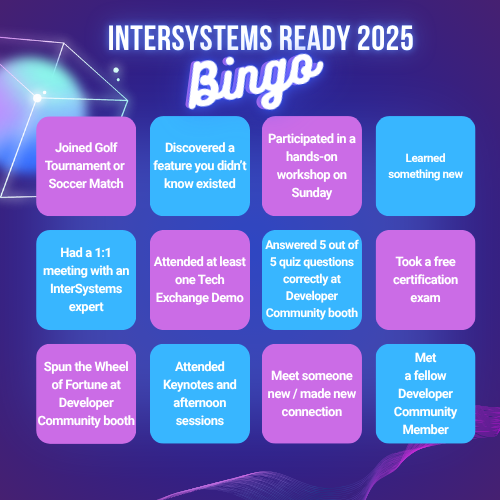

How was your READY experience?

We’ve prepared a bingo card — take a look and see how many boxes you can check off!

Cross off the ones that match your experience or list them in the comments.

And if something memorable happened that’s not on the card — we’d love to hear about it! ✨

Hi Community,

Watch this video to learn about Shift's new Data Platform architecture, combined with the InterSystems IRIS roadmap for data management:

⏯ Shift Data Platform: The IRIS Roadmap Applied @ Global Summit 2024

My last assignment ended in June and I am again in job search.

Seeking positions that need experience in HealthConnect, modifying Rules and DTLs.

My background is in HL7 interface development and support.

Located in the greater Boston area, open to remote or hybrid as well.

Good morning,

We need some help; thanks in advance. 🙂

We have been trying to debug the following error when sending a DICOM document from an "EnsLib.DICOM.Operation.TCP" to a PACS:

| ERROR <EnsDICOM>NoCompatibleTransferSyntaxFound: No negotiated transfer syntax for SOP class '1.2.840.10008.5.1.4.1.1.4' is compatible with document transfer syntax '1.2.840.10008.1.2.4.70' |


We use the following AE titles at the Operation:

LocalAET: ESBSSCC-DCM

RemoteAET: HUNSCESBGWT


We have observed that we do have the SOP class "1.2.840.10008.5.1.4.1.1.4" with the document syntax

I am looking to create a Python virtual environment (venv) so that my imported/installed python packages can be separate on different namespaces in IRIS. I am able to go and create an environment, activate it, and install packages, but I am not sure how to ensure that Embedded Python methods actually point to this virtual environment.

Is the best solution to just load the virtual environment at runtime, in each method? That seems like a bad solution. Has anyone run into this and found a good solution?
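
For reference, the runtime approach mentioned above could be sketched roughly as follows. It uses only the Python standard library. The helper names `venv_site_packages` and `use_venv` are hypothetical, and the sketch does not address whether IRIS offers a per-namespace setting for this.

```python
import site
from pathlib import Path


def venv_site_packages(venv_dir):
    """Return the site-packages directory inside venv_dir, or None if not found."""
    root = Path(venv_dir)
    candidates = [root / "Lib" / "site-packages"]                  # Windows layout
    candidates += sorted(root.glob("lib/python*/site-packages"))   # POSIX layout
    for path in candidates:
        if path.is_dir():
            return path
    return None


def use_venv(venv_dir):
    """Make packages installed in venv_dir importable in the current process."""
    packages = venv_site_packages(venv_dir)
    if packages is None:
        raise FileNotFoundError("no site-packages found under %s" % venv_dir)
    # addsitedir appends the directory to sys.path and processes its .pth files.
    site.addsitedir(str(packages))
    return str(packages)
```

A method would call `use_venv("/path/to/venv")` once before its imports; the path here is a placeholder. Because `site.addsitedir` appends to `sys.path`, a package of the same name that is already importable from an earlier path entry still wins, so this alone does not give strict isolation between namespaces.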

Hey Community,

It's time for the new batch of #KeyQuestions from the previous month.

Hi Community,

Enjoy the new video on InterSystems Developers YouTube from our Tech Video Challenge:

⏯ Genes in DNA Sequences as vector representation using IRIS vector database

Hello Community,

Is there a programmatic method or specific property to differentiate system-defined web applications (/api/atelier, /api/mgmnt, and so on) from user-defined ones in IRIS?

Thanks!