Hello community!

After reading the Jobs and Terminal I/O sections of the InterSystems documentation multiple times, I still do not understand one concept.

Maybe this question is not posed quite correctly, but please take it as is: is it possible to change the current device to be the terminal device?

Let me explain what I want to achieve.

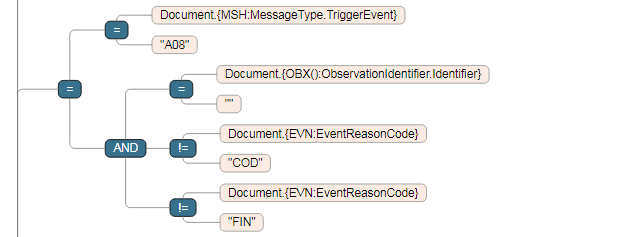

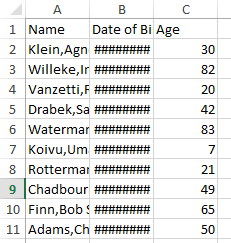
