Hi Developers,

Watch the latest video on InterSystems Developers YouTube:

My login does not have WRC Direct access.

How can I get this access to download the InterSystems IRIS kit?

Hi all,

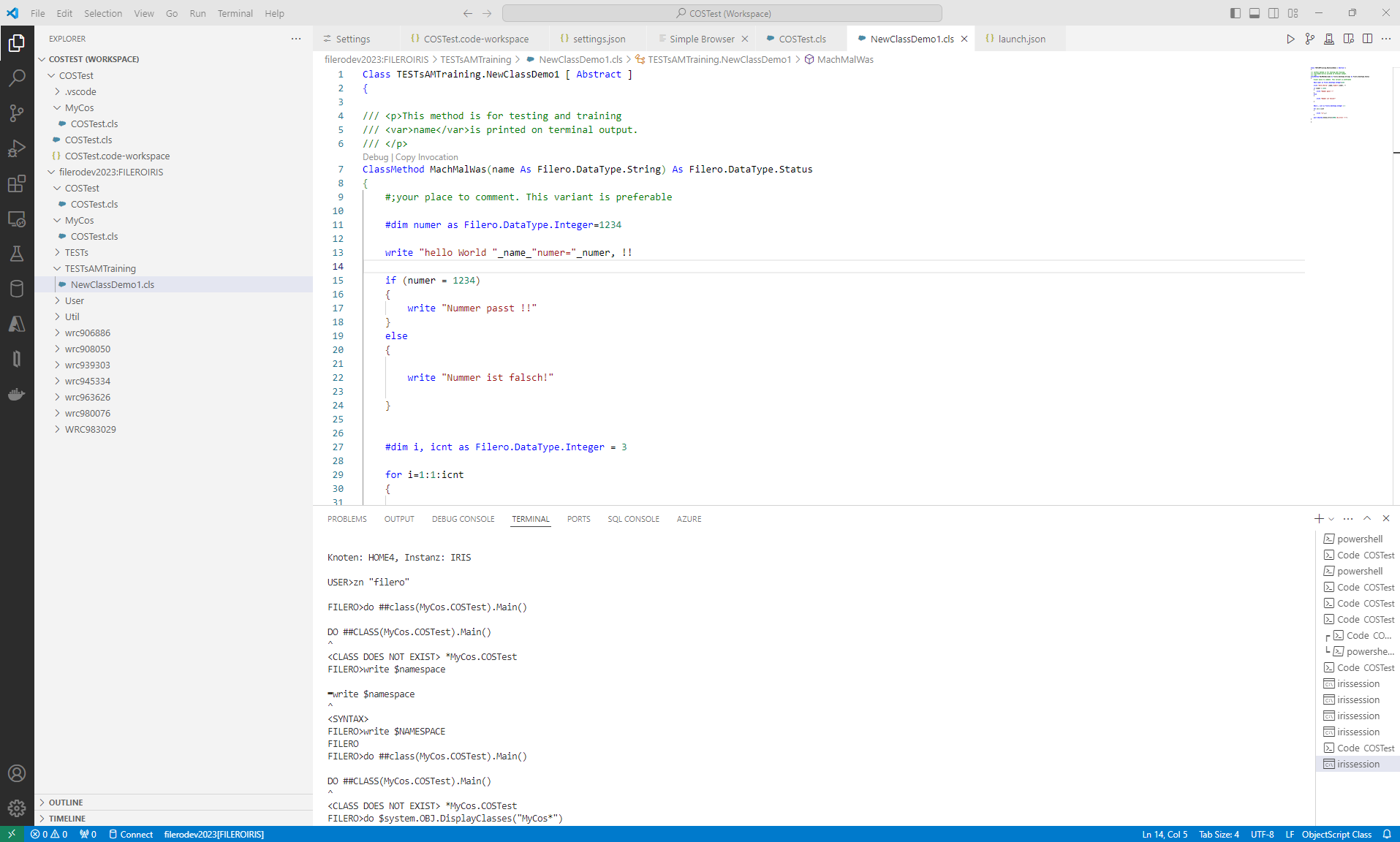

I was wondering if there is a way to modify the results shown by code assist in Visual Studio Code, ideally even per class or package.

For example: the code below is for a custom component of a framework. From all the options listed I'm only interested in my own property "Title" and I don't want to see any %-methods or auto-generated methods like "TitleSet" and "TitleGet".

I have been looking at the %Api.Atelier classes, but it seems that it's only calling a list of deprecated methods when opening this list. So far I haven't been able to find any call where the rest of this

With the advent of Embedded Python, a myriad of use cases are now possible from within IRIS directly using Python libraries for more complex operations. One such operation is the use of natural language processing tools such as textual similarity comparison.
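As a minimal illustration of what "textual similarity comparison" means in code, the sketch below uses Python's standard-library `difflib.SequenceMatcher`. This is a character-level ratio chosen here only as a dependency-free stand-in for the NLP libraries the article discusses; it is not semantic similarity, and the function name `similarity` and the sample sentences are hypothetical. The same function could be called from IRIS through Embedded Python.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a similarity ratio in [0.0, 1.0] between two strings.

    Character-level measure from the standard library, used here as a
    stand-in for embedding-based NLP similarity (an assumption, not the
    article's method).
    """
    # Normalise case and surrounding whitespace so trivial differences
    # do not lower the score.
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


if __name__ == "__main__":
    print(similarity("Patient has a fever", "patient has a fever "))  # 1.0
    print(similarity("Patient has a fever", "Patient reports headache"))
```

A library such as a sentence-embedding model would score paraphrases as similar even when they share few characters; the ratio above only rewards shared character runs.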

Note: For this article, I will be using a Linux system with IRIS installed. Some of the processes for using Embedded Python, such as installing libraries, may differ on Windows, so please refer to the IRIS documentation for the prope

In the VS Code ObjectScript extension, pressing "Ctrl + Slash" in the editor window inserts the comment delimiter "#;". This feature helps me a lot when writing comments in source code. However, there is currently no option to change "#;" to other characters.

Some customers say, "We prefer another comment style like //, so we want // to be inserted instead of #; when we press Ctrl + Slash." That makes sense to me.

I have posted a new request on GitHub: [Request] want to change the comment style from "#;" which is inserted by "Ctrl + Slash".

What do you think? Agree? Disagree? If you support my

Hi! I am planning to move my Arbiter from a Unix server to a container (again on Linux). To do this, I need the ISCAgent tar.gz file to configure the Arbiter for our mirrored servers. I have tried searching for it on the InterSystems help forums but couldn't find it. Could someone point me to the correct website to download it?

Thank you in advance!!

Hi everyone,

I have this global, which stores two pieces of information per node: Reference (e.g. 1329) and Code (e.g. JMMK-G1D6).

```
^DataTest = 3
^DataTest(1) = $lb("","1329","JMMK-G1D6")
^DataTest(2) = $lb("","1516","AMEV-GVPF")
^DataTest(3) = $lb("","2333","4QC6-4HW3")
```

With ObjectScript, I want to test whether Reference 1516 exists in the global.

In the InterSystems portal, I can do it with SQL (SELECT count(*) FROM DataTest WHERE Reference = '1516'), but can I do the same in ObjectScript without SQL, by working with the global directly?

Thanks for your help.
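Without SQL, the usual approach is to walk the first-level subscripts with `$Order` and compare the second element of each list, i.e. `$ListGet(^DataTest(n), 2)`. The logic is sketched below in plain Python rather than ObjectScript: a dict stands in for `^DataTest` and Python lists stand in for the `$lb(...)` values. The helper names, and the reverse-index idea (a second structure keyed by Reference, such as a hypothetical `^DataTestIdx(Reference, n)` global), are illustrative assumptions rather than part of the question.

```python
# Model of the global from the question:
#   ^DataTest(n) = $lb("", Reference, Code)
# Dict keys play the role of the integer subscripts; each value is a Python
# list standing in for the $LIST ($LIST position 2 = Python index 1).
DATA_TEST = {
    1: ["", "1329", "JMMK-G1D6"],
    2: ["", "1516", "AMEV-GVPF"],
    3: ["", "2333", "4QC6-4HW3"],
}


def reference_exists(data: dict, reference: str) -> bool:
    """Full scan, analogous to a $Order loop over ^DataTest(n) that tests
    $ListGet(^DataTest(n), 2) = reference."""
    for subscript in sorted(data):  # $Order visits subscripts in collation order
        if data[subscript][1] == reference:
            return True
    return False


def build_reference_index(data: dict) -> dict:
    """Hypothetical reverse index Reference -> subscript. Maintaining one
    (as an SQL index does) turns each lookup into a single key check
    instead of a scan of every node."""
    return {row[1]: subscript for subscript, row in data.items()}


if __name__ == "__main__":
    print(reference_exists(DATA_TEST, "1516"))  # True
    index = build_reference_index(DATA_TEST)
    print("9999" in index)  # False
```

The scan is fine for small globals; for frequent lookups on a large global, keeping an index node per Reference avoids reading every entry.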

Hi Developers!

Here are the technology bonuses for the InterSystems Vector Search, GenAI, and ML contest 2024 that will give you extra points in the voting:

See the details below.

I recently set up InterSystems IRIS in the Azure cloud environment, so far with no issues. Recently, I was tasked with uploading an IRIS.dat file (FOIA VistA) into the instance. My teammates guided me to System Administration > Configuration > Local Databases. The problem is that I first need to transfer the .dat file to the instance: because the instance runs in the cloud rather than on my local machine, it cannot read files from my local drive. Is there a reliable solution, such as a transfer method or a way of using the Web Gateway endpoint, that anyone can suggest? Thank you for your time.

Hi all,

As part of the InterSystems Solutionathon we would like to distribute a very quick survey on your thoughts on using AI-driven code documentation for ObjectScript.

https://forms.office.com/r/nSGevGpbTy

Please let us know if you have any further questions!

Hello everyone,

I'm currently working on a business operation that uses a retry mechanism with FailureTimeout = -1, so the BO attempts to resend the message after a RetryInterval of n seconds (n is configurable).

What I would like to achieve is a timer that runs in parallel with the sending mechanism so that, if I don't receive a response within m seconds (m is also configurable, and m <= n) of the initial send (RetryCount = 1), an alert or something similar is triggered. The latter should start a second method running concurrently with the first one (whic
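The timer requirement can be separated from the adapter's retry loop. The following language-neutral sketch is plain Python, not the IRIS interoperability API: it models a watchdog that starts at the first send, is not reset by retries, and fires an alert callback exactly once if no response has arrived after m seconds. The class and method names are hypothetical, and how `poll()` would be driven inside a production (for example from a separate business host) is left open.

```python
import time
from typing import Callable, Optional


class ResponseWatchdog:
    """Fire `on_timeout` once if no response arrives within `timeout_s`
    seconds of the first send. The clock is injectable so the logic can be
    exercised without sleeping."""

    def __init__(self, timeout_s: float, on_timeout: Callable[[], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.on_timeout = on_timeout
        self.clock = clock
        self.first_sent_at: Optional[float] = None
        self.responded = False
        self.alerted = False

    def message_sent(self) -> None:
        # Only the first send starts the timer; retries do not reset it.
        if self.first_sent_at is None:
            self.first_sent_at = self.clock()

    def response_received(self) -> None:
        self.responded = True

    def poll(self) -> bool:
        """Call periodically; returns True if the alert fired on this call."""
        if self.first_sent_at is None or self.responded or self.alerted:
            return False
        if self.clock() - self.first_sent_at >= self.timeout_s:
            self.alerted = True
            self.on_timeout()
            return True
        return False


if __name__ == "__main__":
    now = [0.0]  # fake clock value, advanced by hand
    alerts = []
    dog = ResponseWatchdog(5.0, lambda: alerts.append("no response"),
                           clock=lambda: now[0])
    dog.message_sent()   # initial send at t=0
    now[0] = 3.0
    dog.message_sent()   # a retry at t=3 does not restart the timer
    now[0] = 5.0
    print(dog.poll(), alerts)  # True ['no response']
```

Keeping the deadline keyed to the first send (not the latest retry) matches the requirement that m is measured from the initial message.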

When you run a routine in the terminal and an error occurs, the program enters debug mode as shown below if you have not set up an error trap properly.

```
USER>do ^error1
write A
^
a+2^error1 *A
USER 2d0>
```

From this state, enter the Quit command to return to the state before the routine was started.

```
USER 2d0>Quit
```

If a transaction is being processed within the routine where the error occurred, a prompt similar to the one below appears.

```
USER>do ^error1
write A
^
a+3^error1 *A
TL1:USER 2d0>q
TL1:USER>
```

If TL+number is displayed at the beg

We are looking at replacing our VMS system with Linux :-( Yes, a sad day!

Now the question has become whether we should go with Intel or AMD. Personally, I think Intel is the way to go for several reasons.

Has anyone seen or heard of AMD being slower, or is there a definite performance advantage of one over the other?

Thanks for your feedback.

Paul

First, our current environment, which will be copied over to the new platform:

Caché 2015.2 (yes, we are stuck here because of the vendor, and there is no further support). With the upgrade we will be moving to a newer version of Caché and eventually IRIS when the vendor has certi

Hi everyone.

I have a function that may end up being called from a number of transformations at the same time. Within the function, some Embedded SQL first checks whether a local table has an entry, and then adds the entry if it doesn't exist.

To prevent a race condition where the function is called by two transformations and both end up attempting to insert the same value, I'm looking to use the table hint "WITH TABLOCK" on the insert, but this seems to fail the syntax checks in VS Code.

Are table hints supported with Embedded SQL?

If not, is there a way to prevent t
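A common alternative to locking the whole table is to let the database enforce uniqueness and treat a duplicate-key failure as "already exists", which removes the gap between the check and the insert. The sketch below shows that pattern with Python's built-in `sqlite3` purely as a stand-in database; it is not IRIS SQL syntax, and the table and column names are hypothetical. In IRIS the analogous setup would be a unique index on the column plus handling of the duplicate-key SQLCODE, which should be confirmed against the IRIS SQL documentation.

```python
import sqlite3


def ensure_entry(conn: sqlite3.Connection, value: str) -> bool:
    """Insert `value` if it is not already present.

    Returns True if this call inserted the row, False if it already existed.
    Instead of "SELECT, then INSERT" (which races), the UNIQUE constraint
    makes the database reject the second insert atomically.
    """
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO lookup (code) VALUES (?)", (value,))
        return True
    except sqlite3.IntegrityError:
        # Another caller inserted the same value first; treat as success.
        return False


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE lookup (code TEXT NOT NULL UNIQUE)")
    print(ensure_entry(conn, "ABC"))  # True  (inserted)
    print(ensure_entry(conn, "ABC"))  # False (already present)
    print(conn.execute("SELECT COUNT(*) FROM lookup").fetchone()[0])  # 1
```

The design choice is that correctness no longer depends on callers coordinating: whichever insert arrives second simply fails the constraint.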

I'm currently fighting with SoapUI to get the HS WSDLs imported.

My first issue is, the IRISUsername and IRISPassword parameters don't seem to work.

I've tried the following URL:

https://ifwap0661.ad.klinik.xxxyyy.de/ucrdev/csp/healthshare/hsreposito…

Result:

Error loading: org.apache.xmlbeans.XmlException: org.apache.xmlbeans.XmlException: error: The entity name must immediately follow the '&' in the entity reference.

I'm not entirely sure what is happening here.

If I ent

Hi,

I'm currently configuring an Ens.Alert component in Ensemble 2018, and I'd like to set up System Default Settings for it.

Could anyone guide me through the process of setting up these default settings for the Ens.Alert component?

Thank you in advance!

Hi,

I was struggling with a procedure that was meant to receive a string and use it as a filter. I found that, because I want the procedure to do some data transformation and return a dataset, I needed to use ObjectScript as its language.

I created the procedure using the SQL GUI in the portal. Everything works fine when calling the procedure from the SQL GUI, but not through a JDBC connection. Here is the call: "call spPatientOS('2024-04-07T12:35:32Z')"

The bottom line is that the procedure was created with the parameter defined as STRING(MAXLEN=1), which means that my parameter from the JDBC

Hi,

I have this simple JSON structure:

```json
{
  "nTypeTrigger": "ATR",
  "sDate": "2024-04-17 15:29:16",
  "tRefArray": [{"sID":"132"},{"sID":"151"},{"sID":"233"}],
  "tCountries": []
}
```

I can't find an example of iterating over tRefArray.

I've tried to create a secondary iterator, but it doesn't work. Here is my current code:

```objectscript
// extract json content from the request:
set dynRequestJsonPayload = {}.%FromJSON(%request.Content)
set JsonIterator = dynRequestJsonPayload.%GetIterator()
// iterate on json structure:
if dynRequestJsonPayload '= "" {
    while JsonIterator.%GetNext(.key, .value) {
        set NodeT
```
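The key point is that `tRefArray` is itself an array, so it needs its own loop; in ObjectScript that means a second iterator obtained from the nested array, e.g. `dynRequestJsonPayload.tRefArray.%GetIterator()`. The same nesting is shown below in plain Python with the standard `json` module and the payload from the question; the function name `collect_ref_ids` is hypothetical.

```python
import json

PAYLOAD = """{
  "nTypeTrigger": "ATR",
  "sDate": "2024-04-17 15:29:16",
  "tRefArray": [{"sID": "132"}, {"sID": "151"}, {"sID": "233"}],
  "tCountries": []
}"""


def collect_ref_ids(raw: str) -> list:
    """Return the sID of every element in tRefArray."""
    payload = json.loads(raw)
    ids = []
    # Outer loop over top-level keys (like JsonIterator.%GetNext(.key, .value)).
    for key, value in payload.items():
        if key == "tRefArray":
            # Inner loop: the nested array needs its own iteration.
            for element in value:
                ids.append(element.get("sID"))
    return ids


if __name__ == "__main__":
    print(collect_ref_ids(PAYLOAD))  # ['132', '151', '233']
```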

Can you assign the SessionId to a target field in a DTL? There is a %Ensemble("SessionId") variable that looks like it would be available to use.

Hello!

I wonder if anyone has a smart idea for extracting an XML fragment from a text document (incoming as a stream)?

The XML fragment is surrounded by plain text.

Example:

```
text...........
text...........
<?xml version="1.0" encoding="UTF-8"?>
<Start>
...etc
</Start>
text...........
text...........
```

The XML is not represented by any class or object in the namespace.

The XML can look different from time to time.

I'd appreciate it if anyone knows how to use ObjectScript to extract the XML content.

Regards Michael
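One straightforward approach is to locate the XML declaration, read the name of the root element that follows it, and cut the text at the matching closing tag; the slice can then be handed to an XML parser. The sketch below does this in Python with the standard `re` and `xml.etree.ElementTree` modules rather than ObjectScript, and assumes a single fragment per document whose root element is closed normally (no self-closing root, no second fragment). The function name and sample content are hypothetical. In ObjectScript, the same find-and-slice steps could be done with `$Find` and `$Extract` on the stream contents.

```python
import re
import xml.etree.ElementTree as ET

# Matches the XML declaration and captures the root element name after it.
_DECL_AND_ROOT = re.compile(r"<\?xml[^>]*\?>\s*<([A-Za-z_][\w.\-]*)")


def extract_xml_fragment(text: str) -> str:
    """Return the embedded XML (declaration through closing root tag),
    or "" if none is found."""
    match = _DECL_AND_ROOT.search(text)
    if not match:
        return ""
    closing = f"</{match.group(1)}>"
    end = text.rfind(closing)  # last closing tag, so nested same-name tags are kept
    if end < match.start():
        return ""
    return text[match.start(): end + len(closing)]


if __name__ == "__main__":
    sample = (
        "text...........\ntext...........\n"
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        "<Start>\n  <Item>1</Item>\n</Start>\n"
        "text...........\n"
    )
    fragment = extract_xml_fragment(sample)
    # Encode to bytes so the parser honours the encoding declaration.
    print(ET.fromstring(fragment.encode("utf-8")).tag)  # Start
```

Because the root name is read from the document itself, the approach does not need a class describing the XML, which fits the case where the structure varies.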

@Vicky Li

@Thanongsak Chamung

@Warlin Garcia

@Eduard Lebedyuk

@Enrico Parisi

@Luis Angel Pérez Ramos

We are getting this error. All the drivers, the URL and the credentials have been tested and exist. It works fine sometimes, but we are getting this error more and more often. Please advise on the root cause and a solution. The business service using the out-of-the-box Java service (class name: EnsLib.JavaGateway.Service, adapter class name: EnsLib.JavaGateway.ServiceAdapter) is throwing this error.

The configured "SOMEDSN" has passed the JDBC Connection test successfully with the external database (ORACLE)

Type: Error

Tex

Hi

Some of our integrations use a system DSN to interact with our Data Warehouse (built on Microsoft SQL Server). We recently migrated from SQL Server 2016 to 2022, and on the day of go-live we changed DNS CNAME records to redirect everything to the new server. This worked well for users and reports, but for some reason HealthShare clung to the old server name even after several days.

We found that when an ODBC connection references a DNS CNAME, the production held on to the old server and kept querying it; we had to restart the production to resolve it.

When

Hey everyone,

I'm currently running into a very weird issue when trying to connect from the 64-bit version of SQL Server Management Studio (SSMS) to a HealthShare instance. I have created a System DSN using the drivers (image below) that were downloaded with the Client version of the install, and I'm able to connect successfully using my credentials.

After configuring my System DSN (image below), I go into SSMS and add a Linked Server that references it. I also set the provider to Microsoft OLE DB Provider for ODBC Drivers. I go into Secu

Hello everybody,

I've been experimenting with Embedded Python and have been following the steps outlined in this documentation: https://docs.intersystems.com/irislatest/csp/docbook/DocBook.UI.Page.cl…

I'm trying to convert a Python dictionary into an ObjectScript array, but the 'arrayref' function does not work as shown in the linked example.

This is a snapshot of my IRIS terminal:

```
USER>do ##class(%SYS.Python).Shell()

Python 3.10.12 (main, Nov 20 2023, 15:14:05) [GCC 11.4.0] on linux
Type quit() or C
```

Hello all, I am new to using IRIS with VS Code. Can anyone explain why this error appears? Any suggestions are welcome. Thank you in advance.

Hello developers!

We invite you to a new Spanish webinar "SMART on FHIR, extending the capabilities of HealthShare"

📅 Date & Time: Thursday, April 25th, at 4:00 PM (CEST).

🗣 Speaker: @Rubén Larenas , Sales Engineer at InterSystems

Hi Guys,

I'm new to IRIS and I'm converting from Ensemble 2018 to IRIS, but I'm not sure how to convert my CACHE.DAT file to IRIS.dat. I copied my CACHE.DAT to a new folder, went to the IRIS Management Portal, created a new database, and specified the directory containing my CACHE.DAT. I thought IRIS would automatically convert CACHE.DAT to IRIS.dat, but instead it created a new, empty IRIS.dat. I guess my assumption was wrong?

Also, if I leave the New Volume Threshold size at zero, does that mean I will always have only one IRIS.dat instead of having multipl

Hey Community,

Play the new video on InterSystems Developers YouTube:

⏯ Unleashing the Power of Machine Learning with InterSystems @ Global Summit 2023

Spoilers: Daily Integrity Checks are not only a best practice, but they also provide a snapshot of global sizes and density.

Update 2024-04-16: As of IRIS 2024.1, many of the utilities below offer a mode that estimates size with an average error below 2%, with orders-of-magnitude improvements in performance and IO requirements. I continue to urge regular Integrity Checks; however, there are situations where more urgent answers are needed.

EstimatedSize^%GSIZE - Runs %GSIZE in estimation mode. ##class(%Library.GlobalEdit).GetGlobalSize(directory, globalname, .allocated, .used, 2) - Estima