Hi Community,

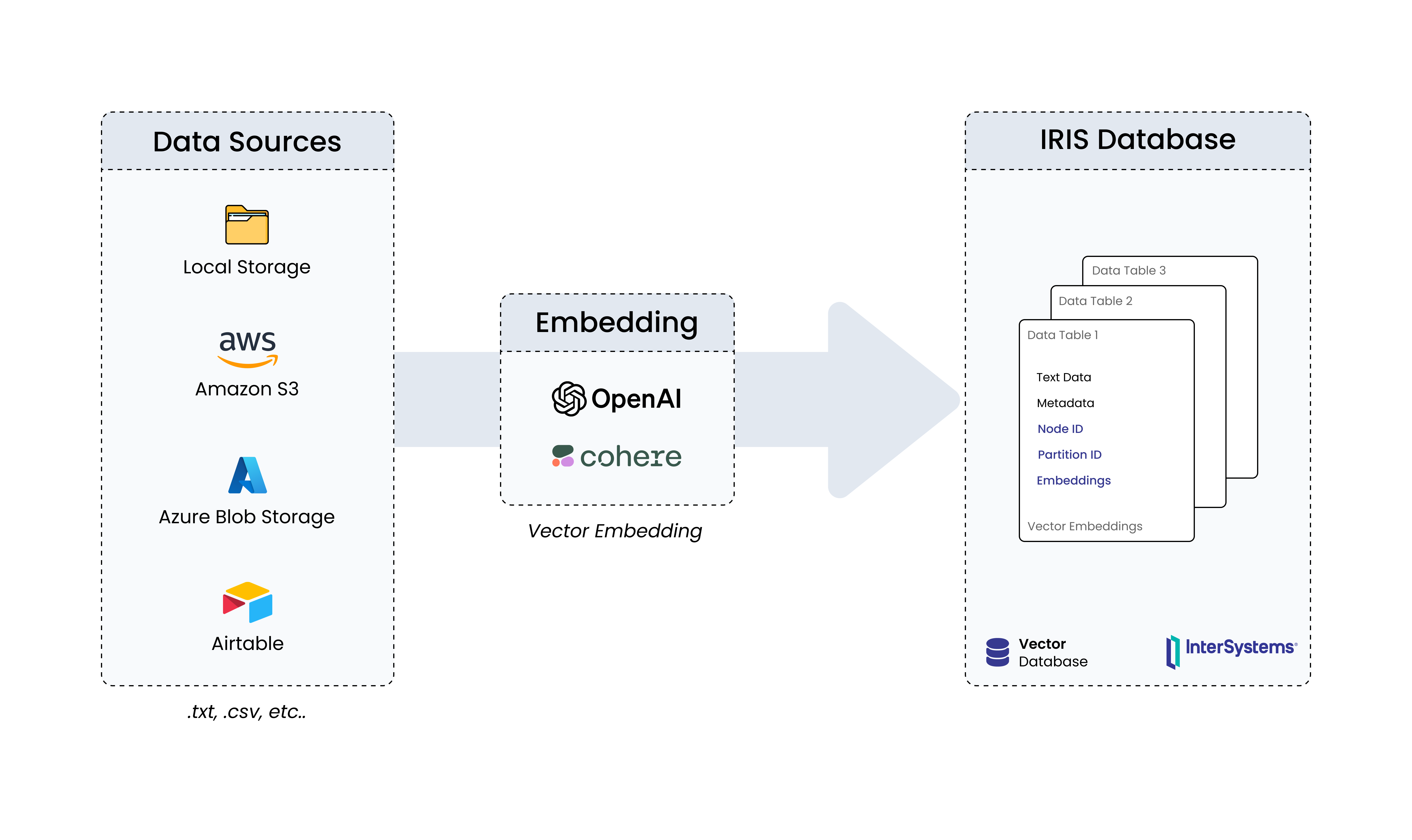

In this article, I will introduce my application iris-image-vector-search.

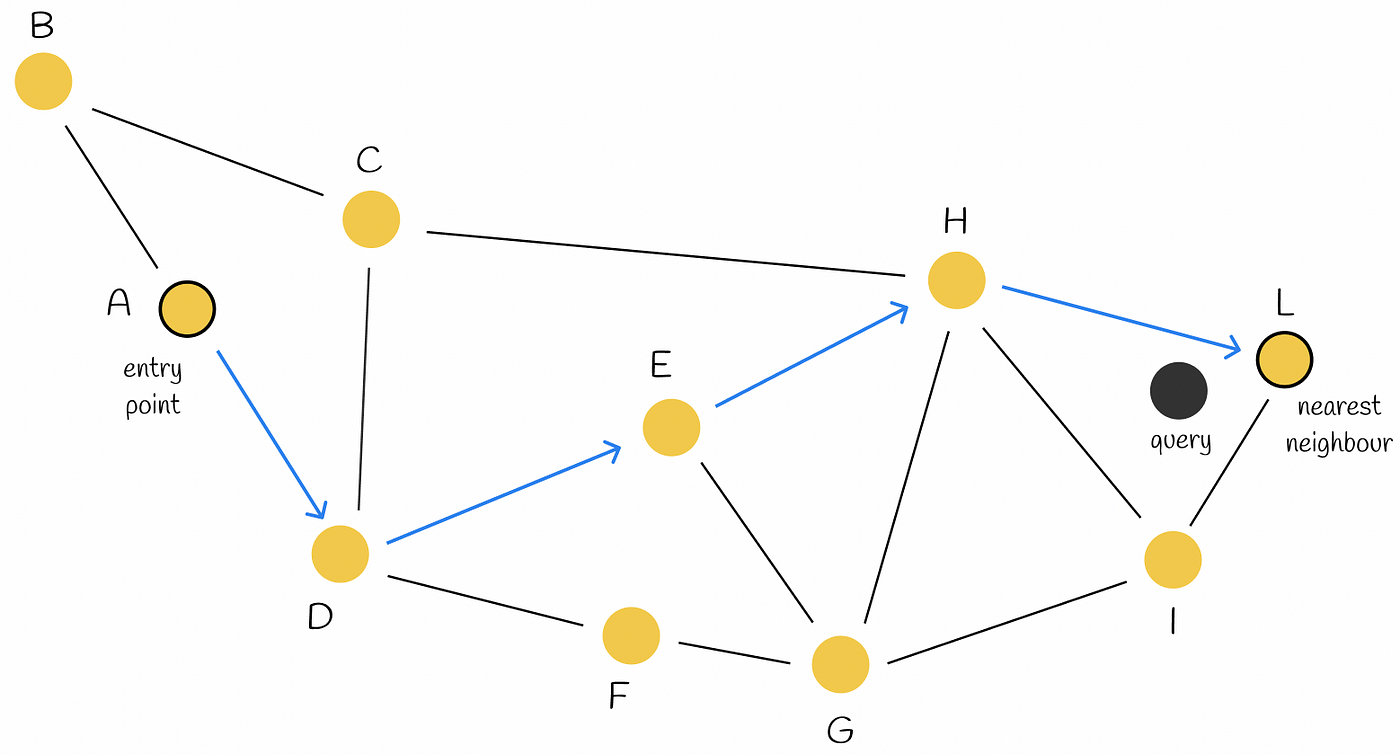

The image vector retrieval demo uses IRIS Embedded Python and the OpenAI CLIP model to convert images into 512-dimensional vectors. Using the new Vector Search feature, it calls VECTOR_COSINE to compute the similarity between vectors and displays the images with the highest similarity.
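To illustrate the similarity step, here is a minimal pure-Python sketch of cosine similarity and a top-k ranking over 512-dimensional vectors. It is not the application's code: in the demo the comparison is done by VECTOR_COSINE inside IRIS, and the vectors come from CLIP. The names `cosine_similarity`, `top_k_similar`, and the `image_N.png` catalog are hypothetical, and the vectors are random stand-ins for real image embeddings.

```python
import math
import random

DIM = 512  # vector size stated in the article for the CLIP image vectors


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def top_k_similar(query, catalog, k=3):
    """Rank catalog entries by similarity to the query, highest first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Random stand-ins for image embeddings (assumption: real ones come from CLIP).
rng = random.Random(0)
catalog = {
    f"image_{i}.png": [rng.gauss(0, 1) for _ in range(DIM)] for i in range(5)
}

# A query that is a slightly perturbed copy of one stored vector,
# mimicking a search with a near-duplicate image.
query = [v + rng.gauss(0, 0.05) for v in catalog["image_2.png"]]

results = top_k_similar(query, catalog, k=3)
for name, score in results:
    print(f"{name}: {score:.4f}")
```

The ranking places the near-duplicate first because cosine similarity depends only on the direction of the vectors, not their length, which is why it is a common choice for comparing embeddings.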
