Hi Interoperability experts!

Recently noticed an interesting conceptual discussion in our Interoperability Discord channel to which I want to give more exposure.

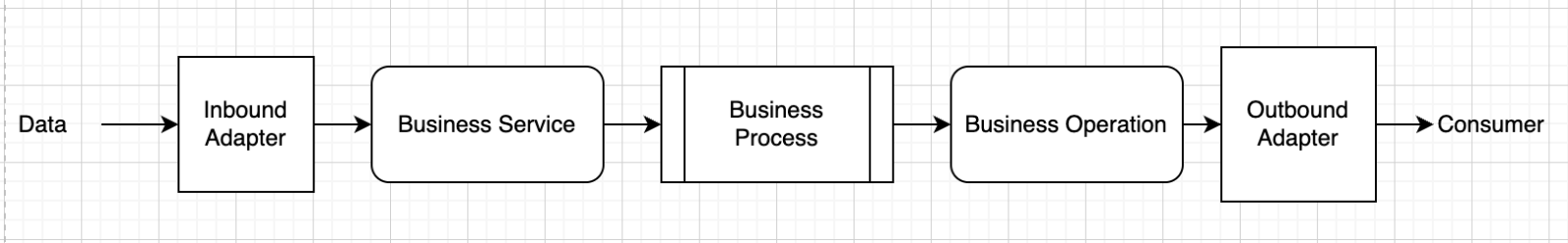

All we know that typical InterSystems Interoperability production consists of the following chain:

Inbound adapter->Business Service->Business Process->Business Operation->Outbound adapter.

And Business Process (BO) here is always considered as a passive "listener" either on port/folder/rest API for an incoming data.

.png)