Can you elaborate? How else can a session running on one IRIS instance invoke a class method on some other IRIS instance?

- Log in to post comments

Can you elaborate? How else can a session running on one IRIS instance invoke a class method on some other IRIS instance?

There are a number of limitations on the size of an SQL statement I think, maximum string length is one of those limitations. It is based on the normalized "signature", including the statement text, as a single string, even when you pass the source statement as an array. I haven't worked in this area for several years so I can't speak to the details.

Timothy Leavitt's excellent response notwithstanding, this is supported. I do fully embrace the option presented by Timothy Leavitt. The structures I demonstrate here actually produce a model very close to his and the index, since it includes both KEYS and ELEMENTS is projected to the child table projected from the addresses array. Of course, reversing KEYS and ELEMENTS in the index key specification would make the index more useful for searching on city name.

This definition:

Property addresses As array Of Sample.Address;

Index xA On (addresses(KEYS), addresses(ELEMENTS).City)Not only works but the filing code also recognizes the ability to fold both properties in the index into the same iterator:

If ('pIndexHandle)||($Ascii($Get(pIndexHandle("Sample.Person")),5)=1) {

set bsv26N1 = $Order(^Sample.PersonD(id,"addresses",""))

While bsv26N1 '= "" {

Set bsv0N8=$zu(28,##class(Sample.Address).%Open($select(^Sample.PersonD(id,"addresses",bsv26N1)="":"",1:$listbuild(^Sample.PersonD(id,"addresses",bsv26N1)_""))).City,7,32768)

Set ^Sample.PersonI("xA",bsv26N1,bsv0N8,id)=$listget(bsv0N2,1)

set bsv26N1 = $Order(^Sample.PersonD(id,"addresses",bsv26N1))

}

}And a quick test shows this structure is produced:

panther.local:XDBC:USER>d ##class(Sample.Person).Populate(10)

panther.local:XDBC:USER>zw ^Sample.PersonI("xA")

^Sample.PersonI("xA","A886"," GANSEVOORT",3)=""

^Sample.PersonI("xA","B350"," MIAMI",6)=""

^Sample.PersonI("xA","B748"," NEWTON",3)=""

^Sample.PersonI("xA","C135"," UKIAH",9)=""

^Sample.PersonI("xA","C261"," ALBANY",1)=""

^Sample.PersonI("xA","C883"," DENVER",2)=""

^Sample.PersonI("xA","D162"," ST LOUIS",4)=""

And this has been in the product since maybe 2010. I couldn't find the original release note for this but I did find a change that fixed a problem when consolidating the iterators and that fix is present in 2010.2.

There is much to be discovered regarding Object Persistence. The ability to specify an expression in place of a literal global name in any of the various LOCATION keywords is just one. For example, you can add a public variable to a LOCATION keyword and its value at object filing time will be used to form the global location where the filer will update the data. There is risk involved and these "features" are most likely not documented. I do not recommend using these in production systems unless you fully understand the ramifications of doing so.

That said, there are a number of features that may or may not be documented but are certainly not private. In the context of this message, two come to mind. First is the Object Journal. Override the parameter OBJJOURNAL in a persistent class and all other classes referenced by this class and filing events are journaled. The Object Journal records each filing event for classes with the OBJJOURNAL parameter set and another class, %ObjectJournalTransaction, can be used to view the versions of those objects that were filed. All this would be wonderful but for a bug that I just discovered while coming up with an example for this post. I did a bit of research and it seems this bug has been present for a very long time and never reported. That indicates nobody is aware that this feature exists. I fixed the bug temporarily for the example.

SAMPLES>set person = ##class(Sample.Person).%OpenId(10)

SAMPLES>write oldperson.Name

Uhles,Ralph W.

SAMPLES>set person.Home.State = "NY"

SAMPLES>w person.%Save()

1

SAMPLES>set person.Office.State = "FL"

SAMPLES>set person.Name = "Book, John J"

SAMPLES>write person.%Save()

1

SAMPLES>write person.Name

Book, John J

SAMPLES>set journal = ##class(%ObjectJournalRecord).IDKeyOpen(3,4)

SAMPLES>set oldperson = journal.OpenObjectVersion(.s)

SAMPLES>write oldperson.Name

Uhles,Ralph W.The second item of interest is something that has been around for a while as well and is definitely a fully documented and supported feature. This feature, Triggers, was previously an SQL only feature but it is now (and has been for several versions) available for Object filing as well. Using a save trigger for Objects and SQL allows access to the old and new values as well as a way to detect which values have been modified. I am happy to post an example if anyone is interested.

While the ability to specify an expression as a LOCATION keyword value and the use of Object Journal are not well known or mainstream features, Triggers are very much mainstream and can be quite useful.

The difference between runs can be caused simply by normal variations in system load. The difference between Concurrency = 0 and Concurrency = 1 is only present when the object is stored in multiple global nodes. There are five possible values that are described in the %Library.Persistent class documentation (viewable through the SMP). Concurrency = 1 is referred to as "atomic read". For single node objects there is no extra work required for atomic read.

The name of the <index>Open method is based on the name of the index. I suspect that in one case the name of the IDKEY index is "IDKEY" and in another it is "idkey". No <index>Open method is generated if the index is not unique, primary or id.

What I don't know is if you need SQL mapped storage because default storage doesn't work for your case. Inheritance of storage is not the same as for other class members. I wrote storage inheritance and I am intrigued by the case where there are common serializations shared by multiple classes. Steve Canzano's idea is really close to what I think is a good solution but introducing %SerialObject brings with it some complications. Interestingly enough, the idea of SQL Map inheritance was probably brought up first by Steve several years ago.

What is clear is that no storage is inherited from anything other than the primary super class. I would like to understand your need to see if that restriction is preventing you from doing as you wish. Relaxing that restriction is not a simple task so I would also like to know if there is an existing pattern that can address your need.

-Dan

I admit that when I first read this post I wasn't interested in commenting. I don't normally get involved in SQL mapping questions. @Brendan Bannon is the expert on that topic. As I read through this more, I became interested in what you didn't ask and I need to make sure that I'm on the right track.

Are you most interested in sharing the definition of a data node or do you have specific requirements that make you want to use SQL-mapped storage?

--Dan

That is a problem. SQL requires metadata to be provided at statement prepare time. Any SQL-Invokable-Routine (function - including Table-Valued Function - and procedure as invoked by CALL) must provide that metadata and that metadata is generated by the compiler. There is no dynamic prepare-time hook unfortunately.

There is syntax in standard SQL that allows you to provide "value to column binding" within the statement (late-schema binding) but we do not support that.

You didn't specify a version so I'll use IRIS 2020.1. This should also work in 2019 versions. My example uses a Table-Valued Function. Any query can potentially be used as a table valued function. %SQL.CustomQuery simply generates all the infrastructure for you. Custom query works quite simply and is very similar to an embedded SQL cursor - you implement Open, Fetch and Close yourself (%OpenCursor, %FetchCursor, %CloseCursor). You define properties that correspond to columns in your row. You define private properties to hold the cursor state. Open typically binds the source data to the instance of your custom query and close releases any resources. Fetch simply advances the cursor to the next row, populating the properties that represent columns in your table. Look at the class doc for %SQL.CustomQuery for more details.

select * from TVF_TEST('[["one",2,"three"],["first",0,"third"]]')

col1 |

col2 |

col3 |

|---|---|---|

one |

2 |

three |

first |

0 |

third |

The class is simple:

Class User.TVF Extends %SQL.CustomQuery [ Language = objectscript ]

{

Parameter SQLNAME As String = "TVF_TEST";

Property data As %Library.DynamicArray [ Private ];

Property iterator As %Iterator.Array [ Private ];

Property col1 As %String;

Property col2 As %Integer;

Property col3 As %String;

Method %OpenCursor(data As %Library.DynamicArray) [ Private ]

{

if $isobject(data) {

set ..data = data

} else {

set ..data = [].%FromJSON(data)

}

set ..iterator = ..data.%GetIterator()

}

Method %FetchCursor() As %Library.Integer

{

if ..iterator.%GetNext(.key,.value) {

set ..col1 = value.%Get(0)

set ..col2 = value.%Get(1)

set ..col3 = value.%Get(2)

return 1

} else {

set ..col1 = ""

set ..col2 = ""

set ..col3 = ""

}

return 0

}

}I believe the correct way to report an error from an SQL-Invokable Routine (procedure or function) is to throw an exception as Eduard demonstrated. Attempting to set local variables or retrieve them using an SQL function/procedure might produce invalid results.

As for reporting a nested error - I don't know.

A side note - it is not necessary to extend %RegisteredObject when a class contains only class methods. Not extending %RegisteredObject will result in smaller runtime footprints.

Perhaps this link will help - https://cedocs.intersystems.com/latest/csp/docbook/DocBook.UI.Page.cls?KEY=RSQL_from.

Look specifically for the paragraph on table-valued functions.

What isn't especially clear in this document is how to implement a custom query. The simplest and most portable way to implement a custom query is to extend %SQL.CustomQuery. Another way is to define a class query whose type is %Query, define a ROWSPEC, and implement the various functions. It is much simpler to extend %SQL.CustomQuery. There should be good documentation in that class.

There was a bug in 2017.1 that caused some problems with the seamless projection of a %SQL.CustomQuery subclass as an SQL function. That bug has since been fixed but I do not know if it is fixed in 2017.2.2.

If you need more information, please let me know.

-Dan

Hi,

Yes, SIR :) SQL Invokable routine. A procedure is invoked by CALL and a function is invoked using the function syntax. The primary difference between the two is in which statements you use to invoke the SIR. CALL invokes procedures, other DML statements can be used to invoke functions. The standard states that for every SQL Function there is an implicit procedure. That means that every SQL Function can also be invoked by CALL. The reverse is not necessarily true. IRIS SQL (and Caché SQL) does not enforce that restriction as even a procedure can be invoked using function syntax, as long as it returns a value. The issue with us doing that is that we do not restrict output-directed arguments and we do not restrict a Result Set Sequence (RSS) - strict SQL does not allow either in an SQL Function. Just be careful - invoking such a procedure (with output directed parameters and/or returnresultsets) as a function will ignore all but the return value.

That said, where the SQL Function is located in a DML statement matters. In order for an SQL Function to be referenced in the FROM clause of a SELECT statement it must implement the Table-Valued Function (TVF) interface. Queries do that (I don't recall in which version we added that capability) and %SQL.CustomQuery does. Refer to the class documentation for %SQL.CustomQuery for more information. If you still have questions then let me know!

Defining a class method as a stored procedure will not work as it does not implement the TVF interface.

-Dan

.

In reviewing the storage definition you present above, I realize that the list of properties has little to do with the SQL Map definitions it includes. So I will continue with my example, using the properties your example defines (they are present in the storage definition only for the purpose of computing statistics and other storage specific metadata).

Without altering the class definition, other than to add a couple of keywords to make life a bit simpler in the class header and the conversion of the storage to use SQL Mapped Storage (%CacheSQLStorage in your version), and adding %Populate - our test data generator - I came up with this example data storage:

USER>d ##class(Patient).Populate(10)

USER>zw ^User.PatientD

^User.PatientD=10

^User.PatientD(1)=":J5201:Z5211:58985:Isaacs,Michael A.:501759566:H2536:A8788:377-96-6394:J7857:G3137:R4692:42233"

^User.PatientD(2)=":S4498:Z4308:62077:Cooke,Mario C.:832248338:Z9867:V1891:859-50-1555:I9221:H3938:W7632:25909"

^User.PatientD(3)=":J8016:S3895:53889:Quilty,Nellie J.:150706592:J3845:B6691:998-18-8294:B482:D5191:R7961:59453"

^User.PatientD(4)=":H8837:T289:43380:Quince,Samantha U.:115923507:F6623:S6188:407-88-9788:R8672:Y1441:A9501:60822"

^User.PatientD(5)=":N1854:W4497:55465:North,Susan A.:784860058:Z8257:E2831:187-93-1523:T3167:U4316:A9955:34073"

^User.PatientD(6)=":A4324:Z7427:61318:Lopez,Will F.:133068033:K7869:R5254:302-73-2490:B2970:F1889:P8421:26050"

^User.PatientD(7)=":N4346:Y671:65137:Moon,Milhouse Z.:459189579:E6160:R3362:507-37-8752:L8401:R7909:F4245:60716"

^User.PatientD(8)=":N4328:V1682:47890:Zweifelhofer,Terry V.:360767849:B8856:E145:466-23-4632:K4269:X2839:S1937:49318"

^User.PatientD(9)=":M7370:B6086:49261:Rotterman,Lawrence S.:353537548:S6329:B9164:874-34-2035:D4140:U7504:N1456:66241"

^User.PatientD(10)=":W995:B5004:50613:Ironhorse,Barb I.:809117324:S6518:V1966:873-92-8543:Z9470:H6976:G2259:40210"

USER>zw ^User.PatientI

^User.PatientI("A"," A4324",6)=""

^User.PatientI("A"," H8837",4)=""

^User.PatientI("A"," J5201",1)=""

^User.PatientI("A"," J8016",3)=""

^User.PatientI("A"," M7370",9)=""

^User.PatientI("A"," N1854",5)=""

^User.PatientI("A"," N4328",8)=""

^User.PatientI("A"," N4346",7)=""

^User.PatientI("A"," S4498",2)=""

^User.PatientI("A"," W995",10)=""

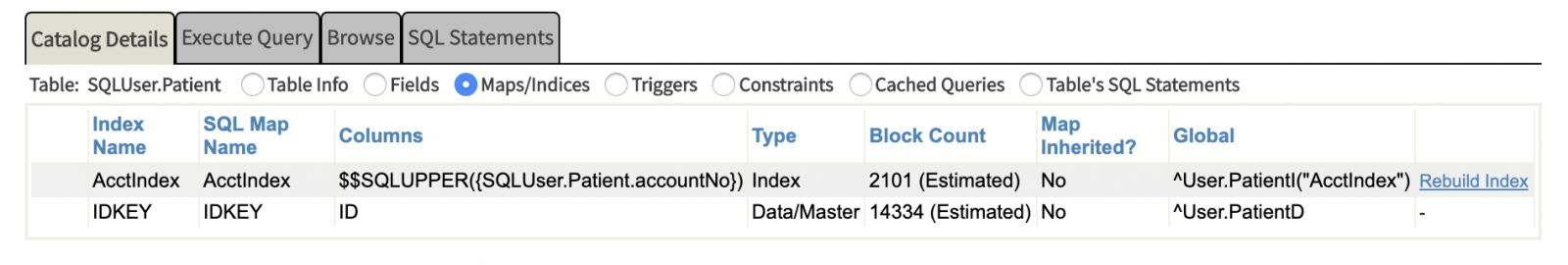

And this is the full definition of the class - with the index map defined as a new SQL Map Definition in the storage. I highlighted a few things to show the connections between the logical class definition and the corresponding items in the storage definition.

Class User.Patient Extends (%Persistent, %Populate) [ SqlRowIdName = Patient, StorageStrategy = SQLStorage ] { Index AcctIndex On accountNo; Property accountNo As %String; Property citySt As %String; Property dob As %Date; Property name As %String; Property patientNo As %Integer; Property rel2Guar As %String; Property sex As %String; Property ssn As %String; Property street1 As %String; Property street2 As %String; Property telephone As %String; Property zip As %String; Storage SQLStorage { <SqlIdExpression>$i(^User.PatientD)</SqlIdExpression> <SQLMap name="DataMasterMap"> <Data name="accountNo"> <Delimiter>":"</Delimiter> <Piece>2</Piece> </Data> <Data name="citySt"> <Delimiter>":"</Delimiter> <Piece>3</Piece> </Data> <Data name="dob"> <Delimiter>":"</Delimiter> <Piece>4</Piece> </Data> <Data name="name"> <Delimiter>":"</Delimiter> <Piece>5</Piece> </Data> <Data name="patientNo"> <Delimiter>":"</Delimiter> <Piece>6</Piece> </Data> <Data name="rel2Guar"> <Delimiter>":"</Delimiter> <Piece>7</Piece> </Data> <Data name="sex"> <Delimiter>":"</Delimiter> <Piece>8</Piece> </Data> <Data name="ssn"> <Delimiter>":"</Delimiter> <Piece>9</Piece> </Data> <Data name="street1"> <Delimiter>":"</Delimiter> <Piece>10</Piece> </Data> <Data name="street2"> <Delimiter>":"</Delimiter> <Piece>11</Piece> </Data> <Data name="telephone"> <Delimiter>":"</Delimiter> <Piece>12</Piece> </Data> <Data name="zip"> <Delimiter>":"</Delimiter> <Piece>13</Piece> </Data> <Global>^User.PatientD</Global> <Structure>list</Structure> <Subscript name="1"> <Expression>{Patient}</Expression> </Subscript> <Type>data</Type> </SQLMap> <SQLMap name="IndexNName"> <BlockCount>-4</BlockCount> <Global>^User.PatientI</Global> <Structure>list</Structure> <Subscript name="1"> <Expression>"A"</Expression> </Subscript> <Subscript name="2"> <Expression>$$SQLUPPER({accountNo})</Expression> </Subscript> <Subscript name="3"> <Expression>{Patient}</Expression> </Subscript> <Type>index</Type> </SQLMap> <StreamLocation>^User.PatientS</StreamLocation> <Type>%Storage.SQL</Type> } }

and this is how it appears in the SQL Manager display of the indexes:

Fair enough. Let's make this more fun! First of all, we need to understand the IDKEY index. InterSystems is somewhat unique in the market with this one. Most SQL vendors support indexes and most support "clustering" data with indexes to further improve the performance of certain queries. Think of our IDKEY index as the "Master Data Index" (sometimes referred to as the "Master Map" or "Data Map"). The "Master Data Index" (MDI) is simply the index that has every column "clustered" with it - all data values are present in the IDKEY index structure. Every table (persistent class) has an IDKEY index and every column (property in Object terms) is stored with it.

Any other index - not the MDI - is simply there to improve query performance. Each non-MDI index has an index key and perhaps some data clustered with it. InterSystems is not different from most other SQL implementations. You can create a new table and add indexes to it using standard DDL statements. We, like most vendors, have some non-standard syntax to support features unique to our product but it is mostly familiar syntax. You can also define a class directly using one of our IDE's or your favorite editor. And all of this will just work - no special effort required and your experience will be similar to that of using any other SQL.

Your example is different. We support low-level storage mapping to legacy data structures. There are two additional storage classes that support this, one of which is used by your example - %CacheSQLStorage. This storage class was introduced specifically to provide a bridge from legacy applications to Caché/InterSystems IRIS. Mapping legacy data structures can be a complicated task and it can be confusing. And this is where you are - you have an application that is using our support for bridging existing data structures to our DBMS.

And none of that helps you with your problem! So let's try this. Let's define your class without using the legacy data mapping. I'll choose some simply data types just to illustrate.

Class User.Patient Extends %Persistent

{

Index AcctIndex On accountNo;

Property accountNo As %String;

Property citySt As %String;

Property dob As %Date;

Property name As %String;

Property patientNo As %Integer;

Property rel2Guar As %String;

Property sex As %String;

Property ssn As %String;

Property street1 As %String;

Property street2 As %String;

Property telephone As %String;

Property zip As %String;

}

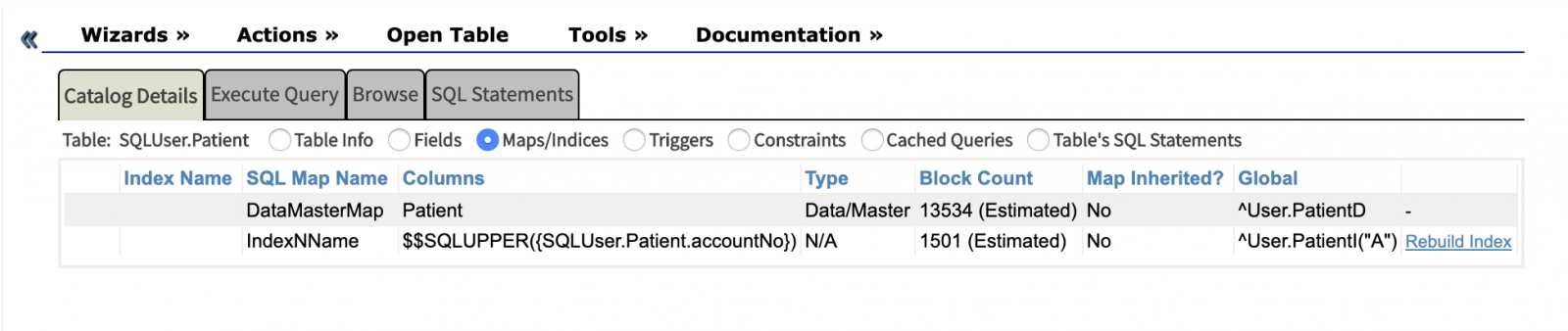

That's it - no special work involved, just a quick and easy class definition with an index on accountNo defined. This is what it looks like in the SQL Manager:

This is an example without the legacy global mapping. %BuildIndices is automatically generated and there is nothing else you would need to do to define, populate and maintain this index - other than to build it initially if it was added to a previously populated extent.

In my next comment (this one is getting long), I'll convert the default storage structure into a mapped storage structure using %CacheSQLStorage.

Hi Keith,

%BuildIndices/%PurgeIndices are generated for any class that primarily extends %Library.Persistent. When such a class defines one or more indexes and that class uses SQL Storage then the class must also define an index map in the storage definition. Without that the index simply won't be maintained - it doesn't exist as far as SQL is concerned. Your display in the original posting is a view of the SQL table definition. The SQL table definition is a projection of the class definition to SQL.

Your first example shows an index that is defined as the IDKEY index - that is the index definition that corresponds to the SQL Master Map (data map). I'm not surprised that it doesn't show up in the indexes display of the SQL Manager.

If WRC isn't an option then a good place to start is to work through just one of your classes. If you can choose one simple example perhaps we can suggest corrections for you.

-Dan

System assigned id's are projected to SQL as an integer as previously mentioned. The name of the column projected to SQL from a system-assigned id is 'ID' by default but it isn't guaranteed to always be 'ID'.

As for controlling how system-assigned ID's are defined - both name and type - I suggest using IDENTITY. I think your code will be happier. You can even name it 'ID' if you wish.

Class User.Person Extends (%Persistent, %Populate)

{

Property ID As %BigInt [ Identity ];

Property Name As %String;

}

And a sample run:

USER>set ^User.PersonD = 5983658923640

USER>d ##class(Person).Populate(50)

USER>do $system.SQL.Execute("select id,name from person where id = 5983658923646").%Display()

ID Name

5983658923646 Young,Imelda B.

1 Rows(s) AffectedThe reason it works when you specify a different dialect is because the type mappings are different for different dialects.

Amir,

If you want the results of your query to be usable by the outside world then you can easily do this by generating a custom query (subclass %SQL.CustomQuery), compile and execute it. I probably have some examples of doing this somewhere. %SQL.CustomQuery is a bit more convenient than defining a query in a class - it basically accomplishes the same thing. %SQL.CustomQuery will generate all of the relevant metadata from your property definitions and you do not have to be concerned with ROWSPEC, etc. It is fully compatible with SQL and can even be used as a TVF in other queries.

-Dan

Be careful. %Extends returns 1 | 0 but it is checking the entire SUPER value of your class, not just the primary superclass. Take a simple class that extends both MyLibrary.ParentClass and %Library.Populate - %Extends will return 1 (true) for both MyLibrary.ParentClass and %Library.Populate.

There is another method - %IsA(superclass) that returns 1 (true) only if the superclass is the primary super class (forms a %IsA() relationship with your current class).

Take a look at the %SQL.Util.Procedures class - specifically the CSVTOCLASS method. The interface isn't very nice and it isn't intuitive but it will do what you want - and more. You can invoke this directly or you can execute it as an SQL procedure. I worked up a very quick example using the data you included - I just created a simple file. In the file you can replace the column headers with a ROWTYPE (SQL standard defines this thing) or you can pass the ROWTYPE as an argument (that is what I did here).

USER>do ##class(%SQL.Util.Procedures).CSVTOCLASS(,"Name VARCHAR(50),Acc INT,Div INT","/Users/danp/projects/csvdemo/data.csv",,,1,"User.Customers")

USER>zw ^User.CustomersD

^User.CustomersD=3

^User.CustomersD(1)=$lb("","Eric",1234,567)

^User.CustomersD(2)=$lb("","John",1235,987)

^User.CustomersD(3)=$lb("","Peter",3214,879)

Alternatively, you can use the CSV() method to simply return a result set .

USER>do ##class(%SQL.Util.Procedures).CSV(,"Name VARCHAR(50),Acc INT,Div INT","/Users/danp/projects/csvdemo/data.csv")

USER>set result = %sqlcontext.%NextResult()

USER>w result.%Next()

1

USER>do result.%Print()

Name Acc division

USER>write result.%Next()

1

USER>write result.Name

Eric

USER>write result.Acc

1234

USER>write result.Div

567

-Dan

Sorry! I didn't mean "we should never" comment to refer to how you reported the problem!! Not at all!

I wrote the code that is reporting the <FUNCTION> error. What I meant was that my code is wrong, there is a bug in my code and this is a problem that I need to address! ( I think that I have already in a later version.)

I am sorry that I wasn't more clear about this. I do recommend that you open an issue through the WRC however. That way you can be updated as to the resolution of the issue and we can use it internally to track the resolution.

We should never report an error in this way. The Extent Manager can hold stale extent definitions that, over time, can become a bit unwieldy but <FUNCTION> errors are certainly never normal. All registered extents can be rebuilt from compiled class information. I agree with Kyle in that this should be brought to the attention of support so we can do a proper analysis of why this is happening.

As for the "unwieldy" comment, look into the /deleteextent qualifier. This can be optionally specified when deleting a class definition. It causes the Extent Manager information (the registered extent) to be deleted along with the class definition. It helps keep the Extent Manager a bit cleaner. It also causes the compiled class's extent (the data!!) to be deleted so be careful.

There is a more general way to describe this situation. First consider what is actually happening. When you open an Object (%Open/%OpenId) you are copying data from persistent storage into memory. Then some other actor is modifying the data in persistent storage so the in-memory data is no longer the same as the data in persistent storage. If you were to then copy the in-memory data back to persistent storage (%Save), assuming the same identifier is used, then the modifications from the other actor would be overwritten.

There are two ways that Objects/SQL Persistence will allow this to happen (I call the action performed by the other actor an underwrite). First is the case you present with your example (same process performs underwrite and overwrite). Second is when there are no or insufficient concurrency controls in place. The second case is easily resolved simply by employing proper concurrency control mechanisms to prevent under/over writes. The first case is more sinister because normal pessimistic concurrency mechanisms won't guard against this case. This case occurs when a process holds data in memory and then updates the data in persistent storage either using direct global set/kill or by using SQL. Optimistic concurrency controls will guard against only the SQL update case, direct global set/kill will go undetected unless those set/kill actions also update the version number of the data.

%Reload() was not intended to solve this problem. %Reload() is automatically invoked when the concurrency setting of an object in memory is updated from no-retained lock (value less than 3) to a retained lock (value 3 or 4). In this case, data is automatically sync'ed with data on disk to ensure that the now-lock protected data is the same in-memory and in persistent storage. However, %Reload() can be invoked directly to perform this same action should user code somehow detect it is necessary.

If the reason you want to %Reload() the data is because the persistent storage was modified by SQL then I have the same question as Fab asked above. Why would you use SQL to update the data? I can think of a few reasons why you might want to do that but there are other ways to accomplish the same task without having to worry about the state of data in memory vs. the state of data in persistent storage.

If you were to use optimistic concurrency controls (VERSIONPROPERTY) then %Save()/UPDATE will fail if the version numbers don't match and then the user code can go into a state where conflicts can be resolved.

There is an interesting dynamic SQL feature that will guard against the case where some objects may be in memory and the user code needs to SQL to update data. I am happy to provide an example of this technique if someone is interested.

-Dan

Nicely done!

Another option that abstracts the caller completely from the quoting requirements is to use a parameter. Parameters in Dynamic SQL are positional. Also, keep in mind that literals are replaced automatically in dynamic SQL statements so using a parameter for this will not add any overhead to the processing.

set statement = ##class(%SQL.Statement).%New()

do statement.prepare("insert into Stats(Country) VALUES (?)")

set result = statement.execute(CountryId)

Keep in mind the lowercase form of prepare and execute work as their %Prepare/%Execute counterparts but they throw exceptions instead of using %Status interfaces.

If you have flexibility with the schema definition then there are certainly other models that would make a query such as this simpler.

With an array collection that is stored as a serialized list you will have to either write a procedure, define some computed properties, or you will have to use some of the InterSystems collection extensions that may not work very well with collections of objects.

Our projection of serial types (classes that extend %SerialObject) uses a "flattening model" and we don't use that for serial collections stored as "listnode" structures. We do use it in the specific case of an array of serial objects stored using a "subnode" structure - the default.

I know this all sounds a bit scary but it doesn't need to be. When you get back to this we can walk through the options.

If the default array projection for PET.ImageStudy.Injection is used then there will be an SQL table projected from the array of objects. By default, the name of this table will be PET.ImageStudy_Injection. This projected table will define a column referencing the PET.ImageStudy table. I believe this column will be named ImageStudy. There will also be a column named Injection_RadioTracer in this table and that column will be a reference to the RadioTracer table.

If the array, Injection, in the PET.ImageStudy class is not stored using defaults then this becomes a more complex problem - still solvable but more difficult.

Let me know if array storage defaults are used and then I can help compose a query - or perhaps you can do it with the information I provided above. You can verify this information using the System Explorer->SQL to view the table definitions.

-Dan

I should update the CSV and CSV2CLASS utility procedures to take advantage of table-valued functions. Then you could select JSON_ARRAYAGG() from CSVTVF(<arguments go here>). Of course you would have to supply the constructor for the objects, probably using JSON_OBJECT.

Have you considered using $xecute?

Version:1.0 StartHTML:00000092 EndHTML:00001371 StartFragment:00000172 EndFragment:00001337 CachÙ Studio clip Class User.Exec [ Abstract ]{ClassMethod test(STATUS = 1) As %String{set greeting = $xecute("(STATUS) { return $S(STATUS:""HELLO"", 1:""GOODBYE"") }",STATUS)return greeting}}and here is this code in action:

PANTHER:XEP:DEV>w ##class(Exec).test() HELLO PANTHER:XEP:DEV>w ##class(Exec).test(0) GOODBYE