My initial view of this solution was that it would not work in practice, but the more I thought through the scenarios, the more feasible it looked. My thinking was as follows.

When is the temp table built? When the page is opened? That would mean a wait on the first load, especially if no filters have been applied yet. In that scenario, though, you can just select something like the top 200 rows, as long as it is not all of them.
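To make the first-load idea concrete, here is a minimal sketch using Python's built-in `sqlite3` as a stand-in for the real database. The table names `items` and `page_cache`, the helper functions and the cap of 200 are all hypothetical; the point is only that the initial build uses a `LIMIT` so it never copies every row.

```python
import sqlite3

# Hypothetical cap on how many rows are materialised before any filter is applied.
INITIAL_ROW_CAP = 200


def create_demo_db(n_items: int) -> sqlite3.Connection:
    """In-memory stand-in for the real database: a source table and a per-session cache."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute(
        "CREATE TABLE page_cache ("
        " session_id TEXT NOT NULL, row_num INTEGER NOT NULL, item_id INTEGER NOT NULL,"
        " PRIMARY KEY (session_id, row_num))"
    )
    conn.executemany(
        "INSERT INTO items (name) VALUES (?)", [(f"item {i}",) for i in range(n_items)]
    )
    conn.commit()
    return conn


def build_initial_cache(conn: sqlite3.Connection, session_id: str) -> int:
    """Rebuild this session's cache with at most INITIAL_ROW_CAP rows; return the row count."""
    conn.execute("DELETE FROM page_cache WHERE session_id = ?", (session_id,))
    rows = conn.execute(
        "SELECT id FROM items ORDER BY id LIMIT ?", (INITIAL_ROW_CAP,)
    ).fetchall()
    conn.executemany(
        "INSERT INTO page_cache (session_id, row_num, item_id) VALUES (?, ?, ?)",
        [(session_id, n, item_id) for n, (item_id,) in enumerate(rows, start=1)],
    )
    conn.commit()
    return len(rows)


conn = create_demo_db(5000)
print(build_initial_cache(conn, "session-abc"))  # 200
```

The `row_num` column numbers the cached rows 1..N, so later page reads can be simple range lookups instead of `OFFSET` scans.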

Also, every time a filter or the page size selection changes, you will have to rebuild the temp table. This means the temp table's lookup needs to include the filters and page size, so that you can tell when they have changed for the session. That is not in the solution above, but it is not difficult to implement.
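One way to do that lookup, sketched with `sqlite3` and entirely hypothetical names (`cache_meta`, `cache_key`, `ensure_cache`, a single `category` filter): store a canonical key built from the filters and page size per session, and only rebuild when the incoming key differs from the stored one.

```python
import json
import sqlite3
from typing import Optional


def create_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE items (id INTEGER PRIMARY KEY, category TEXT);
        CREATE TABLE page_cache (
            session_id TEXT NOT NULL, row_num INTEGER NOT NULL, item_id INTEGER NOT NULL,
            PRIMARY KEY (session_id, row_num));
        CREATE TABLE cache_meta (session_id TEXT PRIMARY KEY, cache_key TEXT NOT NULL);
        """
    )
    # 50 "books" rows and 50 "music" rows.
    conn.executemany(
        "INSERT INTO items (category) VALUES (?)",
        [("books" if i % 2 else "music",) for i in range(100)],
    )
    conn.commit()
    return conn


def cache_key(category: Optional[str], page_size: int) -> str:
    # Canonical JSON, so identical filter/page-size choices always produce the same key.
    return json.dumps({"category": category, "page_size": page_size}, sort_keys=True)


def ensure_cache(conn, session_id: str, category: Optional[str], page_size: int) -> bool:
    """Rebuild the session's cache only if its filters or page size changed; return True if rebuilt."""
    key = cache_key(category, page_size)
    row = conn.execute(
        "SELECT cache_key FROM cache_meta WHERE session_id = ?", (session_id,)
    ).fetchone()
    if row is not None and row[0] == key:
        return False  # same filters and page size: reuse the existing rows
    if category is None:
        ids = conn.execute("SELECT id FROM items ORDER BY id").fetchall()
    else:
        ids = conn.execute(
            "SELECT id FROM items WHERE category = ? ORDER BY id", (category,)
        ).fetchall()
    conn.execute("DELETE FROM page_cache WHERE session_id = ?", (session_id,))
    conn.executemany(
        "INSERT INTO page_cache (session_id, row_num, item_id) VALUES (?, ?, ?)",
        [(session_id, n, item_id) for n, (item_id,) in enumerate(ids, start=1)],
    )
    conn.execute(
        "INSERT OR REPLACE INTO cache_meta (session_id, cache_key) VALUES (?, ?)",
        (session_id, key),
    )
    conn.commit()
    return True


conn = create_demo_db()
print(ensure_cache(conn, "s1", "books", 20))  # True: first build
print(ensure_cache(conn, "s1", "books", 20))  # False: nothing changed
print(ensure_cache(conn, "s1", "books", 50))  # True: page size changed
```

Sorting the JSON keys matters: without it, the same filters sent in a different order would look like a change and trigger a needless rebuild.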

If your queries hit the indexes correctly and return a small result set, this may be useful.
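As an illustration of "hitting the index", here is a hedged `sqlite3` sketch (hypothetical table and function names) where reading a page from the cache is a range lookup on the `(session_id, row_num)` primary key. SQLite's `EXPLAIN QUERY PLAN` can be used to confirm that: a `SEARCH` line means an index is used, while `SCAN` means a full pass over the table. Other databases have their own equivalent (`EXPLAIN` in PostgreSQL and MySQL, execution plans in SQL Server).

```python
import sqlite3


def create_demo_db(n_rows: int, session_id: str) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE page_cache ("
        " session_id TEXT NOT NULL, row_num INTEGER NOT NULL, item_id INTEGER NOT NULL,"
        " PRIMARY KEY (session_id, row_num))"
    )
    # Pretend item ids are row_num * 10 so pages are easy to check.
    conn.executemany(
        "INSERT INTO page_cache VALUES (?, ?, ?)",
        [(session_id, n, n * 10) for n in range(1, n_rows + 1)],
    )
    conn.commit()
    return conn


PAGE_SQL = (
    "SELECT item_id FROM page_cache"
    " WHERE session_id = ? AND row_num BETWEEN ? AND ? ORDER BY row_num"
)


def fetch_page(conn: sqlite3.Connection, session_id: str, page: int, page_size: int) -> list:
    """Return the item ids on a 1-based page, read by primary-key range."""
    first = (page - 1) * page_size + 1
    last = page * page_size
    return [item_id for (item_id,) in conn.execute(PAGE_SQL, (session_id, first, last))]


def page_query_plan(conn: sqlite3.Connection) -> str:
    """Describe how SQLite intends to run PAGE_SQL; the detail text is the last column."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + PAGE_SQL, ("s", 1, 20)).fetchall()
    return "; ".join(row[-1] for row in rows)


conn = create_demo_db(45, "s")
print(fetch_page(conn, "s", 3, 20))  # [410, 420, 430, 440, 450]
print(page_query_plan(conn))
```

The exact wording of the plan differs between SQLite versions, so it is printed here without an expected value.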

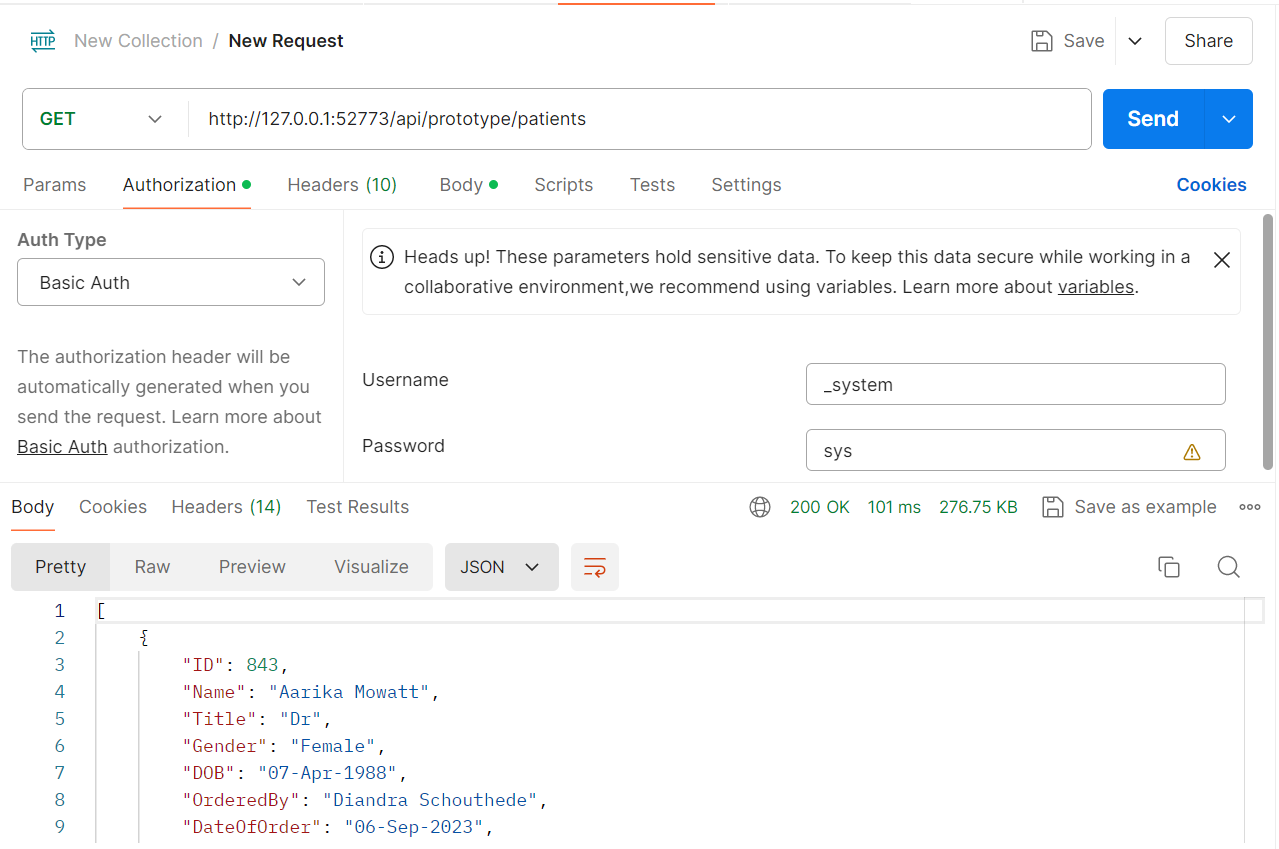

What about REST APIs, which are usually built to end the session after each request?

This will not work for REST APIs that require pagination. It can be worked around by letting the front end pass in a session value that is tied to the front-end session rather than the server session.
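A framework-free sketch of that workaround, again with `sqlite3` and hypothetical names: the `X-Client-Session` header, `resolve_session` and `list_items` are made up for illustration. The handler keeps no server-side session; the front end sends its own session value with every request, and the handler returns a new value if none was sent.

```python
import sqlite3
import uuid
from typing import Mapping

# Hypothetical header the front end uses to send back its own session value.
SESSION_HEADER = "X-Client-Session"


def create_demo_db(n_items: int) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE items (id INTEGER PRIMARY KEY);
        CREATE TABLE page_cache (
            session_id TEXT NOT NULL, row_num INTEGER NOT NULL, item_id INTEGER NOT NULL,
            PRIMARY KEY (session_id, row_num));
        """
    )
    conn.executemany("INSERT INTO items (id) VALUES (?)", [(i,) for i in range(1, n_items + 1)])
    conn.commit()
    return conn


def resolve_session(headers: Mapping[str, str]) -> str:
    """Use the front end's session value if it sent one, otherwise issue a new one."""
    token = headers.get(SESSION_HEADER)
    return token if token else str(uuid.uuid4())


def list_items(conn, headers: Mapping[str, str], page: int, page_size: int) -> dict:
    """Stateless request handler: all continuity comes from the client-supplied session value."""
    session_id = resolve_session(headers)
    cached = conn.execute(
        "SELECT COUNT(*) FROM page_cache WHERE session_id = ?", (session_id,)
    ).fetchone()[0]
    if cached == 0:  # first request for this front-end session: build the cache
        ids = conn.execute("SELECT id FROM items ORDER BY id").fetchall()
        conn.executemany(
            "INSERT INTO page_cache (session_id, row_num, item_id) VALUES (?, ?, ?)",
            [(session_id, n, item_id) for n, (item_id,) in enumerate(ids, start=1)],
        )
        conn.commit()
    first, last = (page - 1) * page_size + 1, page * page_size
    items = [
        item_id
        for (item_id,) in conn.execute(
            "SELECT item_id FROM page_cache WHERE session_id = ?"
            " AND row_num BETWEEN ? AND ? ORDER BY row_num",
            (session_id, first, last),
        )
    ]
    # Return the session value so the front end can send it with the next request.
    return {"session": session_id, "items": items}


conn = create_demo_db(50)
first = list_items(conn, {}, page=1, page_size=10)
second = list_items(conn, {SESSION_HEADER: first["session"]}, page=2, page_size=10)
print(second["items"])  # [11, 12, 13, 14, 15, 16, 17, 18, 19, 20]
```

Two caveats on this sketch: real frameworks usually treat header names case-insensitively, and a production API would need to validate or sign the session value so one client cannot read another client's cached rows.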

You will also need to build a cleanup mechanism to purge rows after a session has ended. The front end can send you an instruction on logout, but not if the browser is simply closed. So it will have to be a scheduled task that runs at night, or at some other convenient time, and truncates the table.
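A hedged sketch of both cleanup paths with `sqlite3`. The function names and the 24-hour threshold are hypothetical, and the scheduled job here is a variation on plain truncation: it deletes only rows whose `last_access` timestamp is older than the threshold, so users who are still active at night keep their cache. It assumes every page read refreshes `last_access`, which is not shown.

```python
import sqlite3
import time
from typing import Optional

# Hypothetical idle period after which a session's cached rows are considered abandoned.
STALE_AFTER_SECONDS = 24 * 60 * 60


def create_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE page_cache ("
        " session_id TEXT NOT NULL, row_num INTEGER NOT NULL, item_id INTEGER NOT NULL,"
        " last_access REAL NOT NULL, PRIMARY KEY (session_id, row_num))"
    )
    # Lets the scheduled purge find stale rows without scanning the whole table.
    conn.execute("CREATE INDEX idx_page_cache_last_access ON page_cache (last_access)")
    return conn


def purge_session(conn: sqlite3.Connection, session_id: str) -> int:
    """Explicit cleanup, e.g. called from a logout request; returns rows deleted."""
    cur = conn.execute("DELETE FROM page_cache WHERE session_id = ?", (session_id,))
    conn.commit()
    return cur.rowcount


def purge_stale(conn: sqlite3.Connection, now: Optional[float] = None) -> int:
    """Scheduled cleanup for sessions that never logged out (e.g. the browser was closed)."""
    now = time.time() if now is None else now
    cur = conn.execute(
        "DELETE FROM page_cache WHERE last_access < ?", (now - STALE_AFTER_SECONDS,)
    )
    conn.commit()
    return cur.rowcount


conn = create_demo_db()
now = time.time()
old = now - 2 * STALE_AFTER_SECONDS
conn.executemany(
    "INSERT INTO page_cache VALUES (?, ?, ?, ?)",
    [("active", 1, 10, now), ("abandoned", 1, 20, old),
     ("abandoned", 2, 30, old), ("logged-out", 1, 40, now)],
)
print(purge_session(conn, "logged-out"))  # 1
print(purge_stale(conn, now))  # 2
```

If a full nightly truncate is acceptable for your users, a single `DELETE FROM page_cache` (or `TRUNCATE` on databases that support it) is simpler.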
