
Target Practice for IrisClusters with KWOK

KWOK (Kubernetes WithOut Kubelet) is a lightweight tool that simulates nodes and pods without running real workloads, so you can quickly test and scale IrisCluster behavior, scheduling, and zone assignment. If you are wondering what value there is in this without the IRIS workload, you will realize it quickly the next time you are playing with your desk toys waiting for nodes and pods to come up, or when you get the bill for provisioning expensive disk behind the PVCs for no other reason than to validate your topology.

Here we will use it to simulate an IrisCluster and target a topology across 4 zones, implementing high availability mirroring across zones, disaster recovery to an alternate zone, and horizontal ephemeral compute (ECP) in a zone of its own. All of this is done locally, is suitable for repeatable testing, and is a valuable validation check mark on the road to production.


Goal

The graphic above sums it up, but let's put it in a list for attestation at the end of the exercise.

⬜ HA Mirror Set, Geographically situated > 500 miles as the crow flies (Boston, Chicago)

⬜ DR Async, Geographically situated > 1000 miles as an Archer eVTOL flies (Seattle)

⬜ Horizontal Compute situated across the pond for print (London)

⬜ Pod Counts Match Topology

lesgo...

Setup

We are using KWOK "in-cluster". Although KWOK also supports creating the clusters themselves, here there will be a single control plane and a single initial node, with the KWOK and IKO charts installed.

Cluster

Let's provision a local Kind cluster, with a single "physical node" and a control plane.

cat <<EOF | kind create cluster --name ikoplus-kwok --config=-
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
networking:
  disableDefaultCNI: false
EOF

Should be a simple cluster with a control plane and a worker node.


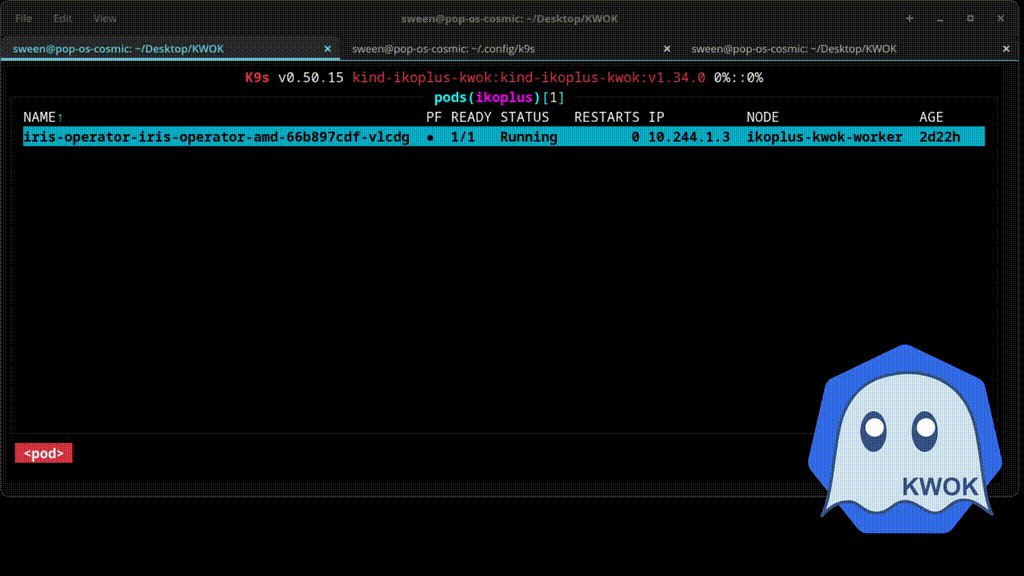

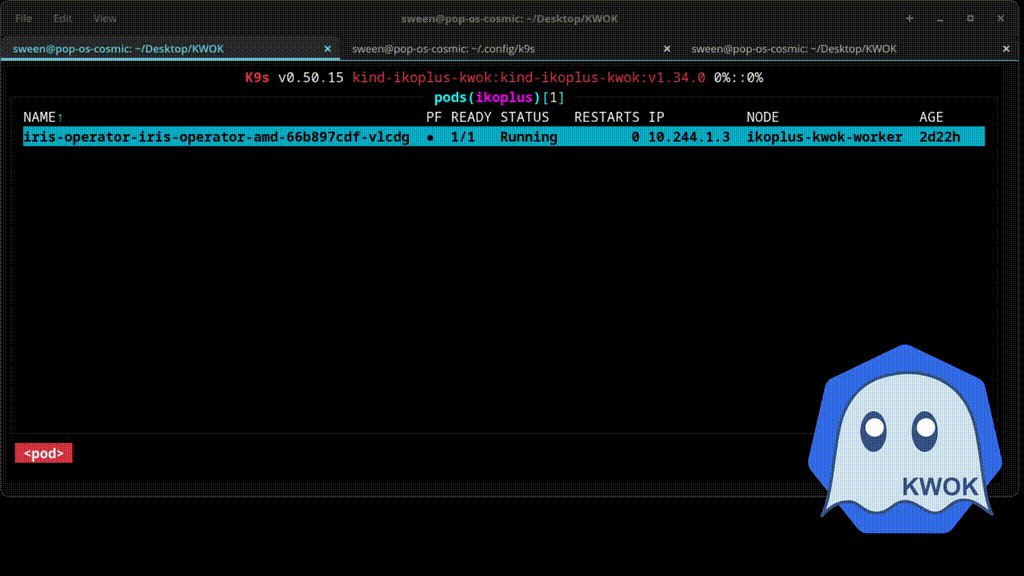

Install IKO Chart

Install the Operator under test.

helm install iris-operator . --namespace ikoplus

Install KWOK

In-cluster, per the docs.

helm repo add kwok https://kwok.sigs.k8s.io/charts/

helm upgrade --namespace kube-system --install kwok kwok/kwok

helm upgrade --install kwok kwok/stage-fast

helm upgrade --install kwok kwok/metrics-usage

Charts looking good:


Configure KWOK

KWOK configuration for this distraction has two parts:

- Create KWOK Nodes

- Tell KWOK which ones to Manage

Data Nodes

Here I create three specific KWOK nodes to hold the data nodes for HA/DR and the mirrorMap topology. They are ordinary Kubernetes Node objects, with specific annotations and labels that identify them as "fake". It is important to note that all the fields are fully configurable: you can set resources such as cpu and memory to test the pods' resource requests, and you can set the phase values as well.

apiVersion: v1
kind: Node
metadata:
  annotations:
    node.alpha.kubernetes.io/ttl: "0"
    kwok.x-k8s.io/node: fake
  labels:
    beta.kubernetes.io/arch: amd64
    beta.kubernetes.io/os: linux
    kubernetes.io/arch: amd64
    kubernetes.io/hostname: ikoplus-kwok-data-node-0
    kubernetes.io/os: linux
    kubernetes.io/role: worker
    topology.kubernetes.io/zone: boston
    type: kwok
  name: ikoplus-kwok-data-node-0
status:
  allocatable:
    cpu: 32
    nvidia.com/gpu: 32
    memory: 256Gi
    pods: 110
  capacity:
    cpu: 32
    nvidia.com/gpu: 32
    memory: 256Gi
    pods: 110
  nodeInfo:
    architecture: amd64
    bootID: ""
    containerRuntimeVersion: ""
    kernelVersion: ""
    kubeProxyVersion: fake
    kubeletVersion: fake
    machineID: ""
    operatingSystem: linux
    osImage: ""
    systemUUID: ""
  phase: Running
---
apiVersion: v1
kind: Node
metadata:
  annotations:
    node.alpha.kubernetes.io/ttl: "0"
    kwok.x-k8s.io/node: fake
  labels:
    beta.kubernetes.io/arch: amd64
    beta.kubernetes.io/os: linux
    kubernetes.io/arch: amd64
    kubernetes.io/hostname: ikoplus-kwok-data-node-1
    kubernetes.io/os: linux
    kubernetes.io/role: worker
    topology.kubernetes.io/zone: chicago
    type: kwok
  name: ikoplus-kwok-data-node-1
status:
  allocatable:
    cpu: 32
    nvidia.com/gpu: 32
    memory: 256Gi
    pods: 110
  capacity:
    cpu: 32
    nvidia.com/gpu: 32
    memory: 256Gi
    pods: 110
  nodeInfo:
    architecture: amd64
    bootID: ""
    containerRuntimeVersion: ""
    kernelVersion: ""
    kubeProxyVersion: fake
    kubeletVersion: fake
    machineID: ""
    operatingSystem: linux
    osImage: ""
    systemUUID: ""
  phase: Running
---
apiVersion: v1
kind: Node
metadata:
  annotations:
    node.alpha.kubernetes.io/ttl: "0"
    kwok.x-k8s.io/node: fake
  labels:
    beta.kubernetes.io/arch: amd64
    beta.kubernetes.io/os: linux
    kubernetes.io/arch: amd64
    kubernetes.io/hostname: ikoplus-kwok-data-node-2
    kubernetes.io/os: linux
    kubernetes.io/role: worker
    topology.kubernetes.io/zone: seattle
    type: kwok
  name: ikoplus-kwok-data-node-2
status:
  allocatable:
    cpu: 32
    nvidia.com/gpu: 32
    memory: 256Gi
    pods: 110
  capacity:
    cpu: 32
    nvidia.com/gpu: 32
    memory: 256Gi
    pods: 110
  nodeInfo:
    architecture: amd64
    bootID: ""
    containerRuntimeVersion: ""
    kernelVersion: ""
    kubeProxyVersion: fake
    kubeletVersion: fake
    machineID: ""
    operatingSystem: linux
    osImage: ""
    systemUUID: ""
  phase: Running

The full YAML is above, but here are the annotation and labels that are important to call out:

- kwok annotation

- iko zone

- type: kwok
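Since the three data-node manifests differ only in name and zone, they can also be generated rather than copied. The following is a minimal sketch, not the method used above: the function names `kwok_node_manifest` and `kwok_data_nodes` are hypothetical, and the manifest is trimmed (no `nodeInfo`, no GPU resources) to keep the three called-out fields visible.

```shell
#!/usr/bin/env bash
# Sketch: emit a trimmed KWOK Node manifest for a given node name and zone.
# Function names and argument order are illustrative assumptions.
kwok_node_manifest() {
  local name="$1" zone="$2"
  cat <<EOF
apiVersion: v1
kind: Node
metadata:
  annotations:
    node.alpha.kubernetes.io/ttl: "0"
    kwok.x-k8s.io/node: fake          # the kwok annotation
  labels:
    kubernetes.io/arch: amd64
    kubernetes.io/hostname: ${name}
    kubernetes.io/os: linux
    kubernetes.io/role: worker
    topology.kubernetes.io/zone: ${zone}   # the zone IKO targets
    type: kwok                             # used later as a selector
  name: ${name}
status:
  allocatable:
    cpu: 32
    memory: 256Gi
    pods: 110
  capacity:
    cpu: 32
    memory: 256Gi
    pods: 110
  phase: Running
EOF
}

# Print the three data-node manifests as one multi-document YAML stream.
kwok_data_nodes() {
  local i=0 zone
  for zone in boston chicago seattle; do
    echo "---"
    kwok_node_manifest "ikoplus-kwok-data-node-${i}" "${zone}"
    i=$((i + 1))
  done
}
```

With the functions sourced, something like `kwok_data_nodes | kubectl apply -f -` should create the same three nodes. The same function could feed a loop for compute nodes in another zone.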


Apply the nodes to the cluster and take a peek; you should see your 3 fake data nodes.

Compute Nodes

Now let's provision many compute nodes, because we can. You can use the script below to prompt for a count and loop over the creation.

#!/bin/bash

SCRIPT_DIR=$(readlink -f "$(dirname "${BASH_SOURCE[0]}")")

function help() {
    echo "usage: nodes.sh [-h]"
    echo
    echo "Description: Creates simulated KWOK worker nodes for performance testing"
    echo
    echo "Preconditions: "
    echo " - The script assumes you've logged into your cluster already. If not, it will tell you to login."
    echo " - The script checks that you have the kwok-controller installed, otherwise it'll tell you to install it first."
    echo
    echo "Options:"
    echo " -h Print this help message"
    echo
}

function check_kubectl_login_status() {
    set +e
    kubectl get ns default &> /dev/null
    res="$?"
    set -e
    OCP="$res"
    # Any non-zero exit code means kubectl could not reach the cluster
    if [ "$OCP" -ne 0 ]
    then
        echo "You need to login to your Kubernetes Cluster"
        exit 1
    else
        echo
        echo "Nice, looks like you're logged in"
        echo ""
    fi
}

function check_kwok_installed_status() {
    set +e
    kubectl get pod -A | grep kwok-controller &> /dev/null
    res2="$?"
    set -e
    KWOK="$res2"
    # grep exits non-zero when no kwok-controller pod is listed
    if [[ "$KWOK" -ne 0 ]]
    then
        echo "You need to install the KWOK Controller first before running this script"
        exit 1
    else
        echo "Nice, the KWOK Controller is installed"
    fi
}

while getopts h option; do
    case $option in
        h)
            help
            exit 0
            ;;
        *)
            ;;
    esac
done
shift $((OPTIND-1))

# Track whether we have a valid kubectl login
echo "Checking whether we have a valid cluster login or not..."
check_kubectl_login_status

# Track whether you have the KWOK controller installed
echo "Checking KWOK Controller installation status"
echo
check_kwok_installed_status

echo
read -p "How many simulated KWOK nodes do you want? " NODES
echo "Nodes number is $NODES"
echo " "

COUNTER=1
while [ "$COUNTER" -le "$NODES" ]
do
    ORIG_COUNTER=$((COUNTER - 1))
    echo "Submitting node $COUNTER"
    # Rename the node in the template in place, then apply it.
    # Had to do this OSTYPE because sed acts differently on Linux versus Mac
    case "$OSTYPE" in
        linux-gnu*)
            sed -i "s/ikoplus-kwok-node-$ORIG_COUNTER/ikoplus-kwok-node-$COUNTER/g" "${SCRIPT_DIR}/printnodes.yaml";;
        darwin*)
            sed -i '' "s/ikoplus-kwok-node-$ORIG_COUNTER/ikoplus-kwok-node-$COUNTER/g" "${SCRIPT_DIR}/printnodes.yaml";;
        *)
            sed -i "s/ikoplus-kwok-node-$ORIG_COUNTER/ikoplus-kwok-node-$COUNTER/g" "${SCRIPT_DIR}/printnodes.yaml";;
    esac
    kubectl apply -f "${SCRIPT_DIR}/printnodes.yaml"
    COUNTER=$((COUNTER + 1))
done

# Let's reset the original printnodes.yaml file back to its original value
case "$OSTYPE" in
    linux-gnu*)
        sed -i "s/ikoplus-kwok-node-$NODES/ikoplus-kwok-node-0/g" "${SCRIPT_DIR}/printnodes.yaml";;
    darwin*)
        sed -i '' "s/ikoplus-kwok-node-$NODES/ikoplus-kwok-node-0/g" "${SCRIPT_DIR}/printnodes.yaml";;
    *)
        sed -i "s/ikoplus-kwok-node-$NODES/ikoplus-kwok-node-0/g" "${SCRIPT_DIR}/printnodes.yaml";;
esac

# Check for all nodes to report complete
echo "Waiting until all the simulated nodes become ready:"
kubectl wait --for=condition=Ready nodes --selector type=kwok --timeout=600s
echo " "
echo "Total amount of simulated nodes requested is: $NODES"
echo "Total number of created nodes is: $(kubectl get nodes --selector type=kwok -o name | wc -l)"
kubectl get nodes --selector type=kwok
echo " "
echo "FYI, to clean up the kwok nodes, issue this:"
echo "kubectl get nodes --selector type=kwok -o name | xargs kubectl delete"

Let's create 128 compute nodes, which gets us close to a reasonable ECP limit.

The cluster should now be at 133 nodes, 1 control plane, 1 worker, 3 data nodes, and 128 compute.
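The arithmetic above (1 + 1 + 3 + 128 = 133) is easy to check mechanically. Here is a small sketch under stated assumptions: the helper names `expected_nodes` and `check_node_count` are hypothetical, and the check reads one node per line on stdin, as produced by `kubectl get nodes --no-headers`.

```shell
#!/usr/bin/env bash
# Sketch: compare the node count you expect with what the cluster reports.
# Helper names are illustrative assumptions, not part of KWOK or IKO.

# args: control-plane nodes, real workers, KWOK data nodes, KWOK compute nodes
expected_nodes() {
  echo $(( $1 + $2 + $3 + $4 ))
}

# Reads one node per line on stdin and compares the line count to $1.
check_node_count() {
  local expected="$1" actual
  actual="$(grep -c . || true)"   # count non-empty lines; tolerate zero matches
  if [ "$actual" -eq "$expected" ]; then
    echo "OK: $actual nodes"
  else
    echo "MISMATCH: expected $expected, found $actual"
    return 1
  fi
}
```

A possible invocation would be `kubectl get nodes --no-headers | check_node_count "$(expected_nodes 1 1 3 128)"`.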


Now hang this kwok command in another terminal to perpetually annotate.

sween@pop-os-cosmic:~$ kwok --manage-nodes-with-annotation-selector=kwok.x-k8s.io/node=fake

The result should be a pretty busy loop.


Apply IrisCluster SUT

Here is our system under test, the IrisCluster itself.

apiVersion: intersystems.com/v1alpha1
kind: IrisCluster
metadata:
  name: ikoplus-kwok
  namespace: ikoplus
  labels:
    type: kwok
spec:
  volumes:
  - name: foo
    emptyDir: {}
  topology:
    data:
      image: containers.intersystems.com/intersystems/iris-community:2025.1
      mirrorMap: primary,backup,drasync
      mirrored: true
      podTemplate:
        spec:
          preferredZones:
          - boston
          - chicago
          - seattle
      webgateway:
        replicas: 1
        alternativeServers: LoadBalancing
        applicationPaths:
        - /*
        ephemeral: true
        image: containers.intersystems.com/intersystems/webgateway-lockeddown:2025.1
        loginSecret:
          name: webgateway-secret
        type: apache-lockeddown
      volumeMounts:
      - name: foo
        mountPath: "/irissys/foo/"
      storageDB:
        mountPath: "/irissys/foo/data/"
      storageJournal1:
        mountPath: "/irissys/foo/journal1/"
      storageJournal2:
        mountPath: "/irissys/foo/journal2/"
      storageWIJ:
        mountPath: "/irissys/foo/wij/"
    arbiter:
      image: containers.intersystems.com/intersystems/arbiter:2025.1
    compute:
      image: containers.intersystems.com/intersystems/irishealth:2025.1
      ephemeral: true
      replicas: 128
      preferredZones:
      - london

Explanations

The emptyDir {} volume maneuver is pretty clutch for this test with IKO. The opinionated workflow for a data node would be to use external storage through a CSI driver or similar, but that is counterproductive for this test. Thank you to @Steve Lubars from ISC for the hint on this; I was pretty stuck for a bit making the IrisCluster all ephemeral.

The mirrorMap and preferredZones settings declare the roles of the data nodes and target the zones where the data and compute pods should land.

Set the ephemeral flag on the compute nodes to get the same effect as the emptyDir {} for the data nodes. The replica count of 128 matches the number of compute nodes we provisioned.

lesgo...


The apply...

Attestation

One command to check them all.

kubectl get pods -A -l 'intersystems.com/role=iris' -o json \
| jq -r --argjson zmap "$(kubectl get nodes -o json \
  | jq -c 'reduce .items[] as $n ({}; .[$n.metadata.name] = (
      $n.metadata.labels["topology.kubernetes.io/zone"]
    ))')" '
  [ .items[] | {zone: ($zmap[.spec.nodeName] // "unknown")} ]
  | group_by(.zone)
  | map({zone: .[0].zone, total: length})
  | (["ZONE","TOTAL"], (.[] | [ .zone, ( .total|tostring ) ]))
  | @tsv' | column -t

ZONE TOTAL

boston 1

chicago 1

london 19

seattle 1
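To turn that table into a pass/fail verdict per zone, the output can be piped through a small checker. This is a sketch only: `check_topology` is a hypothetical helper, and the expected counts are hard-coded from the IrisCluster spec in this article (one data pod each in boston, chicago, and seattle; 128 compute pods in london).

```shell
#!/usr/bin/env bash
# Sketch: compare observed "ZONE TOTAL" rows on stdin with the expected topology.
# The function name and the hard-coded expectations are illustrative assumptions.
check_topology() {
  awk '
    BEGIN {
      want["boston"] = 1; want["chicago"] = 1
      want["seattle"] = 1; want["london"] = 128
    }
    $1 == "ZONE" { next }              # skip the header row
    NF >= 2 { got[$1] = $2 + 0 }       # remember the observed count per zone
    END {
      bad = 0
      n = split("boston chicago seattle london", zones, " ")
      for (i = 1; i <= n; i++) {
        z = zones[i]
        have = (z in got) ? got[z] : 0
        verdict = (have == want[z]) ? "PASS" : "FAIL"
        if (verdict == "FAIL") bad = 1
        printf "%s %s expected=%d actual=%d\n", verdict, z, want[z], have
      }
      exit bad                         # non-zero exit if any zone is off
    }'
}
```

Appending `| check_topology` to the attestation command should report each zone and exit non-zero when any count is off, which makes it usable as a gate in a repeatable test run.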

Results

✅ HA Mirror Set, Geographically situated > 500 miles as the crow flies (Boston, Chicago)

✅ DR Async, Geographically situated > 1000 miles as an Archer eVTOL flies (Seattle)

✅ Horizontal Compute situated across the pond for print (London)

❌ Pod Counts Match Topology

After launching this over and over, it never managed to schedule all 128 pods to the available compute nodes, because the KWOK nodes went NotReady at a random point around 20-50 nodes in. I think this is because the ECP nodes rely on a healthy cluster to connect to before IKO continues provisioning, so I have some work to do to see whether the IKO readiness checks can be faked as well. The other thing I did was cordon the real "worker" node to speed things up, which sent the arbiter to a fake node; I was able to get more compute nodes in with this arrangement. I suppose I could have targeted the topology for the arbiter too, but it seems arbiters can run basically anywhere these days, including out of cluster.
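To see when the fake nodes start dropping to NotReady, a tiny summarizer over the node list can help. This is a sketch under assumptions: `readiness_summary` is a hypothetical helper, and it expects `kubectl get nodes --no-headers` output on stdin, where the second column is the node status.

```shell
#!/usr/bin/env bash
# Sketch: summarize node readiness from `kubectl get nodes --no-headers` output.
# Column 2 is STATUS, for example Ready, NotReady, or Ready,SchedulingDisabled.
readiness_summary() {
  awk '
    {
      state = $2
      sub(/,.*/, "", state)    # drop suffixes such as ",SchedulingDisabled"
      count[state]++
    }
    END {
      printf "Ready=%d NotReady=%d\n", count["Ready"], count["NotReady"]
    }'
}
```

One way to use it would be `kubectl get nodes --selector type=kwok --no-headers | readiness_summary`, repeated while the IrisCluster is provisioning. For the cordon step, `kubectl cordon ikoplus-kwok-worker` should work if Kind used its usual `<cluster-name>-worker` naming; confirm the actual node name with `kubectl get nodes` first.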

Fun and useful for 3/4 use cases above at this point for sure.
