Export .cls and .mac files from database

I am taking over a production system that had some HSLIB and other database routine and class files modified. However, I do not know what was modified.

I developed a routine that allows me to load each database in our production instance and compare it against a default instance using the SIZE attribute. This allowed me to generate a list of files where the .INT file size in production does not match the file size in the default instance. However, doing a spot check of the .cls or .mac files indicates that in some cases these files are identical, just the intermediate files are different.

What I would like to do is export these files (.cls, .int, .mac) to XML, placing the files from production in one folder and the files for the default instance in another. Afterwards I can use something like BeyondCompare to better identify what has changed.

In my code I currently use the following to get information about a routine where p = the name of the routine:

set size1=^["^^"_prodDatabase]ROUTINE(p,0,"SIZE")

From what I can see, all the $System.OBJ.Export type of methods only work with the current namespace. Is there any way to get this to work with a virtual namespace?

Is there anything like

set status = ["^^DatabaseName"]$System.OBJ.Export((itemName*.cls,itemname*.mac),exportFileName)

Thanks.

Daniel Lee

Amazing Charts | Harris CareTracker

Comments

Is there a reason that the use of a Virtual Namespace is a requirement? If not, you can use ##class(%Studio.SourceControl.ISC).BaselineExport() to export the entire contents of the Namespace to disk for file-based diffing (this is how I normally do it).

Good question.

I tried comparing our production namespaces with default databases but because of mappings, the results were not accurate. This led me to just compare database to database. This allowed me to accurately identify any missing files and changed files.

So my routine currently does not know the namespace in which the routine lives, it just knows which database the code lives in. For instance, I have over 1200 changes in mgr\hslib\cache.dat.

Below is an example of the results my code reveals:

isdifferent("HS.AU.IHE.XDSb.DocumentSource.Operations.1")="this production routine is DIFFERENT from default routine. Size1:61135, size2:48533"

isdifferent("HS.AU.Message.IHE.XDSb.ProvideAndRegisterRequest.1")="this production routine is DIFFERENT from default routine. Size1:268366, size2:263389"

I add names like HS.AU.IHE.XDSb.DocumentSource.Operations.1 to an array. After I have compared the two databases, I now want to export each item in the array from both the production database and the default database. I would export each item, HS.AU.IHE.XDSb.DocumentSource.Operations.1.*, to an XML file. Production and default XML files go to different directories.
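For readers following along, the database-to-database comparison described above might look roughly like the sketch below. This is not the poster's actual routine: the label `CompareSizes` and its parameter names are hypothetical, and it assumes only what the question states, namely that the size is stored at `^ROUTINE(name,0,"SIZE")` and that both arguments are database directory paths.

```objectscript
CompareSizes(prodDatabase, defaultDatabase, isdifferent) public {
    // prodDatabase, defaultDatabase: database directory paths (assumed inputs)
    // isdifferent: passed by reference, receives one node per mismatched routine
    kill isdifferent
    set p = ""
    for {
        // Walk routine names in the production database through an
        // extended global reference to its implied namespace
        set p = $order(^["^^"_prodDatabase]ROUTINE(p))
        quit:p=""
        set size1 = $get(^["^^"_prodDatabase]ROUTINE(p,0,"SIZE"))
        // $get returns "" when the routine is missing from the default
        // database, so missing routines are reported as different too
        set size2 = $get(^["^^"_defaultDatabase]ROUTINE(p,0,"SIZE"))
        if size1 '= size2 {
            set isdifferent(p) = "Size1:"_size1_", size2:"_size2
        }
    }
}
```

It would be called with the array passed by reference, for example `do CompareSizes(prodDir, defaultDir, .isdifferent)`. A size match does not prove the code is identical, so this only narrows the list of candidates to export.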

I understand ... so you want to only export specific things which it has found to have differences in the code. That makes sense since you are trying to use it at the end of a piece of code already in place. I am afraid I don't know how to do that, but if you contact Support they may be able to suggest a trick or two.

The function you mention, ##class(%Studio.SourceControl.ISC).BaselineExport(), seems tied to something that our production system isn't using. I tried this in terminal and got the following.

Creating Source Control Object...

Failed! Quiting BaselineExport().

We do not have any source control tied to our production system.

Sorry - I forgot that you first need to specify the source control class in the Management Portal. Put "Source Control" in the Search box on the SMP homepage, and then go to that page (e.g. http://localhost:57772/csp/sys/mgr/%25CSP.UI.Portal.SourceControl.zen). Select the Namespace in the left column and then "%Studio.SourceControl.ISC". Save the changes and try the BaselineExport() again. When you are done with the export, change the Source Control back to "None"

As a general tip, if you do enable and enforce source control, then you wouldn't need to be querying your class definitions to find variations between environments - you could see all of that (and so much more!) in your source control system ;)

That is a fairly common problem - the need to compare two different codebases. It could be a test and prod server or just different production servers. Anyway I usually compare them like this:

- Install source control hook (I prefer cache-tort-git udl fork but any udl based will do) on all affected servers.

- Export everything from the base server (with oldest sources) using source control hook into a new repository.

- Commit this state as an initial state.

- Clone the repository with initial state to the other server.

- Export everything from the other server into the repository.

- Commit again (if you have more than two parallel codebases you may need to branch out).

- Use any commit viewer to see the difference (I prefer GitHub/GitLab).

In a Terminal you can issue a ZNSPACE command or run DO ^%CD and swap to a database (technically, an implied namespace). See the ZNSPACE doc here.
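As a small illustration of the ZNSPACE suggestion, switching to an implied namespace could look like this. The directory path is a made-up placeholder; substitute the folder that holds the database file.

```objectscript
    // Hypothetical database folder; "^^" followed by a directory path
    // denotes an implied namespace for that database
    znspace "^^c:\intersystems\cache\mgr\hslib\"
    // Show where the process is now running
    write $namespace
```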

Or if you want to do your exporting from the System Explorer section of Portal, use the dropdown on the left-hand panel to change from a "Namespaces" perspective to a "Databases" one.

I agree with @Eduard Lebedyuk's answer, and want to introduce another toolset:

1. Import ISC_DEV utility to a DEFAULT_INSTANCE say in a USER namespace and map the classes of the utility to %All.

2. Set up the workdir to export the code:

YOURNAMESPACE> w ##class(dev.code).workdir("/path/to/your/working/directory/")

Then export the code by calling:

YOURNAMESPACE> w ##class(dev.code).export()

This will export cls, routines, and dfi (DeepSee) into separate files.

3. Create the repository in git and commit all the files from the directory into the repository (and even push, if you use Github/Gitlab)

4. Repeat steps 1-2 for a PRODUCTION_INSTANCE and export classes into the same directory.

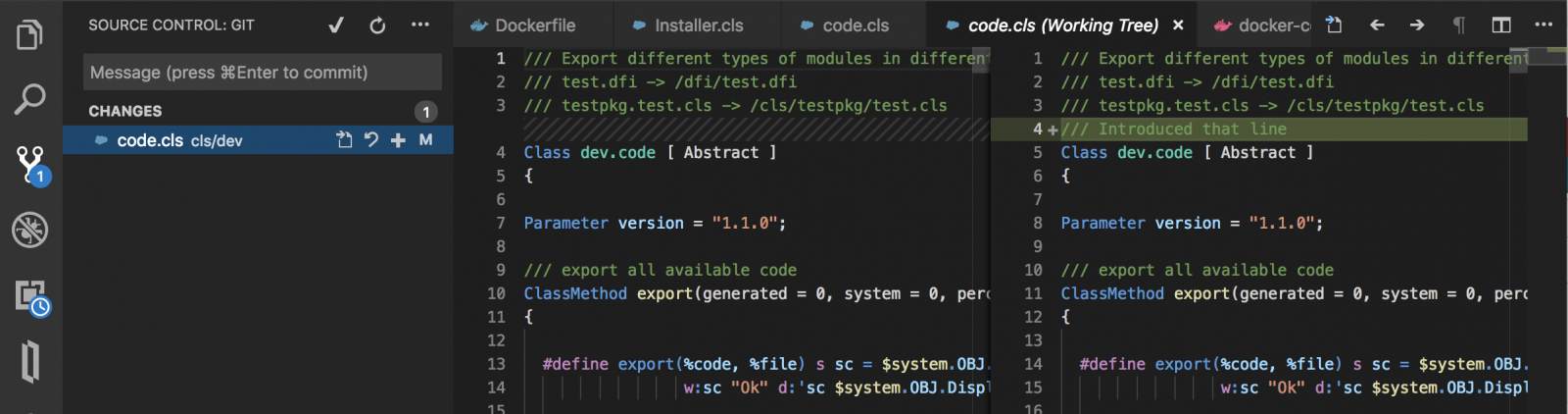

5. Compare the changes. If you open the directory in VSCode with Object_Script plugin by @Dmitry Maslennikov you will immediately see the changes in Source Control section of VSCode. E.g. I introduced one line and saved the class and it shows the files changed since the latest commit and the line with the change.

Alternatively you can commit and push changes to GitHub/GitLab and see the diff since the latest commit. E.g. like changes in this commit.

If you don't have DeepSee resources, step 1 can be changed to Atelier or VSCode - both have the out-of-the-box functionality to export the source into files in UDL form.

HTH

Thank you for all the excellent responses. The solution was far simpler.

#;Do not include Cache.DAT as part of the sourceDB path, just folder path

set impliedNamespace = "^^"_sourceDB

New $NAMESPACE

set $NAMESPACE = impliedNamespace

This changes the namespace to an implied namespace and allows me to export the classes and routines.

This documentation is what got me on the right path: https://docs.intersystems.com/irislatest/csp/docbook/DocBook.UI.Page.cl…
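Putting the pieces of this thread together, the export step might be sketched as follows. This is an illustration under stated assumptions, not the poster's final code: the label `ExportDiffs` and the `exportDir` parameter are hypothetical, `exportDir` is assumed to exist already and to end with a path separator, and the array is assumed to hold routine names such as those shown in the `isdifferent` output earlier.

```objectscript
ExportDiffs(sourceDB, exportDir, isdifferent) public {
    // sourceDB: database folder path, without Cache.DAT
    // exportDir: existing target folder ending in a path separator (assumed)
    // isdifferent: array of routine names, passed by reference
    // NEW restores the original namespace when this procedure exits
    new $namespace
    set $namespace = "^^"_sourceDB
    set name = ""
    for {
        set name = $order(isdifferent(name))
        quit:name=""
        // Export the intermediate routine to its own XML file
        set sc = $system.OBJ.Export(name_".int", exportDir_name_".int.xml")
        if $system.Status.IsError(sc) {
            write !, name, ": ", $system.Status.GetErrorText(sc)
        }
    }
}
```

Running it once per database, for example `do ExportDiffs(prodDir, prodExportDir, .isdifferent)` and then again with the default database and a second folder, gives two directories that a file comparison tool can diff. The item list passed to `$system.OBJ.Export` is comma-separated, so `.cls` or `.mac` items can be added to the same call where the corresponding source exists.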