Continuous Delivery of your InterSystems solution using GitLab - Part IX: Container architecture

In this series of articles, I'd like to present and discuss several possible approaches toward software development with InterSystems technologies and GitLab. I will cover such topics as:

- Git 101

- Git flow (development process)

- GitLab installation

- GitLab Workflow

- Continuous Delivery

- GitLab installation and configuration

- GitLab CI/CD

- Why containers?

- Containers infrastructure

- CD using containers

- CD using ICM

- Container architecture

In this article, we will talk about building your own container and deploying it.

Durable %SYS

Since containers are rather ephemeral, they should not store any application data. The Durable %SYS feature lets us store settings, configuration, %SYS data, and so on, on a host volume instead, namely:

- The iris.cpf file.

- The /csp directory, containing the web gateway configuration and log files.

- The file /httpd/httpd.conf, the configuration file for the instance’s private web server.

- The /mgr directory, containing the following:

  - The IRISSYS system database, comprising the IRIS.DAT and iris.lck files and the stream directory, and the iristemp, irisaudit, iris and user directories containing the IRISTEMP, IRISAUDIT, IRIS and USER system databases.

  - The write image journaling file, IRIS.WIJ.

  - The /journal directory containing journal files.

  - The /temp directory for temporary files.

  - Log files including messages.log, journal.log, and SystemMonitor.log.

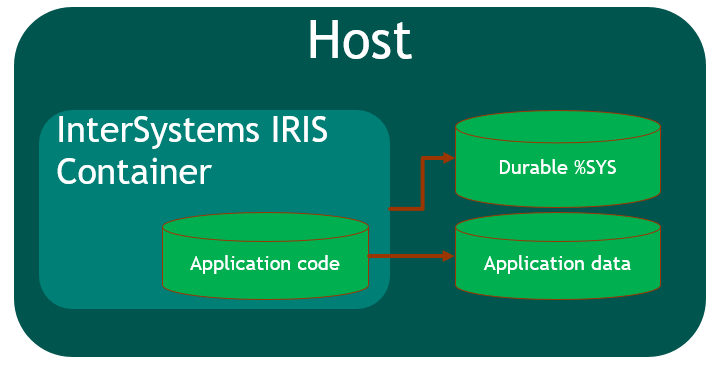
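To make the list concrete, here is a small Python sketch that maps the items above onto the paths they end up under, assuming ISC_DATA_DIRECTORY=/data/sys as in the CI configuration later in this article. The names DURABLE_ITEMS, durable_paths and missing_durable_items are hypothetical, and the item list is only the subset named above, not a full inventory of what InterSystems IRIS writes.

```python
import os
from pathlib import PurePosixPath

# Durable %SYS items named in the article, relative to ISC_DATA_DIRECTORY.
# Illustrative subset only; the real layout is created by InterSystems IRIS.
DURABLE_ITEMS = [
    "iris.cpf",
    "csp",
    "httpd/httpd.conf",
    "mgr/IRIS.DAT",        # IRISSYS system database
    "mgr/user/IRIS.DAT",   # USER database
    "mgr/IRIS.WIJ",        # write image journal
    "mgr/journal",
    "mgr/temp",
    "mgr/messages.log",
]


def durable_paths(data_dir: str) -> list[str]:
    """Absolute paths that the article says live on the durable volume."""
    root = PurePosixPath(data_dir)
    return [str(root / item) for item in DURABLE_ITEMS]


def missing_durable_items(data_dir: str) -> list[str]:
    """Expected durable paths that are not present on disk yet."""
    return [p for p in durable_paths(data_dir) if not os.path.exists(p)]


if __name__ == "__main__":
    for path in durable_paths("/data/sys"):
        print(path)
```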

Container architecture

On the other hand, we need to store application code inside our container so that we can upgrade it when required.

All that brings us to this architecture:

To achieve this at build time, we need, at minimum, to create one additional database (to store application code) and map it into our application namespace. In my example, I use the USER namespace to hold application data, as it already exists and is durable.
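A toy Python model of this split may help: the USER namespace keeps USER as its data database, while the Form package is resolved from the APP database, which is rebuilt with every image. This is not an InterSystems API; the Namespace class, its fields, and the rule that a package mapping also covers subpackages are assumptions of the sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Namespace:
    """Toy model of a namespace: default databases plus package mappings."""
    name: str
    code_db: str                 # default database for code (classes/routines)
    data_db: str                 # default database for data (globals)
    package_map: dict[str, str] = field(default_factory=dict)  # package -> database

    def class_database(self, class_name: str) -> str:
        """Database a class definition is resolved from in this namespace."""
        package = class_name.rpartition(".")[0]
        for mapped, db in self.package_map.items():
            # Assumption of this model: a mapping also covers subpackages.
            if package == mapped or package.startswith(mapped + "."):
                return db
        return self.code_db


# Non-durable APP database holds the code; durable USER database holds the data.
USER = Namespace("USER", code_db="USER", data_db="USER", package_map={"Form": "APP"})
NON_DURABLE = {"APP"}

if __name__ == "__main__":
    for cls in ("Form.REST.Main", "Other.Util"):
        db = USER.class_database(cls)
        note = "replaced with each new image" if db in NON_DURABLE else "durable"
        print(cls, "->", db, f"({note})")
```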

Installer

Based on the above, our installer needs to:

- Create APP namespace/database

- Load code into the APP namespace

- Map our application classes to the USER namespace

- Perform all other installation steps (in this case, creating a CSP web app and a REST app)

```objectscript
Class MyApp.Hooks.Local
{

Parameter Namespace = "APP";

/// See generated code in zsetup+1^MyApp.Hooks.Local.1
XData Install [ XMLNamespace = INSTALLER ]
{
<Manifest>
  <Log Text="Creating namespace ${Namespace}" Level="0"/>
  <Namespace Name="${Namespace}" Create="yes" Code="${Namespace}" Ensemble="" Data="IRISTEMP">
    <Configuration>
      <!-- Non-durable code database inside the container -->
      <Database Name="${Namespace}" Dir="/usr/irissys/mgr/${Namespace}" Create="yes" MountRequired="true" Resource="%DB_${Namespace}" PublicPermissions="RW" MountAtStartup="true"/>
    </Configuration>
    <Import File="${Dir}Form" Recurse="1" Flags="cdk" IgnoreErrors="1" />
  </Namespace>
  <Log Text="End Creating namespace ${Namespace}" Level="0"/>

  <Log Text="Mapping to USER" Level="0"/>
  <Namespace Name="USER" Create="no" Code="USER" Data="USER" Ensemble="0">
    <Configuration>
      <Log Text="Mapping Form package to USER namespace" Level="0"/>
      <ClassMapping From="${Namespace}" Package="Form"/>
      <RoutineMapping From="${Namespace}" Routines="Form" />
    </Configuration>
    <CSPApplication Url="/" Directory="${Dir}client" AuthenticationMethods="64" IsNamespaceDefault="false" Grant="%ALL" Recurse="1" />
  </Namespace>
</Manifest>
}

/// This is a method generator whose code is generated by XGL.
/// Main setup method
/// set vars("Namespace")="TEMP3"
/// do ##class(MyApp.Hooks.Local).setup(.vars)
ClassMethod setup(ByRef pVars, pLogLevel As %Integer = 0, pInstaller As %Installer.Installer) As %Status [ CodeMode = objectgenerator, Internal ]
{
  Quit ##class(%Installer.Manifest).%Generate(%compiledclass, %code, "Install")
}

/// Entry point
ClassMethod onAfter() As %Status
{
  try {
    write "START INSTALLER",!
    set vars("Namespace") = ..#Namespace
    set vars("Dir") = ..getDir()
    set sc = ..setup(.vars)
    // Stop if the manifest failed instead of overwriting sc below
    $$$ThrowOnError(sc)
    set sc = ..createWebApp()
  } catch ex {
    set sc = ex.AsStatus()
    write !,$System.Status.GetErrorText(sc),!
  }
  quit sc
}

/// Create the REST web application if it does not exist yet
ClassMethod createWebApp(appName As %String = "/forms") As %Status
{
  set:$e(appName)'="/" appName = "/" _ appName
  #dim sc As %Status = $$$OK
  new $namespace
  set $namespace = "%SYS"
  if '##class(Security.Applications).Exists(appName) {
    set props("AutheEnabled") = $$$AutheUnauthenticated
    set props("NameSpace") = "USER"
    set props("IsNameSpaceDefault") = $$$NO
    set props("DispatchClass") = "Form.REST.Main"
    set props("MatchRoles")=":" _ $$$AllRoleName
    set sc = ##class(Security.Applications).Create(appName, .props)
  }
  quit sc
}

/// Directory the sources were checked out to, with a trailing separator
ClassMethod getDir() [ CodeMode = expression ]
{
##class(%File).NormalizeDirectory($system.Util.GetEnviron("CI_PROJECT_DIR"))
}

}
```

To create the non-durable database, I use a subdirectory of /usr/irissys/mgr, which is not persistent. Note that a call to ##class(%File).ManagerDirectory() returns the path to the durable directory, not the path to the internal container directory.
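The distinction can be checked mechanically: only paths under the container side of the host volume (/data in the CI configuration below) survive a container replacement. The sketch also shows why getDir() must return a directory ending in a separator, since the manifest builds paths such as ${Dir}Form by plain concatenation. The helper names and the /builds/test/app project path are hypothetical, and with_trailing_slash mimics only that one aspect of NormalizeDirectory().

```python
from pathlib import PurePosixPath

DURABLE_MOUNT = "/data"  # container side of --volume in the CI configuration


def is_durable(path: str, mount: str = DURABLE_MOUNT) -> bool:
    """True if the path is on the host volume, i.e. survives a new container."""
    # Component-wise check, so "/database" is not treated as inside "/data".
    return PurePosixPath(path).is_relative_to(mount)


def with_trailing_slash(path: str) -> str:
    """Only the trailing-separator part of %File.NormalizeDirectory."""
    return path if path.endswith("/") else path + "/"


if __name__ == "__main__":
    print(is_durable("/usr/irissys/mgr/APP/"))   # APP code database
    print(is_durable("/data/sys/mgr/user/"))     # USER data database
    print(with_trailing_slash("/builds/test/app") + "Form")
```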

Continuous delivery configuration

Check part VII for complete info; all we need to do is add two lines to our existing configuration: the --volume and --env ISC_DATA_DIRECTORY options.

```yaml
run image:
  stage: run
  environment:
    name: $CI_COMMIT_REF_NAME
    url: http://$CI_COMMIT_REF_SLUG.docker.eduard.win/index.html
  tags:
    - test
  script:
    - docker run -d
      --expose 52773
      --volume /InterSystems/durable/$CI_COMMIT_REF_SLUG:/data
      --env ISC_DATA_DIRECTORY=/data/sys
      --env VIRTUAL_HOST=$CI_COMMIT_REF_SLUG.docker.eduard.win
      --name iris-$CI_COMMIT_REF_NAME
      docker.eduard.win/test/docker:$CI_COMMIT_REF_NAME
      --log $ISC_PACKAGE_INSTALLDIR/mgr/messages.log
```

The volume argument mounts a host directory into the container, and the ISC_DATA_DIRECTORY variable tells InterSystems IRIS which directory to use. To quote the documentation:

When you run an InterSystems IRIS container using these options, the following occurs:

Updates

When the application evolves and a new version (container) is released, you may sometimes need to run some code. It could be pre/post-compile hooks, schema migrations, or unit tests, but it all boils down to running arbitrary code. That's why you need a framework that manages your application. In previous articles, I outlined a basic structure for such a framework, but it can, of course, be considerably extended to fit specific application requirements.
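A minimal sketch of the core of such an update step, in Python rather than ObjectScript and not the framework from the previous articles: each hook is keyed by version, runs once, and is recorded only after it succeeds. In a container setup, the record of applied versions (here a plain set, a hypothetical stand-in) would have to live in durable storage such as the USER database; otherwise every new container would rerun all hooks.

```python
from typing import Callable

Hook = Callable[[], None]


def version_key(version: str) -> tuple[int, ...]:
    """Compare versions numerically, so that 1.10 sorts after 1.2."""
    return tuple(int(part) for part in version.split("."))


def run_updates(hooks: dict[str, Hook], applied: set[str]) -> list[str]:
    """Run every hook not yet recorded in `applied`, oldest version first.

    `applied` is updated only after a hook returns, so a hook that raises
    is not recorded and will be attempted again on the next start.
    """
    ran = []
    for version in sorted((v for v in hooks if v not in applied), key=version_key):
        hooks[version]()
        applied.add(version)
        ran.append(version)
    return ran


if __name__ == "__main__":
    log = []
    hooks = {
        "1.0": lambda: log.append("create tables"),
        "1.2": lambda: log.append("add index"),
        "1.10": lambda: log.append("migrate data"),
    }
    applied = {"1.0"}  # already ran in the previous container
    print(run_updates(hooks, applied))
    print(run_updates(hooks, applied))
```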

Conclusion

Creating a containerized application takes some thought, but InterSystems IRIS offers several features that make this process easier.


Comments

Great article, Eduard, thanks!

It's not clear, though, what I need to have on a production host initially. In my understanding, an installed Docker and a container with IRIS are enough. In that case, how do Durable %SYS and application data (USER namespace) appear on the production host for the first time?

what do I need to have on a production host initially?

First of all you need to have:

- FQDN

- GitLab server

- Docker Registry

- InterSystems IRIS container inside your Docker Registry

They could be anywhere as long as they are accessible from the production host.

After that, on the production host (and on every separate host you want to use), you need to have:

- Docker

- GitLab Runner

- Nginx reverse proxy container

After all these conditions are met, you can create a Continuous Delivery configuration in GitLab, and it will build and deploy your container to the production host.

In that case how Durable %SYS and Application data (USER NAMESPACE) appear on the production host for the first time?

When an InterSystems IRIS container is started in Durable %SYS mode, it checks the directory for durable data. If the directory does not exist, InterSystems IRIS creates it and copies the data from inside the container. If the directory already exists and contains databases, configuration, etc., it is used as-is.

By default, InterSystems IRIS keeps all of its configuration inside the container.
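The first-start behaviour described above can be modelled in a few lines of Python. This is a sketch of the logic as described, not InterSystems IRIS's implementation; init_durable_dir and its arguments are hypothetical, and treating the mere existence of the directory as the only criterion is a simplification.

```python
import shutil
from pathlib import Path


def init_durable_dir(durable_dir: str, image_dir: str) -> bool:
    """Model of the first start in Durable %SYS mode.

    If `durable_dir` does not exist, copy the instance data shipped inside
    the image (`image_dir`) there and return True. Otherwise reuse the
    existing directory untouched and return False.
    """
    target = Path(durable_dir)
    if target.exists():
        return False
    # copytree also creates any missing parent directories of the target.
    shutil.copytree(image_dir, target)
    return True
```

On the first `docker run` the host folder is populated from the image; on every later run, including runs of newer images, the existing configuration and databases win.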

Thanks, Eduard!

But why GitLab? Can I manage all the Continuous Delivery with, say, GitHub?

GitHub does not offer CI functionality, but it can be integrated with a CI engine.

Another question: can I deploy a container manually on the production host with a reasonable number of management steps, or is CI the only option?

can I deploy a container manually

Sure. To deploy a container manually, it's enough to execute this command:

```shell
docker run -d --expose 52773 \
  --volume /InterSystems/durable/master:/data \
  --env ISC_DATA_DIRECTORY=/data/sys \
  --name iris-master \
  docker.eduard.win/test/docker:master \
  --log $ISC_PACKAGE_INSTALLDIR/mgr/messages.log
```

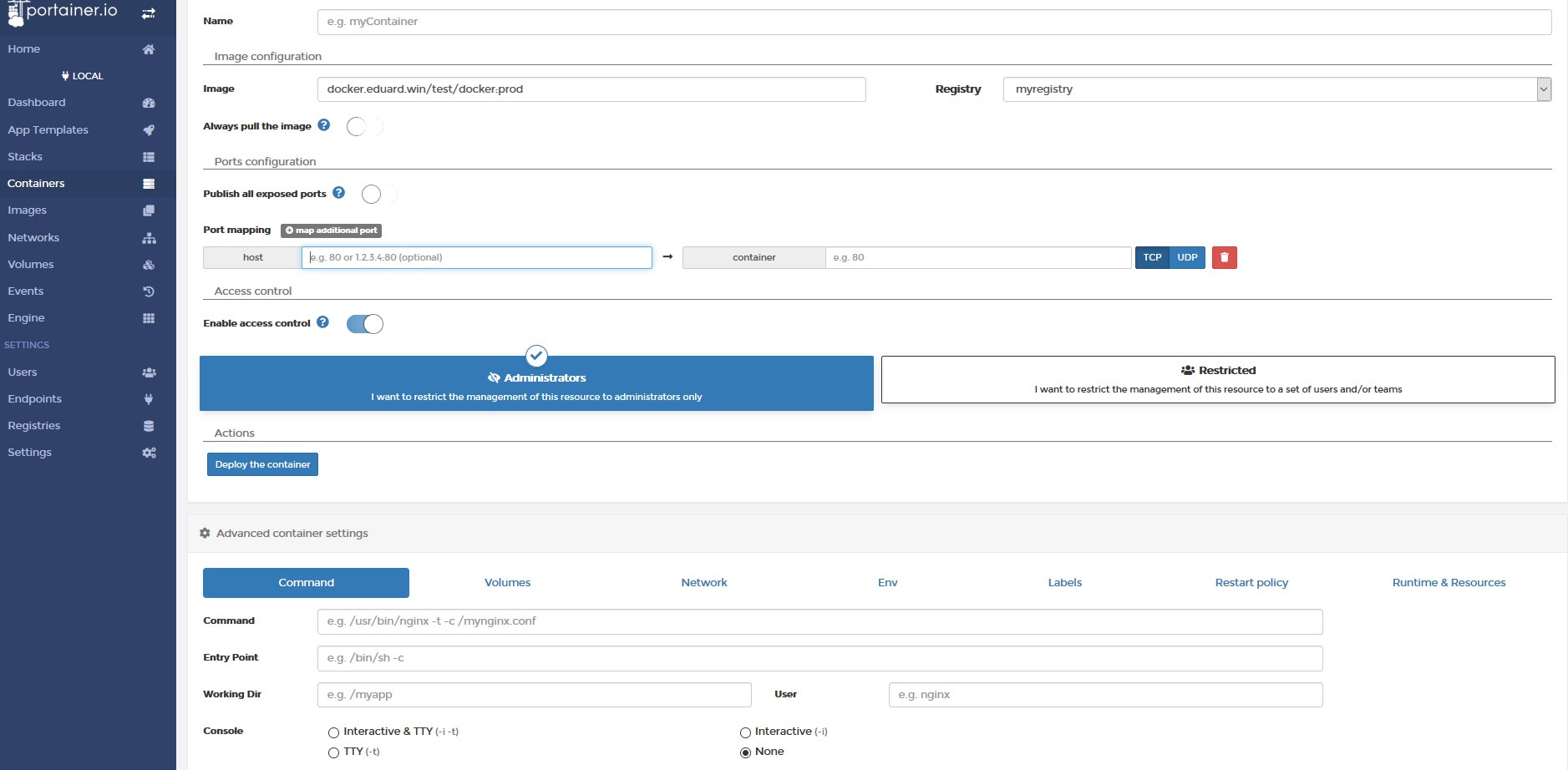
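When the same command is needed for several branches, it can help to build it programmatically. The Python sketch below reproduces the options of the command above as an argument list; docker_run_args is a hypothetical helper, its defaults are taken from the article, and the options after the image name (such as --log) are left out. The list could be passed to subprocess.run(args, check=True) to start the container.

```python
import shlex


def docker_run_args(ref_name: str, ref_slug: str,
                    durable_root: str = "/InterSystems/durable",
                    image: str = "docker.eduard.win/test/docker") -> list[str]:
    """Arguments for the article's docker run command, for one branch."""
    return [
        "docker", "run", "-d",
        "--expose", "52773",
        # host directory -> /data inside the container (Durable %SYS)
        "--volume", f"{durable_root}/{ref_slug}:/data",
        # tell InterSystems IRIS to keep its durable data under /data/sys
        "--env", "ISC_DATA_DIRECTORY=/data/sys",
        "--name", f"iris-{ref_name}",
        f"{image}:{ref_name}",
    ]


if __name__ == "__main__":
    args = docker_run_args("master", "master")
    print(shlex.join(args))
```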

Alternatively, you can use GUI container management tools to configure a container you want to deploy. For example, here's the Portainer web interface; you can define volumes, variables, etc. there:

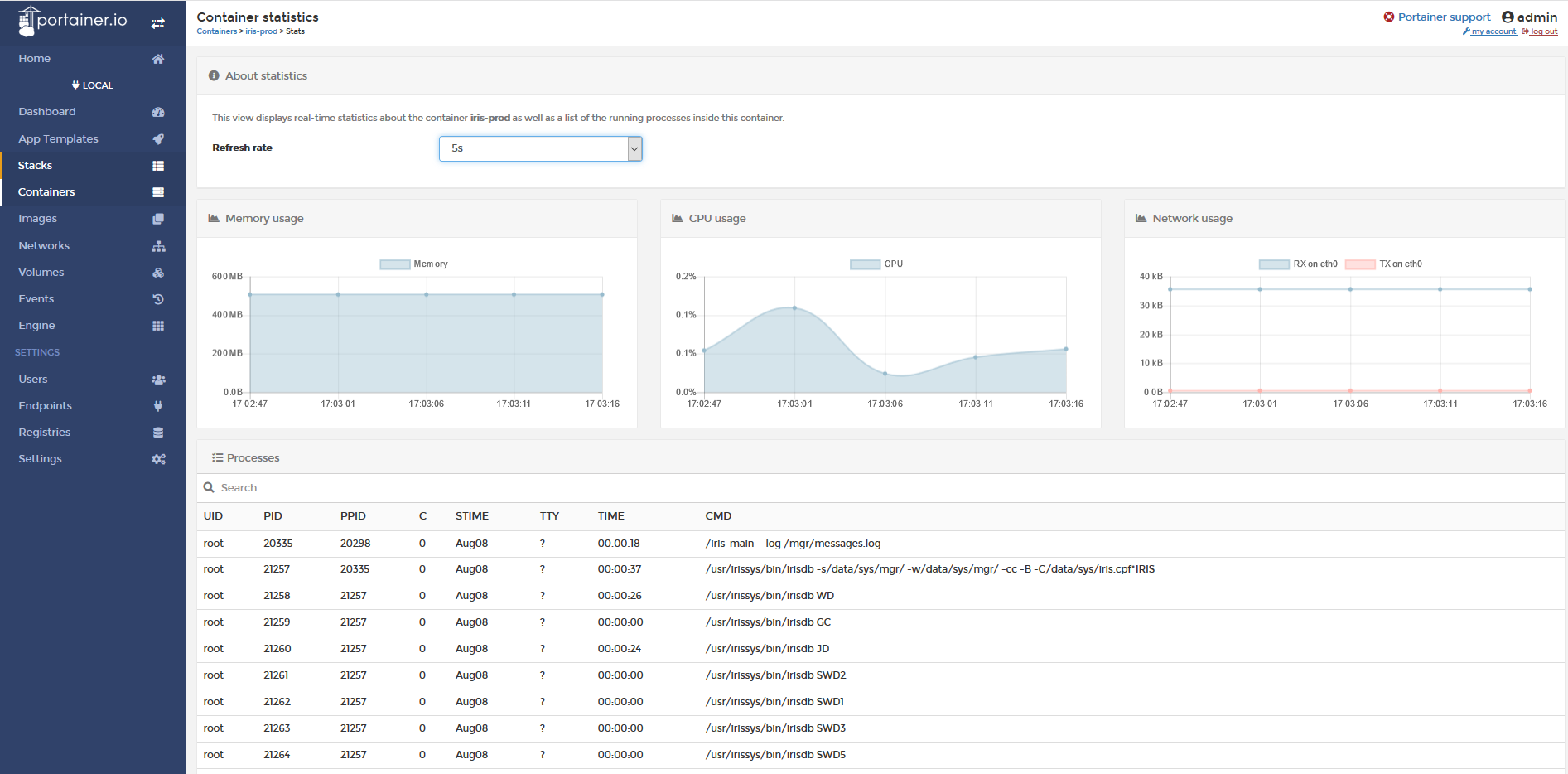

It also allows browsing the registry and inspecting your running containers, among other things:

Wow. Do you have any experience with Portainer + IRIS containers? I think it deserves a new article.

I ran InterSystems IRIS containers via Rancher and Portainer; it's all the same thing, a GUI over docker run.

Is it possible to add the InterSystems registry (https://containers.intersystems.com/) to Portainer with authentication?

Excellent work! Way to go!

Thank you, Luca!

Thanks for sharing, Eduard. That is a good demonstration of the use of --volume in the docker run command.

When bringing up multiple containers of the same InterSystems IRIS image (for example, a number of sharding nodes), the system administrator may consider organising files/folders on the host so that some are shared among instances but read-only (such as the license key), and others are isolated yet temporary. Maybe we can go further and enhance the docker run command in this direction, so that the same command with a small change (such as the port number and the container name) is enough to start up a cluster of instances quickly.

I think you'll need a full-fledged orchestrator for that. Docker run always starts one container, but several volumes could be mounted, some of them RW, some RO. I'm not sure about mounting the same folder into several containers (again, orchestration).

You can also use the --volumes-from argument to mount volumes from one container into another.

That's right. Maybe we can consider running docker-compose as an option for such an orchestration.
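Short of a full orchestrator or docker-compose, the idea from this thread can be sketched as a generator of docker run commands in which only the container name, host port, and durable folder vary per instance, while one host folder is mounted read-only into every container. The helper instance_args, the /InterSystems/shared host folder, and the /shared mount point are hypothetical; --publish and the :ro volume suffix are standard docker run syntax. Each command still starts a single container, so health checks, restarts, and networking remain an orchestrator's job.

```python
import shlex


def instance_args(index: int, image: str, shared_dir: str, durable_root: str,
                  base_port: int = 52773) -> list[str]:
    """docker run arguments for instance number `index` of one image."""
    return [
        "docker", "run", "-d",
        "--name", f"iris-node{index}",
        # the per-instance differences: name, host port, durable folder
        "--publish", f"{base_port + index}:52773",
        # shared by all instances, mounted read-only
        "--volume", f"{shared_dir}:/shared:ro",
        # private to this instance, read-write
        "--volume", f"{durable_root}/node{index}:/data",
        "--env", "ISC_DATA_DIRECTORY=/data/sys",
        image,
    ]


if __name__ == "__main__":
    for i in range(1, 4):
        print(shlex.join(instance_args(i, "docker.eduard.win/test/docker:master",
                                       "/InterSystems/shared", "/InterSystems/durable")))
```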