Yes, this is a very long story. :)

But in any case, there is a [better-spelled] system method that returns the endianness flag from kernel code:

```
DEVLATEST:18:09:56:SAMPLES>w $system.Version.IsBigEndian()
0
```
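For comparison outside Caché, here is a minimal Python sketch of the same check (a Python analogy, not an ObjectScript API). It packs the integer 1 in native byte order and looks at which byte comes first. A result of 0 / False means little-endian, which is what the call above reports.

```python
import struct
import sys


def is_big_endian():
    """Return True if the host stores multi-byte integers most-significant byte first."""
    # "=H" packs an unsigned 16-bit integer in native byte order with standard size.
    # A little-endian host writes b"\x01\x00", a big-endian host writes b"\x00\x01".
    return struct.pack("=H", 1) == b"\x00\x01"


print(int(is_big_endian()))  # 0 on little-endian hosts such as x86-64
print(sys.byteorder)         # the standard library reports the same fact as "little" or "big"
```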


As you may have noticed, JSON support is still a work in progress, and each new version is better than the previous one. In this case 2016.3 will be better than 2016.2 because it adds support for $toJSON in result sets (though I ran this on "latest", so the results might look different than in 2016.3FT).

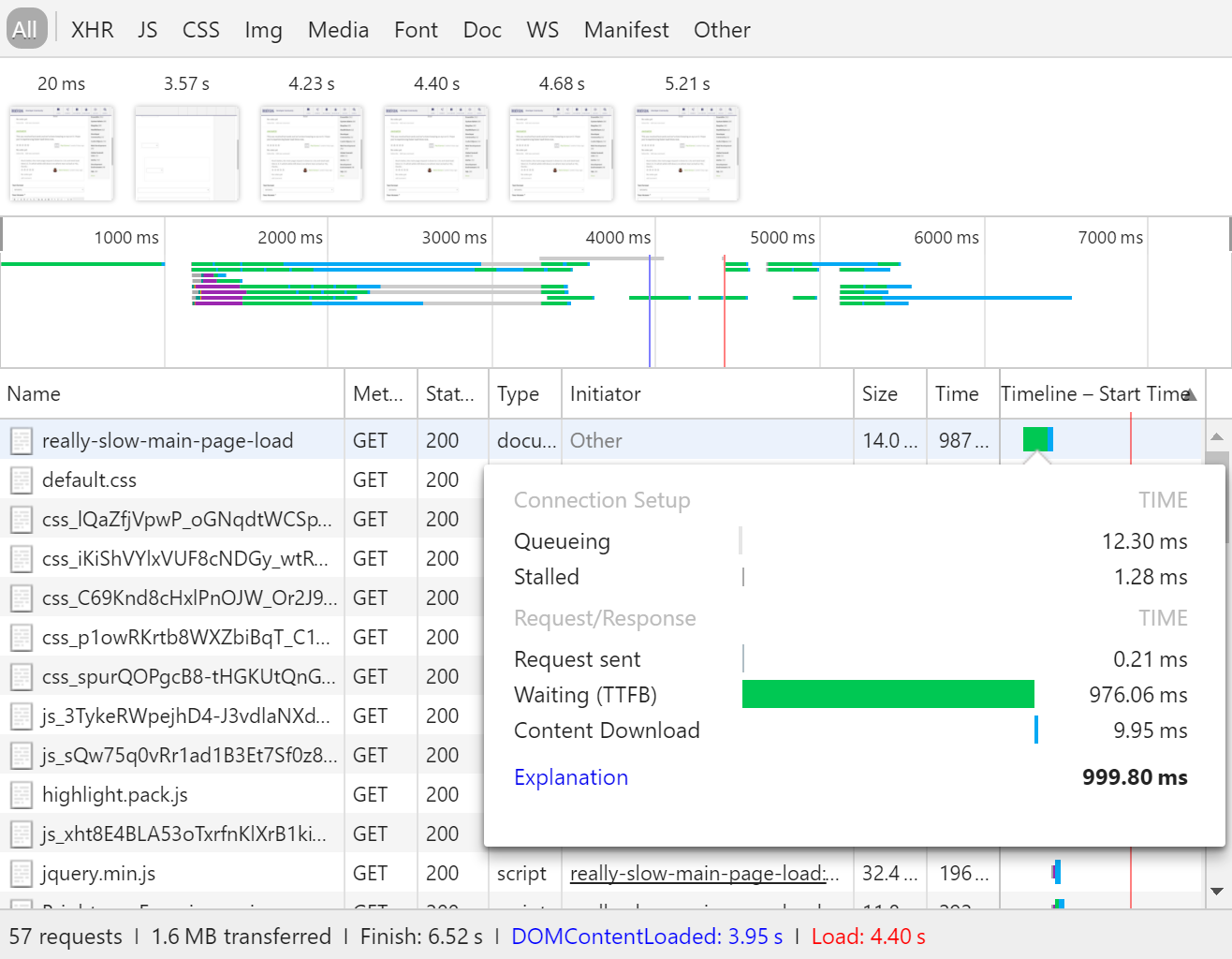
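To illustrate what serializing a result set to JSON amounts to, here is a minimal Python sketch using the standard `sqlite3` and `json` modules. It is an analogy only: it does not show Caché's $toJSON, and the `person` table is hypothetical. Each row becomes an object keyed by the column names taken from the cursor metadata.

```python
import json
import sqlite3


def resultset_to_json(cursor):
    """Serialize all remaining rows of a DB-API cursor as a JSON array of objects."""
    # cursor.description holds one 7-item tuple per column; item 0 is the column name.
    columns = [col[0] for col in cursor.description]
    return json.dumps([dict(zip(columns, row)) for row in cursor.fetchall()])


# Hypothetical sample table, created in memory for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE person (name TEXT, age INTEGER)")
conn.executemany("INSERT INTO person VALUES (?, ?)", [("Ann", 31), ("Bob", 42)])
cur = conn.execute("SELECT name, age FROM person ORDER BY name")
print(resultset_to_json(cur))
# [{"name": "Ann", "age": 31}, {"name": "Bob", "age": 42}]
```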

Overseas, for Mark, it's still as bad as the original 6 seconds. At the moment the total load time is 4 seconds, though the time-to-first-byte (TTFB) for the first request is 0.9 seconds (not instant, but good enough).

The page is still heavy (57 requests and a 1.6 MB payload), and the Drupal developers should pay attention to the large number of files involved.

I'd guess it would be 8192.

[Hint: see %sySecurity.inc]

I'm curious: what is the point of writing the longer ##class(%Object).$new() instead of the simple {}?

And what version of Caché is it? Is it also 32-bit?

Is it a 32-bit or a 64-bit driver? A "User DSN" or a "System DSN"?

Disclaimer: I've never worked with MultiValue Basic till today.

The problem with the table you were referring to (http://docs.intersystems.com/latest/csp/docbook/DocBook.UI.FrameSet.cls?KEY=GVRF_basicfeatures) is that it says quite the opposite: the Roman numeral conversion code ('NR') is not supported in the Caché OCONV implementation.

Though if the code were supported, it would be callable from ObjectScript mode via syntax like:

You missed the extension of the file "data.csv" used in the example. CSV stands for "Comma-Separated Values", which is the simplest way to export Excel-like data to a text file. In this case it was apparently exported with a Russian locale, where the "comma" is actually ";".
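Here is a minimal Python sketch (an illustration, not Caché code) of reading such a file. The helper `read_csv_text` and its header-based guess are hypothetical: it picks ";" when the header line contains more semicolons than commas, which is typical of Excel exports in locales whose list separator is ";", and otherwise falls back to ",". Passing `delimiter` explicitly skips the guess.

```python
import csv
import io


def read_csv_text(text, delimiter=None):
    """Parse CSV text into a list of rows (hypothetical helper).

    If delimiter is None, guess between ';' and ',' by counting them in the
    header line. Note that decimal commas such as "1,5" stay inside the field.
    """
    if delimiter is None:
        header = text.splitlines()[0] if text else ""
        delimiter = ";" if header.count(";") > header.count(",") else ","
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))


sample = "name;price\nApple;1,5\nPear;2,0\n"
print(read_csv_text(sample))
# [['name', 'price'], ['Apple', '1,5'], ['Pear', '2,0']]
```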

Ok, here is the "simplest"

(Well, I'm joking: this is the standard preamble for a self-loading INT file.)

Would this "Quick Reference" be of any help here?

OK, Eduard, let me elaborate using a fictional, idealized picture.

As a good citizen, you always run all the relevant unit tests for the changed classes before committing to VCS. So what would you choose before a commit: running the unit tests for the whole package (which might take hours) or only for your project with the relevant classes (which takes a few minutes)?

Although I do foresee the need to run all unit tests in the current namespace or for a selected package, from a developer's perspective I need to run only those unit tests that are connected to the project I'm currently working on. For that I use a Studio project (or would use separate Atelier workspaces if and when I migrate to Atelier).

So IMVHO the proper approach is to start from the project items and then add wider collections (one or more packages, or the whole namespace).
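The scoping idea can be sketched in Python as follows. Everything here is hypothetical and only illustrates the approach: `test_map` stands in for whatever records which classes each test exercises, and the package of a class is taken to be everything before its last dot. The narrowest scope ("project") is the default, and wider scopes are opt-in.

```python
def package_of(class_name):
    """Return the package part of a dotted class name ('' if there is none)."""
    return class_name.rsplit(".", 1)[0] if "." in class_name else ""


def select_tests(test_map, project_classes, scope="project"):
    """Pick the tests to run, starting from the project items.

    test_map: hypothetical mapping of test name -> set of class names it exercises.
    scope: "project" (only the project's classes), "package" (any class in the
    same packages), or "namespace" (every test).
    """
    if scope == "namespace":
        return sorted(test_map)
    project = set(project_classes)
    if scope == "project":
        def wanted(cls):
            return cls in project
    elif scope == "package":
        packages = {package_of(c) for c in project}

        def wanted(cls):
            return package_of(cls) in packages
    else:
        raise ValueError(f"unknown scope: {scope}")
    return sorted(t for t, classes in test_map.items() if any(wanted(c) for c in classes))


test_map = {
    "Test.Parser": {"App.Parser"},
    "Test.Lexer": {"App.Lexer"},
    "Test.Report": {"Rpt.Builder"},
}
print(select_tests(test_map, ["App.Parser"]))             # ['Test.Parser']
print(select_tests(test_map, ["App.Parser"], "package"))  # ['Test.Lexer', 'Test.Parser']
```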

OK, OK, good point about the PPG: it drops the reference to the object, and a simple local variable works better because it does not serialize the OREF to a string.

The only case where it worked for me was when the parent call was still present on the stack and still held a reference to the originally created object. That is exactly the case where a %-named singleton would work (i.e. you open the object at the top of the stack, and it's still visible in the nested calls).

So yes, we'd better get rid of the PPG and use %-named variables in our CPM code (pull requests welcome).
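The lifetime problem can be shown with a rough Python analogy (not ObjectScript semantics): a weak reference behaves like an OREF stored as a string, because it does not keep the object alive by itself. Nested calls can reach the object only while some caller up the stack still holds a real reference. The `Package` class and `registry` dict are hypothetical.

```python
import gc
import weakref


class Package:
    """Hypothetical object we want to share between nested calls."""


registry = {}


def open_package():
    pkg = Package()
    # Store only a weak reference: like an OREF serialized into a PPG,
    # it does not count as a reference that keeps the object alive.
    registry["current"] = weakref.ref(pkg)
    return pkg


def nested_call():
    """Return True if the shared object is still reachable."""
    return registry["current"]() is not None


top = open_package()  # the caller at the top of the stack holds a strong reference
print(nested_call())  # True: the object is still alive
del top               # the last strong reference goes away
gc.collect()          # only needed on non-refcounting Python implementations
print(nested_call())  # False: the weak reference is now dead
```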

Here are the assumptions:

- you cannot implement a singleton that works across jobs, only within the same process;

- to share an instance of the object you can employ a %-named variable or a process-private global.

Here is a sample using a PPG, which is easy to convert to a %-named variable by redefining the CpmVar macro:

https://github.com/cpmteam/CPM/blob/master/CPM/Main.cls.xml#L33
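As a rough Python analogy of the same-process-only singleton (this is not the CPM code): a module-level variable lives for the whole process, holds a real reference visible to all nested calls, and is not shared with other processes, much like a %-named variable. The `_CpmState` class is a hypothetical stand-in for the shared object.

```python
import os


class _CpmState:
    """Hypothetical stand-in for the shared CPM object."""

    def __init__(self):
        self.pid = os.getpid()
        self.settings = {}


# Module-level slot: a strong reference that lives as long as the process
# and is invisible to other processes.
_instance = None


def get_instance():
    """Return the per-process singleton, creating it on first use."""
    global _instance
    if _instance is None or _instance.pid != os.getpid():
        # A forked child inherits the parent's copy, so create a fresh one per process.
        _instance = _CpmState()
    return _instance


a = get_instance()
a.settings["verbose"] = True
print(get_instance() is a, get_instance().settings)  # True {'verbose': True}
```

A child process started with `multiprocessing` ends up with its own instance, which mirrors the "same process only" limitation above.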

OMG! Looks a little bit over-designed.

Glad you've mentioned it.

We have plenty of open-source projects on several of our GitHub hubs (the central one, intersystems, plus the Russian one and the Spanish one). Unfortunately, nobody has yet created a msgpack implementation for Caché or Ensemble.

If you want to implement such a component, we will gladly republish it via those hubs. :)

P.S.

It looks like it would be easy to implement something similar to the Python library, especially given the native JSON support in 2016.x.
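To give a sense of how small the format is, here is a minimal encoder sketch in Python. It is not ObjectScript and not the `msgpack` library's API; it covers only nil, booleans, integers, float64, UTF-8 strings, arrays, and maps, using the type codes from the MessagePack specification. Binary and extension types, and decoding, are left out.

```python
import struct


def packb(obj):
    """Encode a subset of MessagePack: nil, bool, int, float, str, list/tuple, dict."""
    if obj is None:
        return b"\xc0"
    if obj is True:  # check bools before ints: bool is a subclass of int
        return b"\xc3"
    if obj is False:
        return b"\xc2"
    if isinstance(obj, int):
        return _pack_int(obj)
    if isinstance(obj, float):
        return b"\xcb" + struct.pack(">d", obj)  # float64, big-endian
    if isinstance(obj, str):
        data = obj.encode("utf-8")
        n = len(data)
        if n < 32:
            return bytes([0xA0 | n]) + data  # fixstr
        if n < 0x100:
            return b"\xd9" + struct.pack(">B", n) + data  # str8
        if n < 0x10000:
            return b"\xda" + struct.pack(">H", n) + data  # str16
        return b"\xdb" + struct.pack(">I", n) + data  # str32
    if isinstance(obj, (list, tuple)):
        return _header(len(obj), 0x90, b"\xdc", b"\xdd") + b"".join(packb(x) for x in obj)
    if isinstance(obj, dict):
        body = b"".join(packb(k) + packb(v) for k, v in obj.items())
        return _header(len(obj), 0x80, b"\xde", b"\xdf") + body
    raise TypeError(f"unsupported type: {type(obj).__name__}")


def _header(n, fix_base, code16, code32):
    """Length header for arrays (fixarray/array16/array32) and maps (fixmap/map16/map32)."""
    if n < 16:
        return bytes([fix_base | n])
    if n < 0x10000:
        return code16 + struct.pack(">H", n)
    return code32 + struct.pack(">I", n)


def _pack_int(n):
    if 0 <= n < 0x80:
        return bytes([n])  # positive fixint
    if -32 <= n < 0:
        return struct.pack(">b", n)  # negative fixint (0xe0..0xff)
    if n >= 0:
        for code, fmt, limit in ((0xCC, ">B", 1 << 8), (0xCD, ">H", 1 << 16),
                                 (0xCE, ">I", 1 << 32), (0xCF, ">Q", 1 << 64)):
            if n < limit:
                return bytes([code]) + struct.pack(fmt, n)
    else:
        for code, fmt, limit in ((0xD0, ">b", 1 << 7), (0xD1, ">h", 1 << 15),
                                 (0xD2, ">i", 1 << 31), (0xD3, ">q", 1 << 63)):
            if n >= -limit:
                return bytes([code]) + struct.pack(fmt, n)
    raise OverflowError("integer out of MessagePack range")


print(packb({"compact": True, "schema": 0}).hex())
# 82a7636f6d70616374c3a6736368656d6100
```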

Good question. On docs.intersystems.com there is a set of documentation accompanying each version of Caché released so far. For each version you can find the "Release Notes", which contain the list of changes introduced to the language, among other changes (see the Release Notes for 2015.2 as an example).

You would need to go and collect all the changes for all releases... if the documentation team had not already done it for you :)

The most recent release notes include all the changes since Caché 5.1. All you need to do now is go through the newest list (from 2016.2FT) and find the changes relevant to the ObjectScript compiler, or deprecation warnings.

Could you please elaborate, or provide some screenshots? I, for example, have no experience with the NetBeans IDE, so I don't understand what is special about its code organization.

I'm a bit confused by the set of tools proposed for "code coverage", so let me explain why...

Yes, ZBREAK is quite powerful, and ages ago, when there was not yet any source-level MAC/CLS debugging in Studio, people implemented all sorts of nasty things using clever ZBREAK expressions and some post-processing of the gathered data. I myself, in a prior life, even implemented a "call tree" utility that gathered information about the routines called while executing an arbitrary expression. And ZBREAK could help with many things if there were no kernel support...

But there is kernel support for profiling, which gathers all the information necessary [for code coverage], and which you can see used in ^%SYS.MONLBL. You are not actually interested in the timings for every line, but you do want to know that this particular line was executed and that one was not.

I'm also confused that you mentioned the UnitTest infrastructure as key to the solution; actually, it's not necessary. Code coverage utilities usually target not only the unit-test code but the whole code base of your system. The assumption is that you have some set of regression or unit tests, not necessarily using the UnitTest.Manager infrastructure directly (possibly some other test framework), and the goal of running them is to collect information about which lines in the core of your system were covered. This is quite independent of the test infrastructure, IMVHO.
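As a Python analogy (not the Caché kernel profiler or ^%SYS.MONLBL), the standard-library hook `sys.settrace` reports each executed line without any timing, and it does not care which test framework drove the execution. The sketch below records executed line numbers per function name; uncovered lines are the complement of this set.

```python
import sys
from collections import defaultdict


def collect_line_coverage(func, *args, **kwargs):
    """Run func(*args, **kwargs) and return {function name: set of executed line numbers}."""
    executed = defaultdict(set)

    def tracer(frame, event, arg):
        if event == "line":
            executed[frame.f_code.co_name].add(frame.f_lineno)
        return tracer  # keep receiving line events inside each new frame

    old = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(old)  # always restore the previous tracer
    return dict(executed)


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


coverage = collect_line_coverage(classify, 5)
first = classify.__code__.co_firstlineno
# Offsets from the "def" line; offset 2 (return "negative") never ran, so it is absent.
print(sorted(line - first for line in coverage["classify"]))
```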

So for me, a "code coverage" tool should:

On the other hand, if we treated SAMPLES and ENSDEMO the same way, and %ALL mapping worked in SAMPLES the same way as in the other namespaces, then leaving DOCBOOK alone would not make much sense :)

I'd prefer %ALL to work everywhere, without exceptions; that would significantly simplify global mapping creation in a certain package manager you may be aware of.

Or, better yet, disable this mode (removal of extra line breaks) globally in Outlook:

[This is in Russian, sorry, but the location of this element in the English version is the same, so you'll find it easily.]

Feel free, Wolf, to request these features via the GitHub repo issues, and, if you are brave enough, pull requests are very welcome! This is the open-source model: things can be changed more easily (if you really want them changed).

Nobody would complain if you simply provided a direct link to the book on Amazon.

My guess is that this is the result of some source control hook activity (Deltanji?)...

Saved but not compiled.

Very nice tools, but I disagreed with the majority of the fatal problems reported (especially in my projects :)). And who said that I can't write $$$MACRO as a single statement? Where did you get that from?

So... it would require a lot of fine-tuning to work properly according to the tastes of experienced Caché ObjectScript programmers. Java idioms ported as-is to ObjectScript just don't work.

Returning to the original question, though, I tend to agree with the recommendation to use ^GLOBUFF, because of the dynamic nature of data in an Ensemble configuration. There is no hard data, but there are consistent statistics.

^GLOBUFF is a nice tool for showing the current distribution of cached data (given the current memory limits, past activity, and the current algorithm), but what is more important is to estimate the maximum working set of your application's own globals, which might be quite big if you have a transaction-heavy application.

[It is worth mentioning that in the ^GLOBUFF case you get some quite unique information: the percentage of memory used by process-private variables, which is essential information for SQL-heavy applications.]

But I always find it interesting to know how much memory would be enough to keep all your globals in memory. Use ^%GSIZE:

So we see that 5 million 8 KB blocks are needed to hold the whole application dataset; this can serve as an upper estimate of the memory your application needs. [I intentionally omit the routine buffer here, which is usually negligible compared to the database buffers: it's very hard to find application code that, in its OBJ form, would occupy multiple gigabytes, or even a single GB.]
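The arithmetic behind that upper estimate, as a small Python sketch. It assumes 8 KB means 8192 bytes and uses the rounded "5 million blocks" figure from the text; `buffer_estimate` is a hypothetical helper, not a Caché utility.

```python
def buffer_estimate(block_count, block_size_bytes=8192):
    """Upper estimate of global buffer memory needed to cache every block.

    block_count: total number of blocks reported by ^%GSIZE, summed over globals.
    Returns (bytes, decimal GB, binary GiB).
    """
    total = block_count * block_size_bytes
    return total, total / 10**9, total / 2**30


total, gb, gib = buffer_estimate(5_000_000)
print(f"{total:,} bytes ~ {gb:.2f} GB ~ {gib:.2f} GiB")
# 40,960,000,000 bytes ~ 40.96 GB ~ 38.15 GiB
```

That is roughly 40 GB, which is where a 40 GB global buffer allocation comes from.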

All in all, for the case shown above we started with 40 GB of global buffers for this application, because we had plenty of RAM.

OK, here is the simpler route:

1. If you have performance problems, run pButtons and call the WRC; they will get the performance team involved and explain to you what you see :);

2. If you have a budget for new hardware, see item #1 above and ask the WRC for advice.