Clear filter

Article

Evgeny Shvarov · Feb 9, 2021

Hi developers!

Recently we announced the preview of Embedded Python technology in InterSystems IRIS.

Check the Sneak Peak video by @Robert.Kuszewski.

Embedded python gives the option to load and run python code in the InterSystems IRIS server. You can either use library modules from Python pip, like numpy, pandas, etc, or you can write your own python modules in the form of standalone py files.

So once you are happy with the development phase of the IRIS Embedded Python solution there is another very important question of how the solution could be deployed.

One of the options you can consider is using the ZPM Package manager which is described in this article.

I want to introduce you a template repository that introduces a deployable ZPM module and shows how to build such a module.

The example is very simple and it contains one sample.py, that demonstrates the usage of pandas and NumPy python libs and the test.cls objectscript class that makes calls to it.

The solution could be installed with ZPM as:

zpm "install iris-python-template"

NB: Make sure the IRIS you install the module contains an Embedded Python preview code. E.g. you can use the image:

intersystemsdc/iris-ml-community:2020.3.0.302.0-zpm

With commands:

docker run --rm --name my-iris -d --publish 9091:1972 --publish 9092:52773 intersystemsdc/iris-ml-community:2020.3.0.302.0-zpm

docker exec -it my-iris iris session IRIS

USER>zpm "install iris-python-template"

[iris-python-template] Reload START

...

[iris-python-template] Activate SUCCESS

The module installs sample.py python file and titanic.csv sample file along with test.cls to the system.

E.g. sample.py exposes meanage() function which accepts the csv file path and calculates the mean value using numpy and pandas llibraries.

test.cls objectscript class loads the python module with the following line code:

set tt=##class(%SYS.Python).Import("sample")

then provides the path to csv file and collects the result of the function.

Here is how you can test the installed module:

USER>d ##class(dc.python.test).Today()

2021-02-09

USER>d ##class(dc.python.test).TitanicMeanAge()

mean age=29.69911764705882

USER>

OK! Next, is how to deploy Embedded Python modules?

You can add the following line to module.xml:

<FileCopy Name="python/" Target="${mgrdir}python/"/>

the line copies all python files from the python folder of the repository to the python folder inside /mgr folder of IRIS installation.

This lets the python modules then be imported from ObjectScript via ##class(%SYS.Python).Import() method.

Also if you want the data files to be packed into the ZPM module check another FileCopy line in the module that imports the data folder from the repository along with titanic.csv into the package:

<FileCopy Name="data/" Target="${mgrdir}data/"/>

this is it!

Feel free to use the template as a foundation for your projects with Embedded Python for IRIS!

Any questions and comments are appreciated! Hi Evgeny!

I tried embedded Python in my multi model contest app but used an ugly approach to deploy Python code. I didn't realize that ZPM could do this for me... Nice tip! Thanks, Jose!

Yes, indeed ZPM option of delivering files to a target IRIS installation looks elegant and robust. Maybe it could be used not only for Embedded python but e.g. for jar-files delivery and data. @Yuri.Gomes, what do you think? Nice option! OK. I did node.js, @Yuri.Gomes Java is yours. A suggestion is allows ZPM to copy from a HTTP URL like a github address.

Announcement

Anastasia Dyubaylo · May 8, 2020

Hi Community,

We're pleased to invite you to join the upcoming InterSystems IRIS 2020.1 Tech Talk: Integrated Development Environments on May 19 at 10:00 AM EDT!

In this edition of InterSystems IRIS 2020.1 Tech Talks, we put the spotlight on Integrated Development Environments (IDEs). We'll talk about InterSystems latest initiative with the open source ObjectScript extension to Visual Studio Code, discussing what workflows are particularly suited to this IDE, how development, support, and enhancement requests will work in an open source ecosystem, and more.

Speakers:🗣 @Raj.Singh5479, InterSystems Product Manager, Developer Experience🗣 @Brett.Saviano, InterSystems Developer

Date: Tuesday, May 19, 2020Time: 10:00 AM EDT

➡️ JOIN THE TECH TALK!

Additional Resources:

ObjectScript IDEs [Documentation]

Using InterSystems IDEs [Learning Course]

Hi Community!Join the Tech Talk today. 😉 ➡️ You still have time to REGISTER.

Announcement

Olga Zavrazhnova · Aug 25, 2020

Hi Community, As you may know, on Global Masters you can redeem a consultation with InterSystems expert on any InterSystems product: InterSystems IRIS, IRIS for Health, Interoperability (Ensemble), IRIS Analytics (DeepSee), Caché, HealthShare.And we have exciting news for you: now these consultations available in the following languages: English, Portuguese, Russian, German, French, Italian, Spanish, Japanese, Chinese. Also! The duration is extended to 1.5 hours for your deep dive into the topic.

If you are interested, don't hesitate to redeem the reward on Global Masters!

If you are not a member of Global Masters yet - you are very welcome to join here (click on the InterSystems login button and use your InterSystems WRC credentials). To learn more about Global Masters read this article: Global Masters Advocate Hub - Start Here!

See you on InterSystems Global Masters today! 🙂

Article

Timothy Leavitt · Aug 27, 2020

Introduction

In a previous article, I discussed patterns for running unit tests via the InterSystems Package Manager. This article goes a step further, using GitHub actions to drive test execution and reporting. The motivating use case is running CI for one of my Open Exchange projects, AppS.REST (see the introductory article for it here). You can see the full implementation from which the snippets in this article were taken on GitHub; it could easily serve as a template for running CI for other projects using the ObjectScript package manager.

Features demonstrated implementation include:

Building and testing an ObjectScript package

Reporting test coverage measurement (using the TestCoverage package) via codecov.io

Uploading a report on test results as a build artifact

The Build Environment

There's comprehensive documentation on GitHub actions here; for purposes of this article, we'll just explore the aspects demonstrated in this example.

A workflow in GitHub actions is triggered by a configurable set of events, and consists of a number of jobs that can run sequentially or in parallel. Each job has a set of steps - we'll go into the details of the steps for our example action in a bit. These steps consist of references to actions available on GitHub, or may just be shell commands. A snippet of the initial boilerplate in our example looks like:

# Continuous integration workflow

name: CI

# Controls when the action will run. Triggers the workflow on push or pull request

# events in all branches

on: [push, pull_request]

# A workflow run is made up of one or more jobs that can run sequentially or in parallel

jobs:

# This workflow contains a single job called "build"

build:

# The type of runner that the job will run on

runs-on: ubuntu-latest

env:

# Environment variables usable throughout the "build" job, e.g. in OS-level commands

package: apps.rest

container_image: intersystemsdc/iris-community:2019.4.0.383.0-zpm

# More of these will be discussed later...

# Steps represent a sequence of tasks that will be executed as part of the job

steps:

# These will be shown later...

For this example, there are a number of environment variables in use. To apply this example to other packages using the ObjectScript Package Manager, many of these wouldn't need to change at all, though some would.

env:

# ** FOR GENERAL USE, LIKELY NEED TO CHANGE: **

package: apps.rest

container_image: intersystemsdc/iris-community:2019.4.0.383.0-zpm

# ** FOR GENERAL USE, MAY NEED TO CHANGE: **

build_flags: -dev -verbose # Load in -dev mode to get unit test code preloaded

test_package: UnitTest

# ** FOR GENERAL USE, SHOULD NOT NEED TO CHANGE: **

instance: iris

# Note: test_reports value is duplicated in test_flags environment variable

test_reports: test-reports

test_flags: >-

-verbose -DUnitTest.ManagerClass=TestCoverage.Manager -DUnitTest.JUnitOutput=/test-reports/junit.xml

-DUnitTest.FailuresAreFatal=1 -DUnitTest.Manager=TestCoverage.Manager

-DUnitTest.UserParam.CoverageReportClass=TestCoverage.Report.Cobertura.ReportGenerator

-DUnitTest.UserParam.CoverageReportFile=/source/coverage.xml

If you want to adapt this to your own package, just drop in your own package name and preferred container image (must include zpm - see https://hub.docker.com/r/intersystemsdc/iris-community). You might also want to change the unit test package to match your own package's convention (if you need to load and compile unit tests before running them to deal with any load/compile dependencies; I had some weird issues specific to the unit tests for this package, so it might not even be relevant in other cases).

The instance name and test_reports directory shouldn't need to be modified for other use, and the test_flags provide a good set of defaults - these support having unit test failures flag the build as failing, and also handle export of jUnit-formatted test results and a code coverage report.

Build Steps

Checking out GitHub Repositories

In our motivating example, two repositories need to be checked out - the one being tested, and also my fork of Forgery (because the unit tests need it).

# Checks out this repository under $GITHUB_WORKSPACE, so your job can access it

- uses: actions/checkout@v2

# Also need to check out timleavitt/forgery until the official version installable via ZPM

- uses: actions/checkout@v2

with:

repository: timleavitt/forgery

path: forgery

$GITHUB_WORKSPACE is a very important environment variable, representing the root directory where all of this runs. From a permissions perspective, you can do pretty much whatever you want within that directory; elsewhere, you may run in to issues.

Running the InterSystems IRIS Container

After setting up a directory where we'll end up putting our test result reports, we'll run the InterSystems IRIS Community Edition (+ZPM) container for our build.

- name: Run Container

run: |

# Create test_reports directory to share test results before running container

mkdir $test_reports

chmod 777 $test_reports

# Run InterSystems IRIS instance

docker pull $container_image

docker run -d -h $instance --name $instance -v $GITHUB_WORKSPACE:/source -v $GITHUB_WORKSPACE/$test_reports:/$test_reports --init $container_image

echo halt > wait

# Wait for instance to be ready

until docker exec --interactive $instance iris session $instance < wait; do sleep 1; done

There are two volumes shared with the container - the GitHub workspace (so that the code can be loaded; we'll also report test coverage info back to there), and a separate directory where we'll put the jUnit test results.

After "docker run" finishes, that doesn't mean the instance is fully started and ready to command yet. To wait for the instance to be ready, we'll keep trying to run a "halt" command via iris session; this will fail and continue trying once per second until it (eventually) succeeds, indicating that the instance is ready.

Installing test-related libraries

For our motivating use case, we'll be using two other libraries for testing - TestCoverage and Forgery. TestCoverage can be installed directly via the Community Package Manager; Forgery (currently) needs to be loaded via zpm "load"; but both approaches are valid.

- name: Install TestCoverage

run: |

echo "zpm \"install testcoverage\":1:1" > install-testcoverage

docker exec --interactive $instance iris session $instance -B < install-testcoverage

# Workaround for permissions issues in TestCoverage (creating directory for source export)

chmod 777 $GITHUB_WORKSPACE

- name: Install Forgery

run: |

echo "zpm \"load /source/forgery\":1:1" > load-forgery

docker exec --interactive $instance iris session $instance -B < load-forgery

The general approach is to write out commands to a file, then run then in IRIS session. The extra ":1:1" in the ZPM commands indicates that the command should exit the process with an error code if an error occurs, and halt at the end if no errors occur; this means that if an error occurs, it will be reported as a failed build step, and we don't need to add a "halt" command at the end of each file.

Building and Testing the Package

Finally, we can actually build and run tests for our package. This is pretty simple - note use of the $build_flags/$test_flags environment variables we defined earlier.

# Runs a set of commands using the runners shell

- name: Build and Test

run: |

# Run build

echo "zpm \"load /source $build_flags\":1:1" > build

# Test package is compiled first as a workaround for some dependency issues.

echo "do \$System.OBJ.CompilePackage(\"$test_package\",\"ckd\") " > test

# Run tests

echo "zpm \"$package test -only $test_flags\":1:1" >> test

docker exec --interactive $instance iris session $instance -B < build && docker exec --interactive $instance iris session $instance -B < test && bash <(curl -s https://codecov.io/bash)

This follows the same pattern we've seen, writing out commands to a file then using that file as input to iris session.

The last part of the last line uploads code coverage results to codecov.io. Super easy!

Uploading Unit Test Results

Suppose a unit test fails. It'd be really annoying to have to go back through the build log to find out what went wrong, though this may still provide useful context. To make life easier, we can upload our jUnit-formatted results and even run a third-party program to turn them into a pretty HTML report.

# Generate and Upload HTML xUnit report

- name: XUnit Viewer

id: xunit-viewer

uses: AutoModality/action-xunit-viewer@v1

if: always()

with:

# With -DUnitTest.FailuresAreFatal=1 a failed unit test will fail the build before this point.

# This action would otherwise misinterpret our xUnit style output and fail the build even if

# all tests passed.

fail: false

- name: Attach the report

uses: actions/upload-artifact@v1

if: always()

with:

name: ${{ steps.xunit-viewer.outputs.report-name }}

path: ${{ steps.xunit-viewer.outputs.report-dir }}

This is mostly taken from the readme at https://github.com/AutoModality/action-xunit-viewer.

The End Result

If you want to see the results of this workflow, check out:

Logs for the CI job on intersystems/apps-rest (including build artifacts): https://github.com/intersystems/apps-rest/actions?query=workflow%3ACITest coverage reports: https://codecov.io/gh/intersystems/apps-rest

Please let me know if you have any questions!

Article

Mihoko Iijima · Mar 5, 2021

**This article is a continuation of this post.**

The purpose of this article is to explain how the Interoperability menu works for system integration.

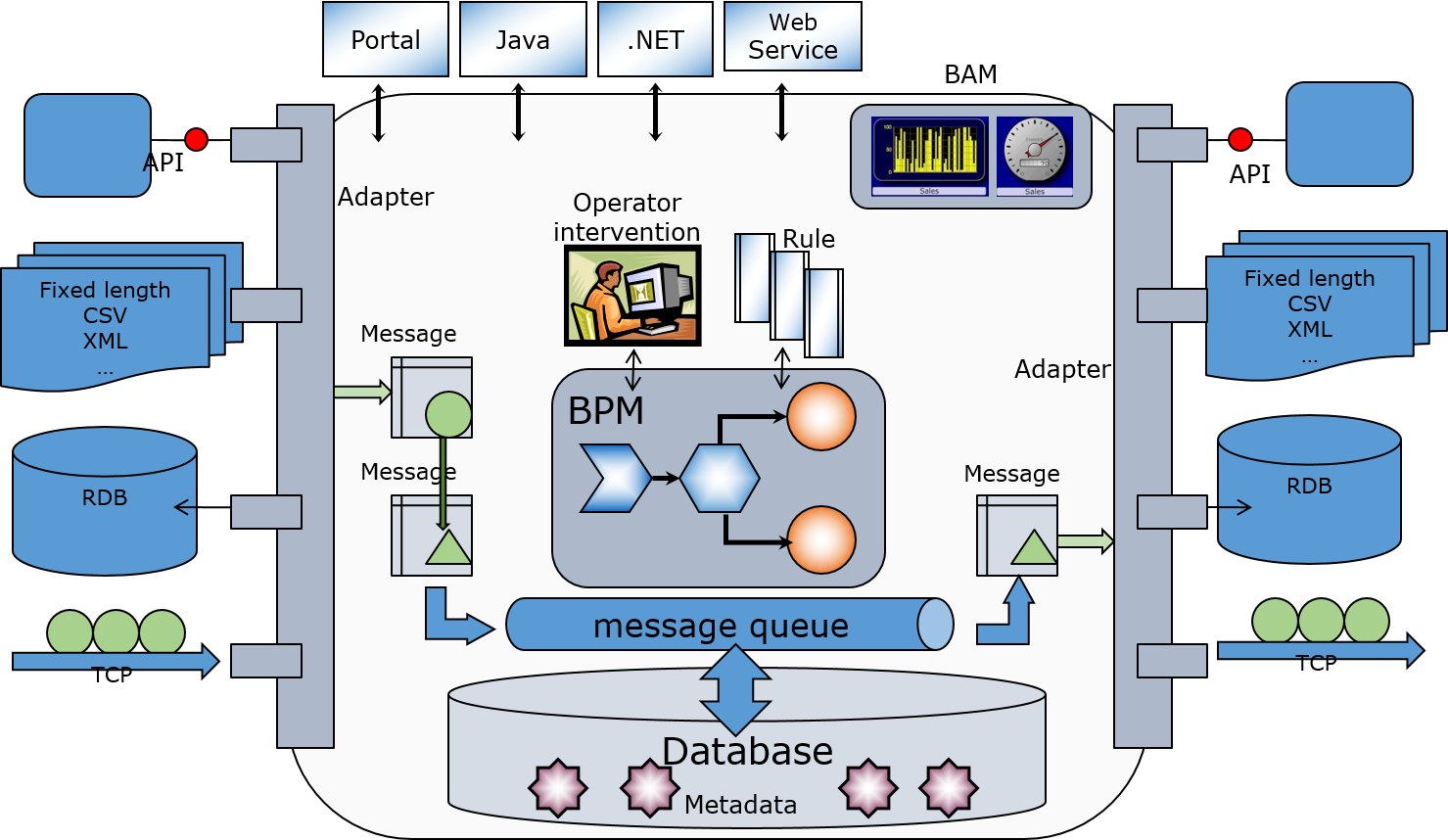

The left side of the figure is the window for accepting information sent from external systems.

There are various ways to receive information, such as monitoring the specified directory at regular intervals to read files, periodically querying the database, waiting for input, or directly calling and having it passed from applications in other systems.

In the system integration mechanism created in the IRIS Interoperability menu, the received information is stored in an object called a **message**. The **message** is sent to the component responsible for the subsequent processing.

A **message** can be created using all the received information or only a part of it.

Suppose you want to send the information contained in the **message** to an external system. In that case, you need to send the message to the component responsible for requesting the external network to process it (the right side of the figure). The component that receives the **message** will request the external system to process it.

Besides, suppose a **message** requires human review, data conversion, or data appending. In that case, the **message** is sent to the component in the middle of the diagram (BPM), which is responsible for coordinating the processing flow.

**Messages** are used to send and receive data between each component. When a **message** is sent or received, the message is automatically stored in the database.

Since **messages** are stored in the database, it is possible to check the difference before and after the data conversion. Check the **message** that was the source of a problem during an operation or start over (resend) from the middle of the process. Verify the status using **messages** at each stage of development, testing, and operation.

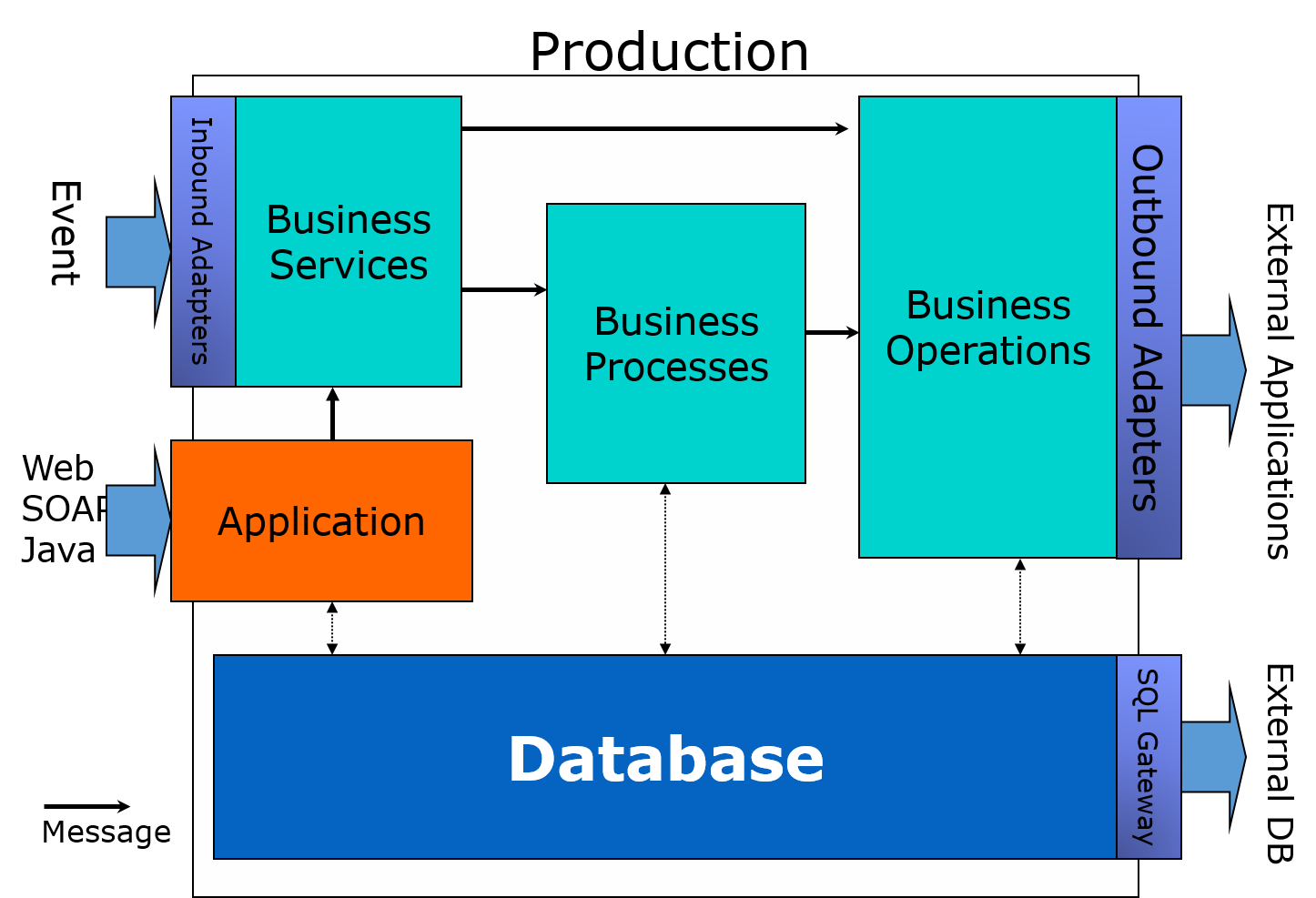

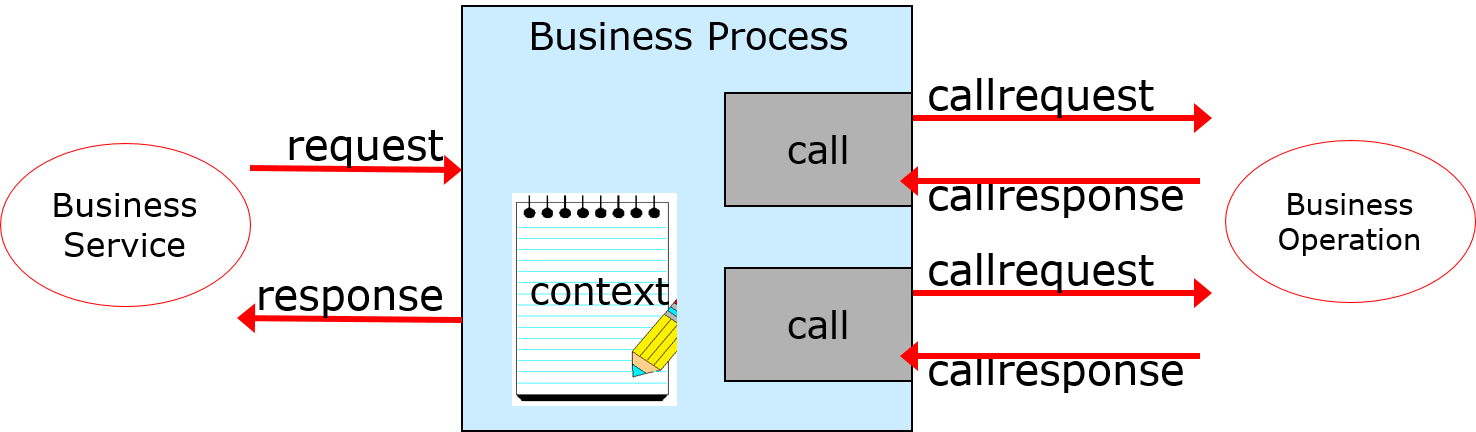

A simple picture of system integration would be divided into three components (business services, business processes, and business operations), as shown in the figure below.

There is also a definition called "**production**" that stores information about the components to be used (e.g., connection information).

The role of each component is as follows:

**Business Services**

Responsible for receiving information from external sources, creating **messages**, and sending **messages** to other components.

**Business Processes**

This role is activated when a **message** is received and is responsible for coordinating the process (calling components in the defined order, waiting for responses, waiting for human review results, etc.).

**Business Operations**

This function is activated when a **message** is received and has a role in requesting the external system to process the message.

**Messages** are used to send and receive data between components.

Components other than business services initiate processing when they receive a **message**.

The question is, what is the purpose of creating and using this **message**?

**Messages** are created by retrieving the information you want to relay to the external system from entered data into the business service.

Since not all external systems connected to IRIS use the same type of data format for transmission, and the content to be relayed varies, the production can freely define message classes according to the information.

There are two types of **messages**: request (= request message) and response (= response message). The **message** that triggers the component's activation is called request (= request message), and the **message** that the component responds to after processing is called response (= response message).

These **messages** will be designed while considering the process of relaying them.

In the following articles, we will use a study case to outline the creation of **productions**, **messages**, and components.

Nice article. To be perfect, iris interoperability could be implement bpmn to BPM task, but it is done using bpel.

Article

Mihoko Iijima · Mar 5, 2021

**This article is a continuation of this post.**

In the previous article, we discussed business operations' creation from the components required for system integration.

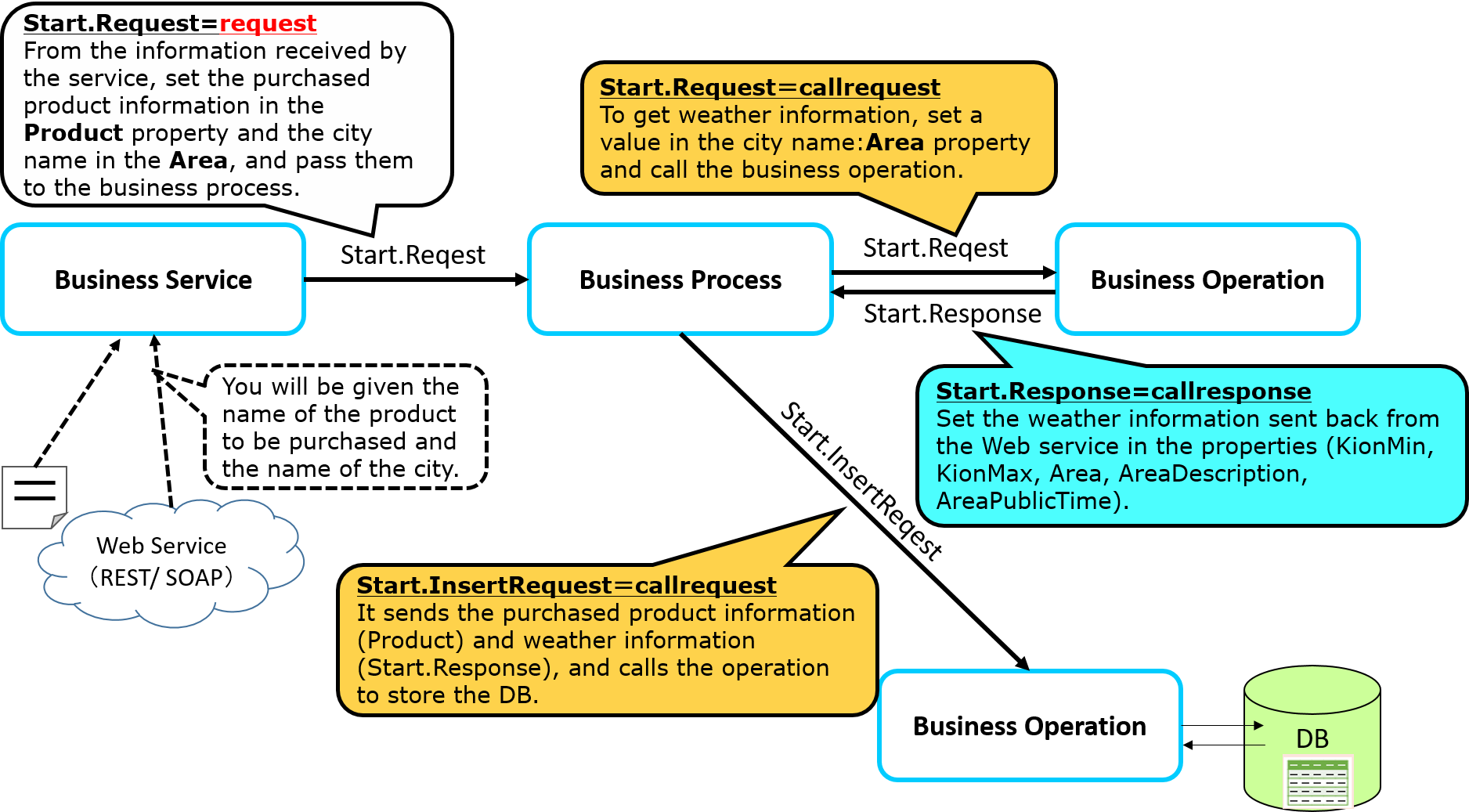

In this article, you will learn how to create a business process that calls the two business operations you have defined in the sequence order.

* Production

* Message

* **Components**

* Business Services

* **Business Processes**

* Business Operations (previous post)

The business process acts as the coordinator (command center) of the process.

The processing adjustments you may want to implement in the sample include the following:

Step 1: Provide the city name to an external Web API and request weather information.Step 2: Register the result of the query (weather information) from Step 1 and the name of the purchased product received at the start of the production.

In the sample business process, we will wait for the answer to step 1 and adjust step 2 to operate.

In the process of waiting for a response (i.e., synchronization), for instance, what happens if step 1) doesn't respond for a few days?

If new messages are delivered to the business process while waiting for a response for a few days, the messages will not be dismissed since they are stored in a queue. However, the business process will not process new messages, and there will be a delay in the operation.

Note: Business processes and business operations have cues.

Therefore, in production, when there is a synchronous call, there are two ways for the business process to move: **A) to synchronize perfectly**, and B) to save the state of the business process itself in the database and hand over the execution environment so that other processes can run while waiting for a response.

**A) How to synchronize perfectly:**

While a synchronous call is being made, the business process's processing is ongoing, and waiting for the next message to be processed until all processing is completed.➡This function is used when the order of processing needs to be guaranteed in the first-in-first-out method.

B) The method of saving the state of the business process itself in the database and hand over the execution environment so that other processes can run while waiting for a response is

When a synchronous call is made, the process saves its state in the database. When a response message is received, and it is time to process the message, it opens the database and executes the next process. (IRIS will manage the storage and re-opening of business processes in the database). ➡ Used when it is acceptable to switch the processing order of messages (i.e., when it is allowed to process more and more different messages received while waiting for a response).

In the sample, **B)** is used.

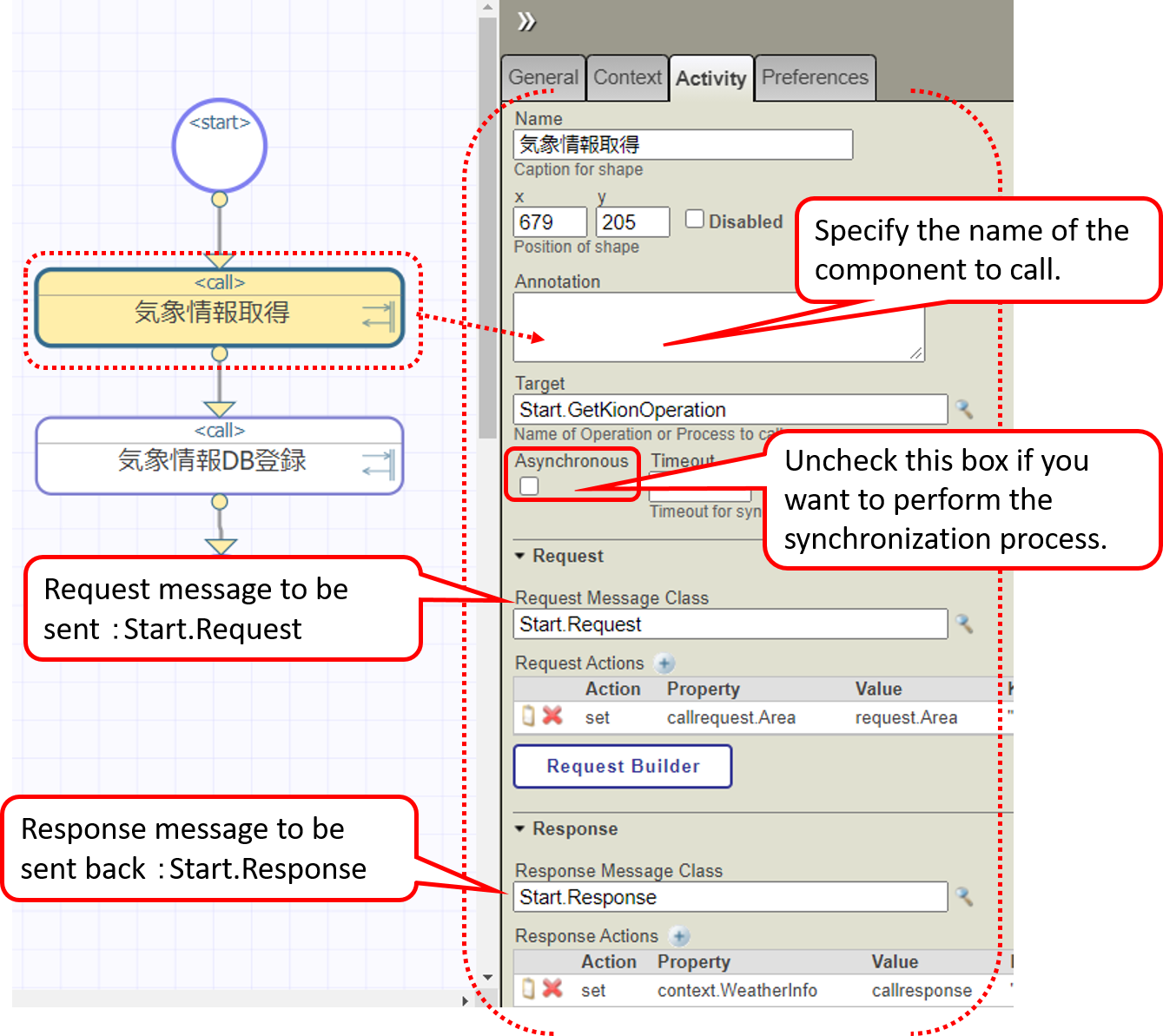

There are two types of editors for creating business processes: a Business Process Editor that allows you to place processing boxes (activities) and implement them while defining their execution, and a method for creating them using ObjectScript in Studio or VSCode.

If you use the Business Process Editor, you will use the call activity to invoke the component, but this activity is **implemented** in the **B)** way. **Of course, you can also implement the** **A)** method in the Business Process Editor, except that you will not use the call activity in that case (you will use the code activity).

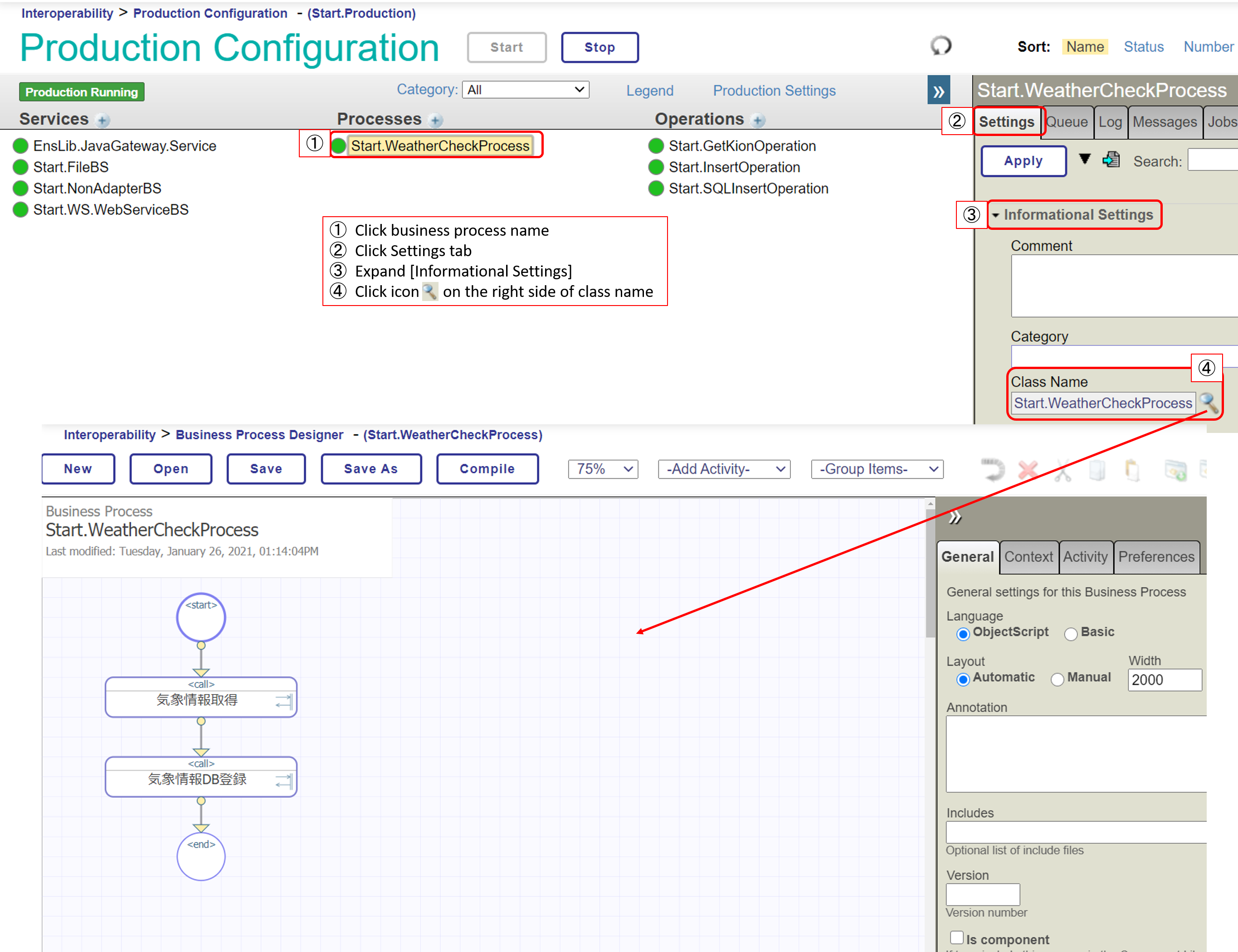

In this section, I will explain how to create it.

If you use the Business Process Editor, you write them in the Management Portal.

You can also open the business process from the production configuration page. The figure below shows the procedure.

Icons like .png) in this editor are called activities, and those marked with are activities that can invoke other components.

This symbol.png) indicates that a response message will be returned (i.e., a synchronous call will be made). The activity defaults to the asynchronous call setting, which can be changed as needed.

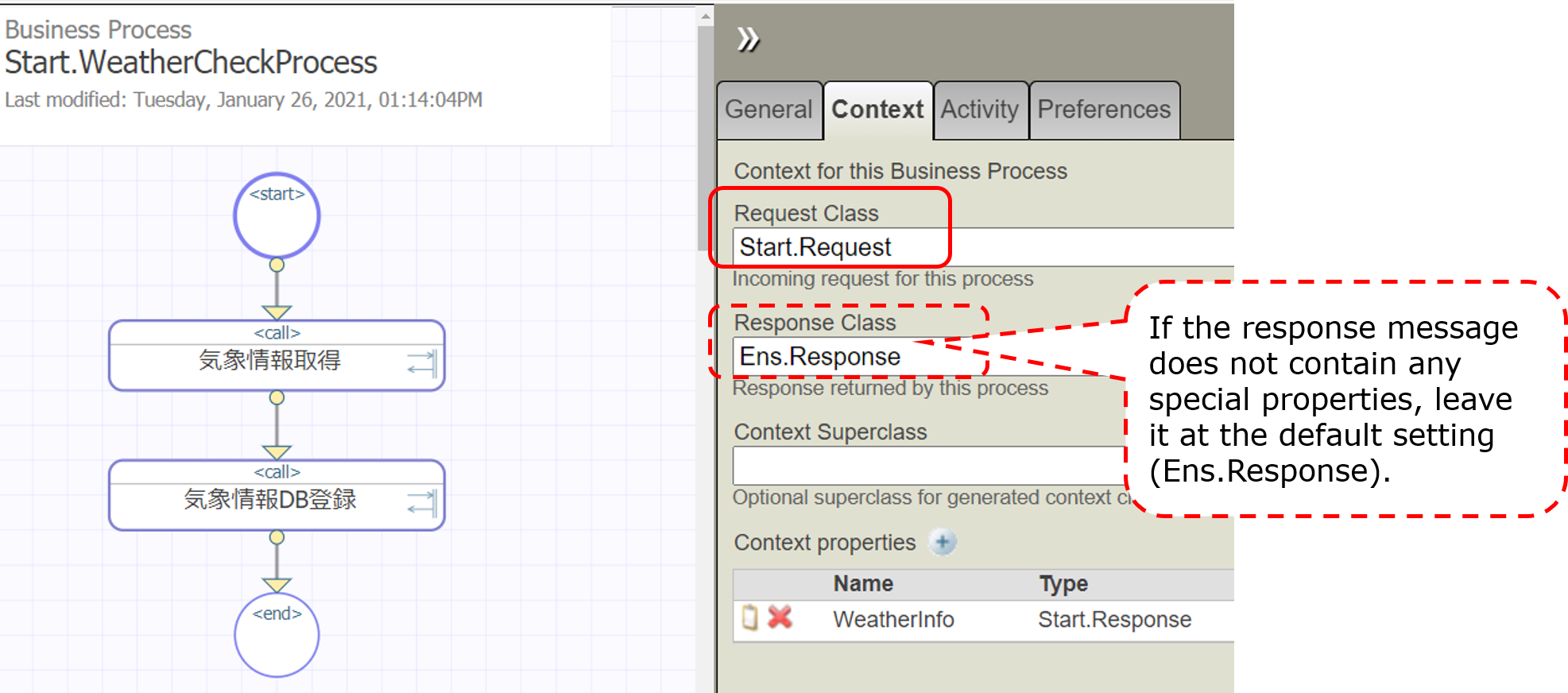

Now let's look at business processes, which are components that are invoked upon receiving a request message, as well as business operations.

In the sample, the request message: It is set to start when it receives a Start.Request and does not return a response message.

In the business process, messages appear in various situations.

Request messages that are sent to business processes.

Request message (+ response message) to be sent when calling another component using the activity.

In the Business Process Editor, the names of the objects that store messages are clearly separated to be able to see which message was sent from which destination.

* request(basic requirements)

The message that triggered the start of the business process, in our example, is Start.Request (the message to be specified in the Request settings on the Context tab in the Business Process Editor)

* response(basic response)

Response message to return to the caller of the business process (not used in the sample) (message to be specified in the settings of the response in the context tab in the Business Process Editor)

* callrequest(request message)

Request message to be sent when calling the component determined by the activity.

* callresponse(response message)

Response message returned from the component specified by the activity.

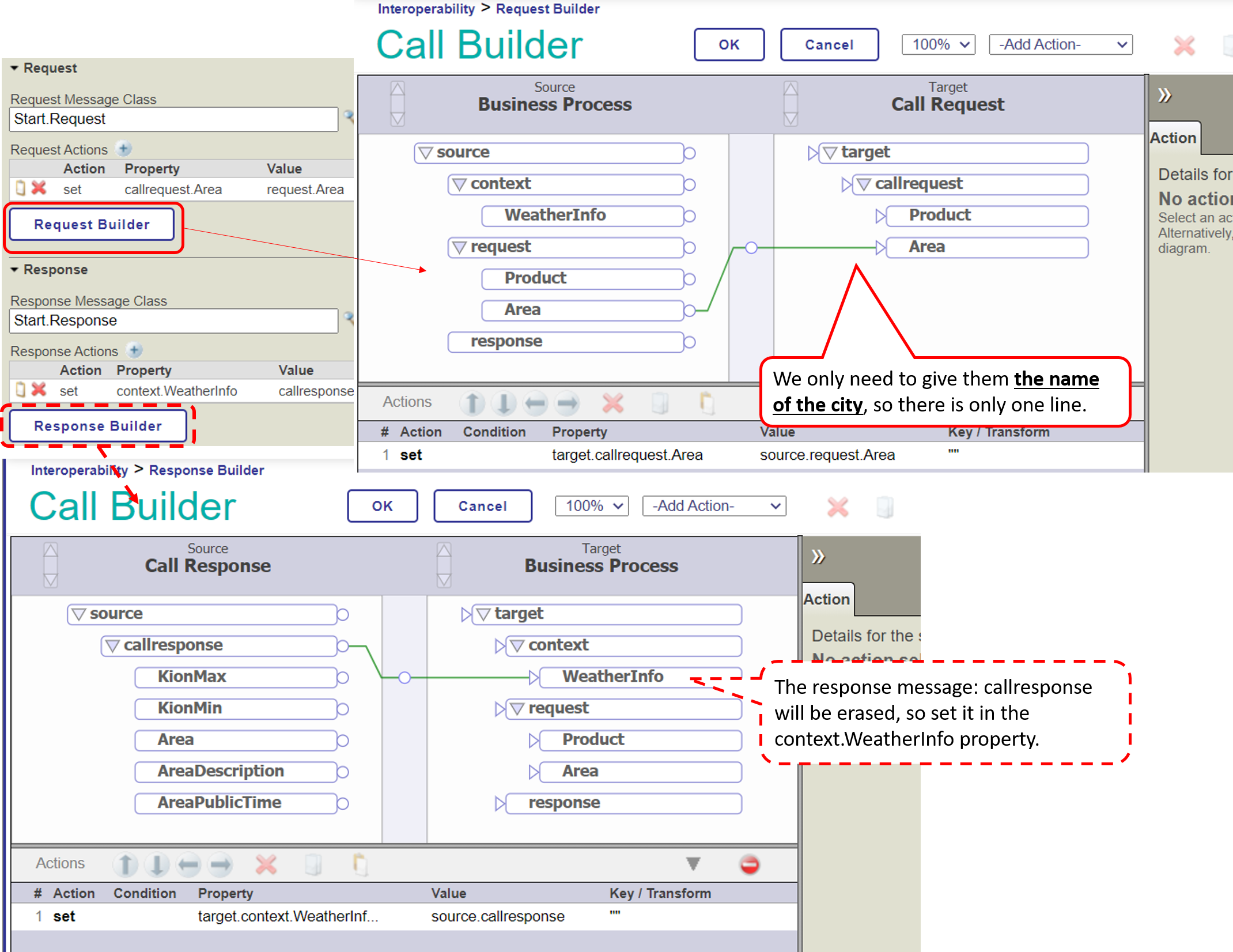

**callrequest and callresponse are objects that will be deleted when the call processing of the activity is completed.**

All other objects will not disappear until the business process is finished.

Now comes the problem when callresponse disappears.

That's because, as you can see in this sample,

**When calling a component, if you want to use the response result of a previously called component, the response message will be lost, and the information that was to be used in the next component will be erased.**

It is a problem 😓

What should we do?・・・・・

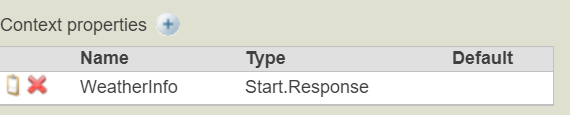

In such a case, you can use the context object.

The context object, like request/response, is an object that survives until the end of the business process.

Moreover, since context is a generic object, it can be defined in the process editor.

In addition to the context, the response object can also be used if it has a property that matches the inherited data's saving.

Now, let's go over the steps again.

Response message in the light blue balloon: Start.Response is an object that will be deleted when the process is finished.

Since we want to use the response message (Start.Response) that contains the weather information as the message to be sent to the next [Business Operation for DB Update], we have to implement the context object in a way that all the property values of the response message (Start.Response) can be assigned to it.

Then what is the setting for the context property?

The properties are defined in "Context Properties" in the Context tab of the Business Process Editor.

In this case, we would like to save all the properties of the response message (Start.Response) to the context object. Therefore, the property type specification is set to Start.Response.

Following that, check the settings in the activity.

The request and response messages have a button called ○○ Builder.

Clicking on this button will launch a line-drawing editor that allows you to specify what you want to register in the properties of each message.

After this, the business operation for requesting a database update (Start.SQLInsertOperation or Start.InsertOperation) is called in the same way with the activity, and you are all set.

(For more information, see Configuring .png) for Business Processes).

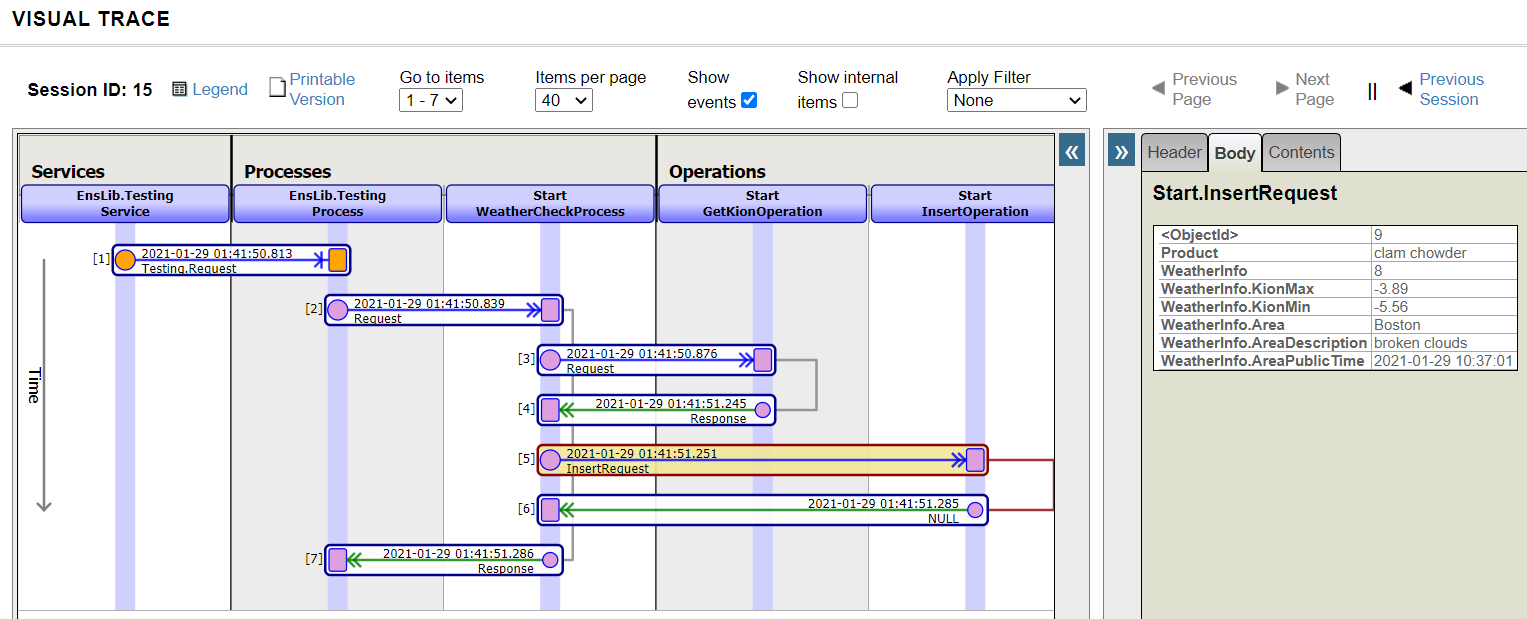

Once you have completed the verification, you can test it. The testing method is the same as the one used for testing business operations (see this article).

The trace after the test is as follows:

Since the business process is the coordinator, we could see that it invoked the defined components sequentially, keeping the synchronous execution.

Note 1: The sample only deals with the call activity, but various other activities such as data transformation.

Note 2: Business processes created by ObjectScript alone, other than the Business Process Editor, inherit from the Ens.BusinessProcess class. If it is created in the Business Process Editor, it inherits from the Ens.BusinessProcessBPL class.

The business process is the coordinator of the system integration process.The Business Process Editor provides the following types of variables for messages (request/response/callrequest/callreponse/context).A business process created with the Business Process Editor can work in a way that does not delay other messages, even if there is synchronization in the component's calling.

In the next section, we will finally show you how to develop the last component: business services.

Discussion

Matthew Waddingham · May 17, 2021

We've been tasked with developing a file upload module as part of our wider system, storing scanned documents against a patients profile. Our Intersystems manager suggested storing those files in the DB as streams would be the best approach and it sounded like a solid idea, it can be encrypted, complex indexes, optimized for large files and so on. However the stake holder questioned why would we want to do that over storing them in windows folders and that putting it in the DB was nuts. So we were wondering what everyone else has done in this situation and what made them take that route. The nice advantage of storing them in the DB is that is makes the following easier:

- refreshing earlier environments for testing- mirroring the file contents- encryption- simpler consistent backups

However, if you're talking about hundreds of GBs of data, then you can run into issues which you should weigh against the above:

- journaling volume- .dat size- .dat restore time

One way to help mitigate the above for larger volume file management is to map the classes that are storing the the stream properties into their own .DAT so they can be managed separately from other application data, and then you can even use subscript level mapping to cap the size of the file .DATs.

Hope that helps I can't disagree with Ben, there is a cut-off point where it makes more sense to store the files external to IRIS however it should be noted that if I was working with any other database technology such as Oracle or SQL Server I wouldn't even consider storing 'Blobs' in the database. However Cache/Ensemble/IRIS is extremely efficient at storing stream data especially binary steams.

I agree with Ben that by storing the files in the database you will have the benefits of Journallng and Backups which support 24/7 up time. If you are using Mirroring as part of your Disaster Recovery strategy then restoring your system will be faster.

If you store the files externally you will need to back up the files as a separate process from Cache/Ensemble/IRIS backups. I assume that you would have a seperate file server as you wouldn't want to keep the external files on the same server as your Cach/Ensemble/IRIS server for two reasons:

1) You would not want the files to be stored on the same disk as your database .dat files as the disk I/O might be compromised

2) If your database server crashes you may lose the external files unless they are are on separate server.

3) You would have to backup your file server to another server or suitable media

4) If the steam data is stored in IRIS then you can use iFind and iKnow on the file content which leads you into the realms of ML, NLP and AI

5) If your Cache.dat files and the External files are sored on the same disk system you potentially run into disk fragmentation issues over time and the system will get slower as the fragmentation gets worse. Far better to have your Cache.dat files on a disk system of their own where the database growth factor is set quite high but the database growth will be contiguous and fragmentation is considerably reduced and the stream data will be managed as effectively as any other global structure in Cache/Ensemble/IRIS.

Yours

Nigel Fragmentations issues, with SSD disks not an issue anymore.

But in any way, I agree with storing files in the database. I have a system in production, where we have about 100TB of data, while more than half is just for files, stored in the database. Some of our .dat files by mapping used exclusively for streams, and we take care of them, periodically by cutting them at some point, to continue with an empty database. Mirroring, helps us do not to worry too much about backups. But If would have to store such amount of files as files on the filesystem, we would lose our mind, caring about backups and integrity. Great data point! Thanks @Dmitry.Maslennikov :) I'm throwing in another vote for streams for all the reasons in the above reply chain, plus two more:

1. More efficient hard drive usage. If you have a ton of tiny files and your hard drive is formatted with a larger allocation unit, you're going to use a lot of space very inefficiently and very quickly.

2. At my previous job, we got hit by ransomware years ago that encrypted every document on our network. (Fortunately, we had a small amount of data and good offline backup process, so we were able to recover fairly quickly!) We were also using a document management solution that ran on Cache and stored the files as Stream objects, and they were left untouched. I'm obviously not going to say streams and ransomewareproof, but that extra layer of security can't hurt! Thank you all for your input, they're all sound reasoning that I can agree with. It's not a good idea to store files in the DB that you'll simply be reading back in full. The main issue you'll suffer from if you do hold them in the database (which nobody else seems to have picked up on) is that you'll needlessly flush/replace global buffers every time you read them back (the bigger the files, the worse this will be). Global buffers are one of the keys to performance.

Save the files and files and use the database to store their filepaths as data and indices.

Hi Rob, what factors play a part in this though, we'd only be retrieving a single file at a time (per user session obviously) and the boxes have around 96gb-128gb memory each (2 app, 2 db) if that has any effect on your answer? I've mentioned above a system with a significant amount of streams stored in the database. And just checked how global buffers used there. And streams are just around 6%. The system is very active, including files. Tons of objects created every minute, attached files, changes in files (yeah, our users can change MS Word files online on the fly, and we keep all the versions).

So, I still see no reasons to change it. And still, see tons of benefits, of keeping it as is. Hey Matthew,

No technical suggestions from me, but I would say that there are pros/cons to file / global streams which have been covered quite well by the other commenters. For the performance concern in particular, it is difficult to compare different environments and use patterns. It might be helpful to test using file / global streams and see how the performance for your expected stream usage, combined with your system activity, plays into your decision to go with one or the other. I agree, for our own trust we'll most likely go with Stream. However I've suggested we plan to build both options for customers but we'll just reference the links to files and then they can implement back up etc as they see fit. Great! This was an interesting topic and I'm sure one that will help future viewers of the community. There are a lot of considerations.

Questions:

Can you describe what are you going to do with that streams (or files I guess)?

Are they immutable?

Are they text or binary?

Are they already encrypted or zipped?

Average stream size?

Global buffers are one of the keys to performance.

Yes, that's why if streams are to be stored in the db they should be stored in a separate db with distinct block size and separate global buffers. Having multiple different global buffers for different block sizes, does not make sense. IRIS will use bigger size of block for lower size blocks inefficiently. The only way to separate is, to use a separate server, right for streams. For us it will be scanned documents (to create a more complete picture of a patients record in one place) so we can estimate a few of the constants involved to test how it will perform under load. I'm not sure what you mean by this. On an IRIS instance configured with global buffers of different sizes, the different sized buffers are organized into sperate pools. Each database is assigned to a pool based on what is the smallest size available that can handle that database. If a system is configured with 8KB and 32KB buffers, the 32KB buffers could be assigned to handle 16KB database or 32KB databases but never 8KB databases. It depends. I would prefer to store the files in the linux filesystem with a directory structure based on a hash of the file and only store the meta-information (like filename, size, hash, path, author, title, etc) in the database. In my humble opinion this has the following advantages over storing the files in the database:

The restore process of a single file will run shorter than the restore of a complete database with all files.

Using a version control (f.e. svn or git) for the files is possible with a history.

Bitrot will only destroy single files. This should be no problem if a filesystem with integrated checksums (f.e. btrfs) is used.

Only a webserver and no database is needed to serve the files.

You can move the files behind a proxy or a loadbalancer to increase availability without having to use a HA-Setup of Caché/IRIS.

better usage of filesystem cache.

better support for rsync.

better support for incremental/differential backup.

But the pros and cons may vary depending on the size and amount of files and your server setup. I suggest to build two PoCs, load a reasonable amount of files in each one and do some benchmarks to get some figures about the performance and to test some DR- and restore-scenarios. Jeffrey, thanks. But if I would have only 16KB blocks buffer configured and with a mix of databases 8KB (mostly system or CACHETEMP/IRISTEMP) and some of my application data stored in 16KB blocks. 8KB databases in any way will get buffered in 16KB Buffer, and they will be stored one to one, 8KB data in 16KB buffer. That's correct?

So, If I would need to separate global buffers for streams, I'll just need the separate from any other data block size and a significantly small amount of global buffer for this size of the block and it will be enough for more efficient usage of global buffer? At least for non-stream data, with a higher priority? Yes, if you have only 16KB buffers configured and both 8KB and 16KB databases, then the 16KB buffers will be used to hold 8KB blocks - one 8KB block stored in one 16KB buffer using only 1/2 the space...

If you allocate both 8KB and 16KB buffers then (for better or worse) you get to control the buffer allocation between the 8KB and 16KB databases.

I'm just suggesting that this is an alternative to standing up a 2nd server to handle streams stored in a database with a different block size.

One more consideration for whether to store the files inside the database or not is how much space gets wasted due to the block size. Files stored in the filesystem get their size rounded up to the block size of the device. For Linux this tends to be around 512 bytes (blockdev --getbsz /dev/...). Files stored in the database as streams are probably* stored using "big string blocks". Depending on how large the streams are, the total space consumed (used+unused) may be higher when stored in a database. ^REPAIR will show you the organization of a data block.

*This assumes that the streams are large enough to be stored as big string blocks - if the streams are small and are stored in the data block, then there will probably be little wasted space per block as multiple streams can be packed into a single data block.

Some info about blocks, in this article and others in cycle. In my opinion, it is much better & faster to store binary files out of the database. I have an application with hundreds of thousands of images. To get a faster access on a Windows O/S they are stored in a YYMM folders (to prevent having too many files in 1 folder that might slow the access) while the file path & file name are stored of course inside the database for quick access (using indices). As those images are being read a lot of times, I did not want to "waste" the "cache buffers" on those readings, hence storing them outside the database was the perfect solution. Hi, I keep everything I need in Windows folders, I'm very comfortable, I have everything organized. But maybe what you suggest won't look bad and be decent in terms of convenience! it depends on the file type , content, use frequency and so on , each way has its advantage

Announcement

Anastasia Dyubaylo · Nov 9, 2021

Hey Community!

New video is already waiting on InterSystems Developers YouTube:

⏯ Introducing the InterSystems IRIS Smart Factory Starter Pack for Manufacturing

See how InterSystems IRIS® data platform and the Smart Factory Application Starter Pack enable manufacturers to fast-track their smart factory initiatives. The package combines operational technology systems and real-time signals from the shop floor with enterprise IT and analytics systems, enabling manufacturers to improve quality and efficiency, respond faster to events, and predict and avoid problems before they occur.

Presenters: 🗣 @Joseph.Lichtenberg, Director, Product and Industry Marketing, InterSystems 🗣 @Marco.denHartog, Chief Technology Officer, IT Visors🗣 Marcel Artz, Chief Information Officer, Vlisco

Enjoy and stay tuned!

Announcement

Anastasia Dyubaylo · Feb 1, 2022

Hi Community,

New video is already on InterSystems Developers YouTube! Learn about the easy process of migrating to InterSystems IRIS:

⏯ Join Us on a Journey from Caché/Ensemble to InterSystems IRIS

🗣 Presenter: @Jeffrey.Morgan, Sales Engineer, InterSystems

Enjoy and stay tuned!

Question

Oleksandr Kyrylov · Mar 3, 2022

Hello dear community

I tried to run windows command from an intersystems terminal using objectscript routine.

routine :

Zpipe quit ; CPIPE example to run host console command

cmd(command="",test=0) ;

if command="" set command="dir"

quit $$execute(command,test) execute(cmd,test) Public {

set dev="|CPIPE|1"

set $zt="cls"

set empty=0

open dev:cmd:0

write:test $test,!

else write "pipe failed",! quit 0

while empty<3 {

use dev read line

set empty=$s($l(line):0,1:$i(empty))

use 0 write line,! ;;; or do any kind of analysis of the line

} cls ;

set $zt="" use 0

close dev

if $ze'["<ENDOFFILE>" w $ze,!

quit $t

}

In InterSystems terminal:

do cmd^pipe("cmd /c ""cd /d E:\repos\twilio-video-app-react && call npm run build""")

When i run the command directly in windows shell with admin privileges it works.

The routine runs the command without admin privileges and that causes error:

question:

Is there way to add admin privileges to intersystems?

Thanks! I believe the privilege comes from the OS user that is launching Terminal.

Have you tried running Terminal as an Admin and seeing if runs as expected?

Announcement

Shane Nowack · Oct 19, 2022

Get certified on InterSystems IRIS System Administration!

Hello Community,

After beta testing the new InterSystems IRIS System Administration Specialist exam, the Certification Team of InterSystems Learning Services has performed the necessary calibration and adjustments to release it to our community. It is now ready for purchase and scheduling in the InterSystems certification exam catalog. Potential candidates can review the exam topics and the practice questions to help orient them to exam question approaches and content. Passing the exam allows you to claim an electronic certification badge that can be embedded in social media accounts such as Linkedin.

If you are new to InterSystems Certification, please review our program pages that include information on taking exams, exam policies, FAQ and more. Also, check out our Organizational Certification that can help your organization access valuable business opportunities and establish your organization as a solid provider of InterSystems solutions in our marketplace.

The Certification Team of InterSystems Learning Services is excited about this new exam and we are also looking forward to working with you to create new certifications that can help you advance your career. We are always open to ideas and suggestions at certification@intersystems.com.

Looking forward to celebrating your success,

Shane Nowack - Certification Exam Developer, InterSystems @Shane.Nowack - congratulations on his launch!! Very exciting and a great addition to the Professional Certification Exam portfolio for ISC technology :)

Announcement

Anastasia Dyubaylo · Mar 18, 2022

Hi Community,

We're pleased to invite you to the upcoming webinar in Spanish called "Exploring the new Embedded Python feature of InterSystems IRIS"

Date & Time: March 30, 4:00 PM CEST

Speaker: @Eduardo.Anglada, Sales Engineer, InterSystems Iberia

The webinar is aimed at any Python developer or data scientist.

At the webinar, we'll discover the potential of Python, the new programming language added to IRIS. Python is at its peak, increasing its popularity every year. It is now used by +1.5M developers around the world, and has thousands of libraries to perform almost any function needed. It is also the most widely used language in data science.

During the webinar, we'll explain how to create anonymous data and to develop interactive dashboards.

If you are using Python, don't miss it! You'll have no excuses to avoid trying InterSystems IRIS.

👉 Register today and enjoy!

Announcement

Shane Nowack · Apr 11, 2022

Get certified on InterSystems CCR!

Hello Community,

After beta testing the new CCR Technical Implementation Specialist exam, the Certification Team of InterSystems Learning Services has performed the necessary calibration and adjustments to release it to our community. It is now ready for purchase and scheduling in the InterSystems certification exam catalog. Potential candidates can review the exam topics and the practice questions to help orient them to exam question approaches and content. Passing the exam allows you to claim an electronic certification badge that can be embedded in social media accounts such as Linkedin.

If you are new to InterSystems Certification, please review our program pages that include information on taking exams, exam policies, FAQ and more. Also, check out our Organizational Certification that can help your organization access valuable business opportunities and establish your organization as a solid provider of InterSystems solutions in our marketplace.

The Certification Team of InterSystems Learning Services is excited about this new exam and we are also looking forward to working with you to create new certifications that can help you advance your career. We are always open to ideas and suggestions at certification@intersystems.com.

One final note: The Certification Team will be proctoring free certification exams ($150 value) at Global Summit 2022. All of the products in our exam catalog will be available. The summit will be in Seattle, WA from June 20-23. All individuals registered for the Summit will be eligible for one free certification exam (that must be taken during the Summit at one of the live proctoring sessions).

Looking forward to celebrating your success,

Shane Nowack - Certification Exam Developer, InterSystems @Shane.Nowack - thank you for this tremendous news!! A hearty congratulations to you and to everyone who helped to get to this moment of full certification exam launch!

@Evgeny.Shvarov / @DC.Administration - Does the D.C. provide a way to display / share InterSystems Professional Certifications in a member's profile? If so I would love to see the instructions, and if not then perhaps we could consider it an enhancement request?

A second request would be to see a list of everyone who has publicly listed a specific certification (e.g. a catalog of experts). Has any thought been put into that? @Evgeny.Shvarov / @DC.Administration - THANK YOU for the feature that allows you to display your professional certifications in your profile, as well as the check-mark on your profile pic .... love it!

Has any thought been given to the second idea of being able to see a list of people who publicly listed a specific certification? That would make the experts more discoverable. Hi Ben,

Glad to hear you enjoyed our latest updates ;)

It seems worth implementing smth special to highlight the list of our certified members on one page.

p.s. To make your ideas more visible, feel free to submit them to our InterSystems Ideas portal. We constantly review ideas from this portal and try to follow the feedback of our users.

And thanks for your constant feedback and ideas!

Article

Deirdre Viau · Mar 16, 2022

Support is helping you troubleshoot a report. They want to reproduce a problem in their local system. Too bad they can't run your report, because the JDBC data source connection will fail. Or... is there a way?

There is a way to run a report offline, with no access to the source database. You must provide a cached query result exported from Designer. This is a file containing report source data. Support will use this to override the report's data source. When they run the report, it will get data from the file, rather than the JDBC connection.

Clarification

This has nothing to do with the IRIS BI query cache.

IRIS BI query cache

Globals used by the BI query engine to improve performance.

InterSystems Reports cached query result

A file that enables running a report offline, with no access to the source database. The report retrieves data from the file instead of the usual JDBC connection.

How to send a report with a cached query result

1. Zip and send the entire catalog folder. Indicate which report you are testing.

2. Open your report in Designer. In the Data panel on the left, right-click the dataset and choose Create Cached Query Result. If the report has multiple datasets, do this for each one. Send the exported files.

Disclaimer

Do not send a cached query result containing confidential data.

Documentation

Logi Cached Query documentation (v18) very useful tip ... thank you for writing this up!

Announcement

Anastasia Dyubaylo · Oct 12, 2022

Hi Community,

In this video you will learn about new features in InterSystems Healthshare Clinical Viewer and what's coming next:

⏯ Clinical Viewer & Navigation Application: New & Next 2022 @ Global Summit 2022

🗣 Presenter: @Julie.Smith, Clinical Product Manager, InterSystems

Stay tuned for more videos on InterSystems Developers YouTube!