Wall-M: Perform semantic queries on your email inbox and get accurate responses along with source citations

Introduction

With the rise of generative AI (GenAI), we believe users should be able to access unstructured data far more easily. Most people receive more email than they can keep track of. In investment and trading, for example, professionals must make quick decisions using as much information as possible. Similarly, senior employees at a startup who work with many teams and disciplines may struggle to organize all the emails they receive. GenAI can help with these common problems, but its tendency to hallucinate is a real concern; retrieval augmented generation (RAG) combined with hybrid search is how we address it. This is what inspired us to build WALL-M (Work Assistant LL-M).

We developed WALL-M at HackUPC 2024 as part of the InterSystems vector search challenge. It is a RAG platform designed for accurate question answering over emails with minimal hallucinations. It targets anyone who has to manage many long emails, whether in fast-paced fields such as investment and trading, in startups with multiple teams and disciplines, or simply in a full personal inbox.

What it does

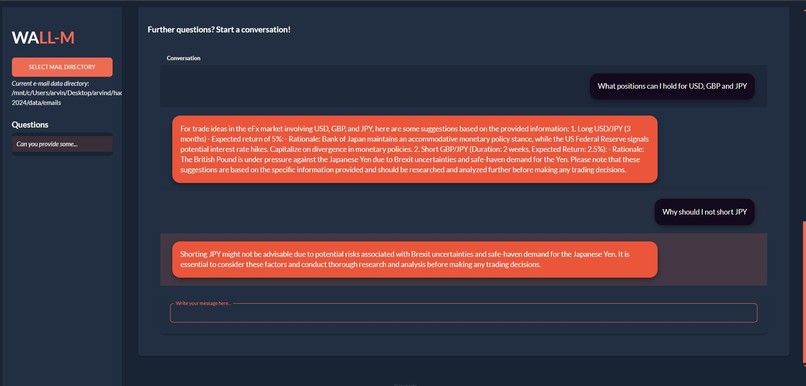

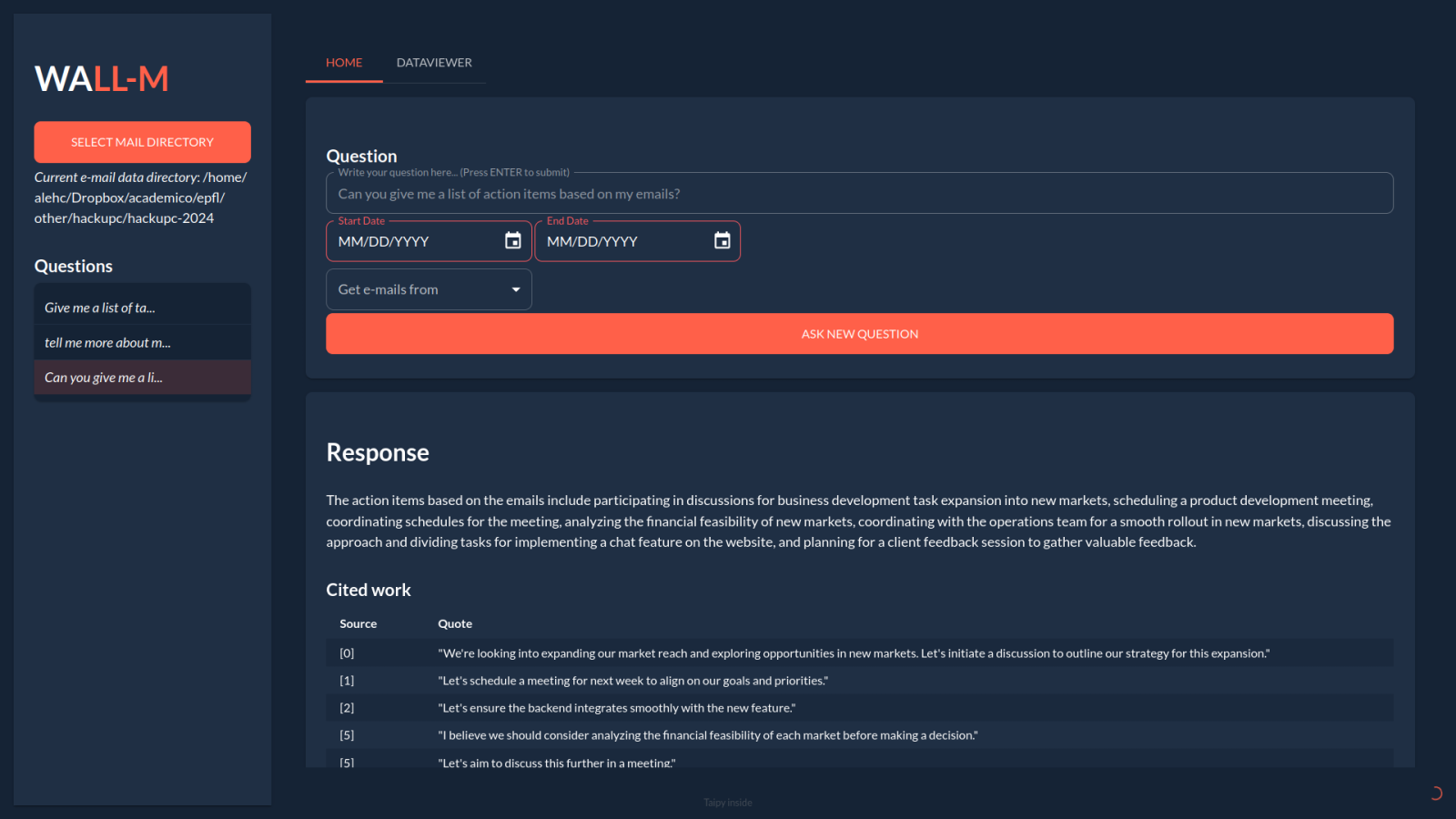

You can load the emails from your inbox and filter them by date and sender to define the context for the LLM. Within that context, you can then make specific queries about the selected emails. For example, you could ask for trading ideas based on selected bank reports or investment research reports, or an employee at a company or startup could ask for a list of action items from the work emails they received over the last week.
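The filtering step described above can be sketched in a few lines of Python. This is a minimal illustration, not WALL-M's actual code: the `Email` record, its field names, and the `select_context` helper are all hypothetical, and the addresses are placeholders.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Email:
    # Hypothetical record; the field names are illustrative, not WALL-M's schema.
    sender: str
    sent_on: date
    subject: str
    body: str


def select_context(emails, senders=None, start=None, end=None):
    """Return the emails that match the optional sender and date filters."""
    allowed = {s.lower() for s in senders} if senders else None
    selected = []
    for mail in emails:
        if allowed is not None and mail.sender.lower() not in allowed:
            continue  # sender not in the chosen set
        if start is not None and mail.sent_on < start:
            continue  # sent before the chosen window
        if end is not None and mail.sent_on > end:
            continue  # sent after the chosen window
        selected.append(mail)
    return selected


inbox = [
    Email("research@bank.example", date(2024, 5, 2), "Weekly outlook", "Rates view."),
    Email("cto@startup.example", date(2024, 5, 3), "Sprint review", "Update the roadmap."),
    Email("research@bank.example", date(2024, 3, 1), "Old report", "Outdated view."),
]

context = select_context(
    inbox,
    senders=["research@bank.example"],
    start=date(2024, 5, 1),
    end=date(2024, 5, 4),
)
print([mail.subject for mail in context])
```

Only the emails that survive these filters would be passed on to retrieval and to the LLM, which keeps the model's context limited to what the user explicitly chose.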

If you have further questions, you can chat with Wall-M in a separate segment that reuses the context selected by the initial query. This keeps the responses to follow-up questions grounded in the same emails, which limits hallucinations, and each response includes the source emails used to generate it.
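One way to keep follow-up questions tied to the same context is to fix the selected emails once and rebuild the prompt from them on every turn. The sketch below assumes this design; the `ChatSession` class is hypothetical, the `role`/`content` dictionaries follow the common chat-message convention, and the actual model call is left out.

```python
class ChatSession:
    """Keeps the selected emails fixed so every follow-up is grounded in them."""

    def __init__(self, context_emails):
        # context_emails: list of (subject, body) pairs chosen by the initial query.
        self.context_emails = list(context_emails)
        self.history = []  # alternating user / assistant turns

    def build_messages(self, question):
        # Number the emails so the model can cite them as [1], [2], ...
        sources = "\n\n".join(
            f"[{i}] {subject}\n{body}"
            for i, (subject, body) in enumerate(self.context_emails, start=1)
        )
        system = (
            "Answer only from the numbered emails below. "
            "Cite the emails you used as [n]. "
            "If the emails do not contain the answer, say so.\n\n" + sources
        )
        return [
            {"role": "system", "content": system},
            *self.history,
            {"role": "user", "content": question},
        ]

    def record(self, question, answer):
        # Store the finished turn so later questions see the conversation so far.
        self.history.append({"role": "user", "content": question})
        self.history.append({"role": "assistant", "content": answer})


session = ChatSession([("Sprint review", "Update the roadmap by Friday.")])
first = session.build_messages("What are my action items?")
session.record("What are my action items?", "Update the roadmap by Friday [1].")
second = session.build_messages("Who asked for that?")
print(len(first), len(second))
```

Because the system message is rebuilt from the same emails each time, a follow-up question cannot silently widen the context beyond what the initial query selected.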

How we built it

Frontend: Taipy

Backend: InterSystems Database, SQL

RAG + Vector Search: InterSystems Software, ChatGPT

Tools: LangChain, LlamaIndex
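To illustrate the vector search part of the stack, here is a minimal sketch of similarity ranking in plain Python. In WALL-M this step is handled by the InterSystems vector search; the code below only shows the underlying idea, with toy 3-dimensional vectors standing in for real embeddings and hypothetical function and chunk names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def top_k(query_vector, indexed_chunks, k=2):
    """Rank (chunk_id, vector) pairs by similarity to the query, best first."""
    scored = [
        (cosine_similarity(query_vector, vector), chunk_id)
        for chunk_id, vector in indexed_chunks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk_id for _, chunk_id in scored[:k]]


# Toy vectors; a real system would store embeddings produced by a model.
chunks = [
    ("email-1", [0.9, 0.1, 0.0]),
    ("email-2", [0.0, 1.0, 0.0]),
    ("email-3", [0.7, 0.3, 0.1]),
]
results = top_k([1.0, 0.0, 0.0], chunks, k=2)
print(results)
```

The retrieved chunks are what the LLM sees when it generates an answer, so the quality of this ranking directly affects how well the response is grounded.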

Challenges we ran into

- Learning to work with the Python full-stack framework Taipy
- Optimizing prompts to avoid hallucinations
- Using LangChain to get a specific template that includes citations pointing to the source of each response or claim
- Incompatibilities between the different tools we wanted to use
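As an illustration of the citation challenge, a response that cites its sources with numbered markers can be checked after generation. The sketch below is one possible safeguard, not something the project is known to implement; the `[n]` marker format and the `check_citations` helper are assumptions.

```python
import re


def check_citations(answer, num_sources):
    """Split the [n] markers in an answer into valid and out-of-range indices."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    valid = [n for n in cited if 1 <= n <= num_sources]
    invalid = [n for n in cited if n not in valid]
    return valid, invalid


answer = "Rates are expected to fall [1]. The roadmap is due Friday [2][5]."
valid, invalid = check_citations(answer, num_sources=3)
print(valid, invalid)
```

An answer that cites a source index outside the provided emails, like `[5]` here, could be rejected or regenerated rather than shown to the user.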

What's next for Wall-M

- Use the proof of concept for the specified use cases and evaluate its performance with benchmarks to validate the product's credibility.
- Improve integration with commonly used email applications such as Outlook and Gmail, with personalized uses that increase WALL-M's utility.

Try it out

Our GitHub repository: https://github.com/lars-quaedvlieg/WALL-M