Using iknowpy to do analytics on logs from iris-fhir-generative-ai

As mentioned in the previous article about the iris-fhir-generative-ai experiment, the project logs all of its events for later analysis. Here we discuss the two types of analysis covered by the analytics embedded in the project:

- User prompts

- Execution errors

To extract useful data for the analytics, we used the iknowpy library, an open-source Natural Language Processing library based on iKnow from the InterSystems IRIS Data Platform. It identifies entities (phrases) and their semantic context in natural language text in several languages.

Here, it is used to extract concepts from the data of each log entry. See the SaveConcepts() method of the LogConceptTable class for more details.
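A minimal sketch of per-log concept extraction follows. It is not the project's SaveConcepts() implementation. It assumes the index layout that iknowpy's `iKnowEngine` exposes through `m_index` (a `sentences` list whose `entities` carry a `type` and an `index` text). The function name `concepts_from_index` is hypothetical. The sample dictionary is hand-built so the sketch runs without iknowpy installed.

```python
from typing import Any


def concepts_from_index(index: dict[str, Any]) -> list[str]:
    """Collect the concept entities from an iknowpy-style index.

    Assumption: `index` is shaped like iKnowEngine.m_index, i.e. a dict
    with a "sentences" list; each sentence holds an "entities" list, and
    each entity has a "type" (such as "Concept" or "Relation") and an
    "index" field with the entity text.
    """
    concepts = []
    for sentence in index.get("sentences", []):
        for entity in sentence.get("entities", []):
            # Keep concepts only; relations and other entity types are skipped.
            if entity.get("type") == "Concept":
                concepts.append(entity["index"])
    return concepts


# Hand-built sample in the assumed layout (not real engine output).
sample_index = {
    "sentences": [
        {
            "entities": [
                {"type": "Concept", "index": "patients"},
                {"type": "Relation", "index": "with"},
                {"type": "Concept", "index": "viral sinusitis"},
            ]
        }
    ]
}

print(concepts_from_index(sample_index))  # ['patients', 'viral sinusitis']
```

With iknowpy installed, a real index would come from three steps:

- create `engine = iknowpy.iKnowEngine()`
- call `engine.index(log_text, "en")`
- pass `engine.m_index` to the function

Storing each returned concept together with the ID of its log entry gives the kind of table a cube can aggregate.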

We then created an IRIS BI cube that counts these concepts and relates them to other dimensions, such as log types and descriptions.
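Conceptually, counting concepts per dimension member works like a grouped count. The plain-Python sketch below is not the cube definition. The `(log_type, concept)` row layout, the `count_concepts_by_type` name, and the sample log types are hypothetical stand-ins for whatever the project's concept table actually stores.

```python
from collections import Counter, defaultdict


def count_concepts_by_type(rows: list[tuple[str, str]]) -> dict[str, Counter]:
    """Count concept occurrences per log type.

    Each row is a hypothetical (log_type, concept) pair, similar to a fact
    row relating one log entry to one of its extracted concepts.
    """
    counts: dict[str, Counter] = defaultdict(Counter)
    for log_type, concept in rows:
        counts[log_type][concept] += 1
    # Convert to a plain dict so missing log types raise KeyError.
    return dict(counts)


rows = [
    ("prompt", "patients"),
    ("prompt", "diabetes"),
    ("prompt", "patients"),
    ("error", "bad request"),
]
by_type = count_concepts_by_type(rows)
print(by_type["prompt"]["patients"])  # 2
```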

Once some prompts have been answered, you are ready to build the cube. You can do this from the Cube Manager by clicking the Build button, or programmatically:

```objectscript
ZN "USER"
Do ##class(%DeepSee.Utils).%BuildCube("LogAnalyticsCube")
```

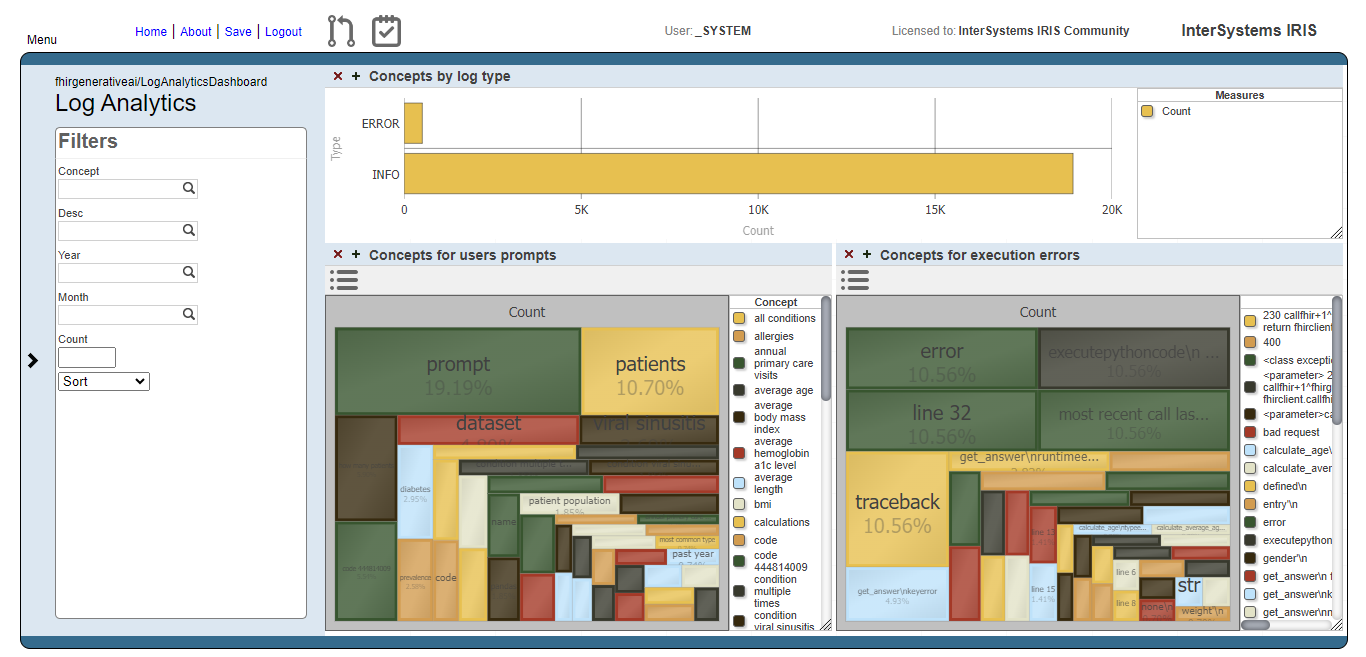

With this cube, we create a dashboard which people can get insights about how the prompts are going in terms of what users are asking and if those prompts are beeing executed or not.

Fig.1 - Log Analytics Dashboard

Users prompts analysis

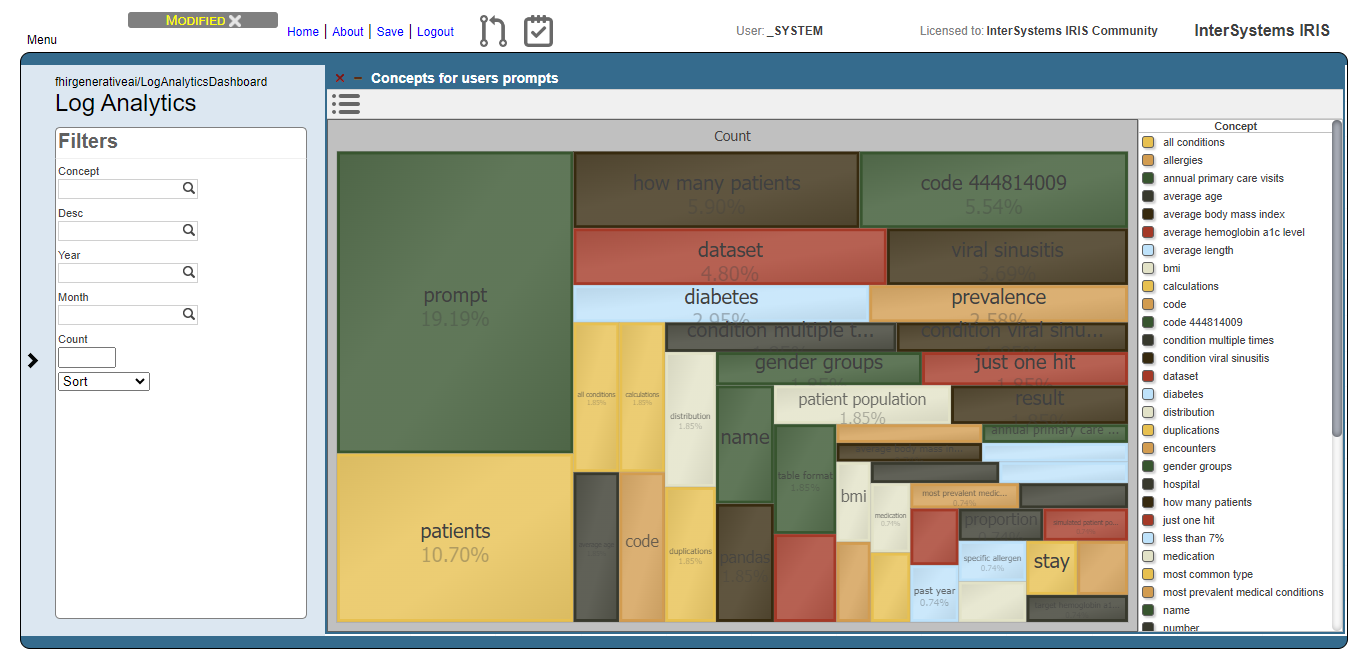

The image below shows the result of the users prompts analysis after running the methods DoAccuracyTests and DoAccuracyExtendedSetTests() of the class fhirgenerativeai.Tests(). It uses a treemap to show the most prevelent concepts.

Fig.2 - Detail of users prompts

As you can see, the most prevelent concepts are meaningless concepts like prompt, code, dataset etc.

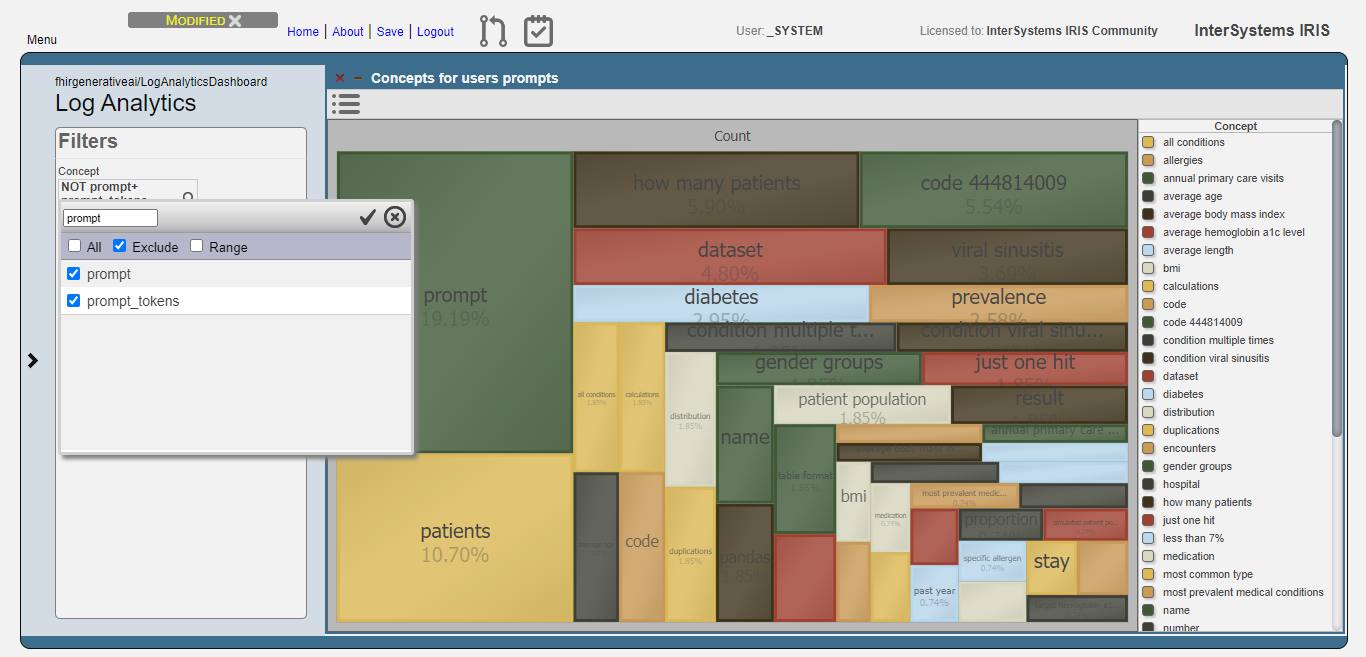

Let's exclude these concepts from the analysis:

Fig.3 - Exclusion of meaningless concepts

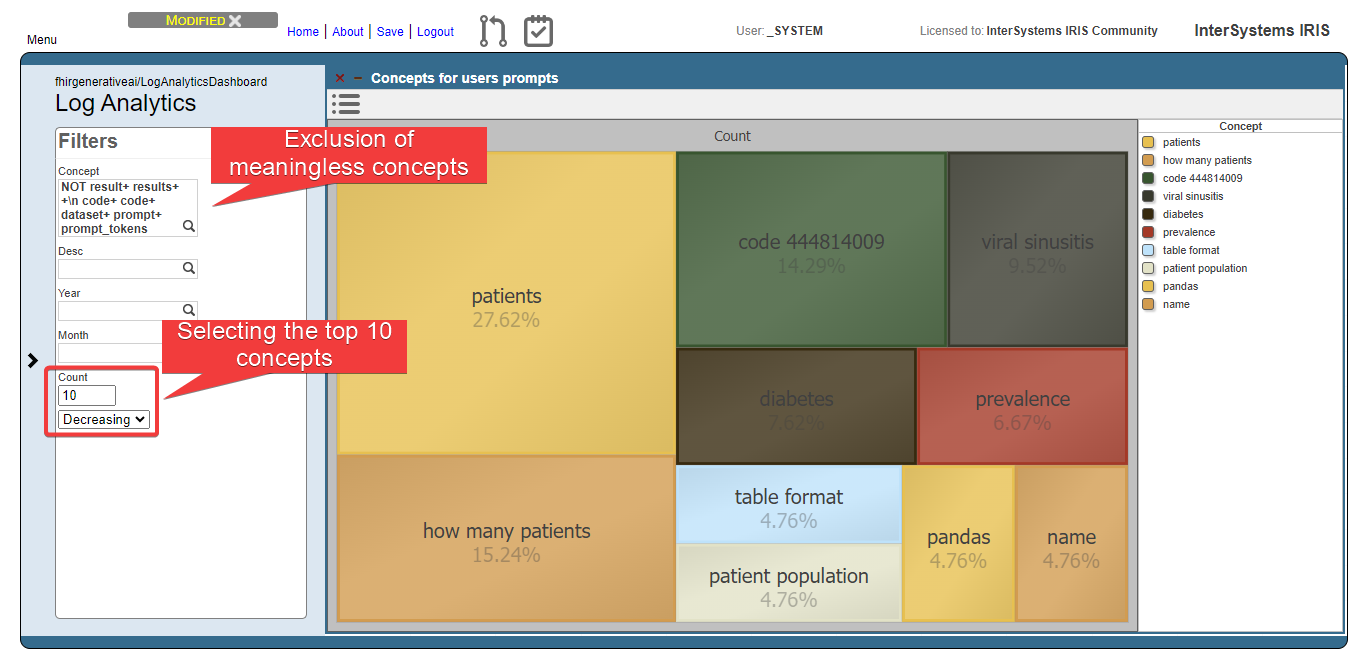

Then, get the top 10 concepts:

Fig.4 - Top 10 concepts for users prompts

Now we can see that users are asking questions about patients and about conditions such as viral sinusitis and diabetes. These insights show system administrators what users expect, so they can act to meet those needs.
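The two dashboard steps used above, excluding meaningless concepts and then keeping the top 10, amount to a filter followed by a ranked count. This is a minimal sketch, assuming the extracted concepts are available as a plain list of strings. The `top_concepts` helper, the exclusion set, and the sample data are hypothetical, since the article performs these steps in the dashboard itself.

```python
from collections import Counter

# Hypothetical set of generic concepts to ignore, modeled on the kind of
# concepts excluded in the dashboard ("prompt", "code", "dataset").
STOP_CONCEPTS = frozenset({"prompt", "code", "dataset"})


def top_concepts(concepts: list[str], n: int = 10,
                 exclude: frozenset[str] = STOP_CONCEPTS) -> list[tuple[str, int]]:
    """Drop excluded concepts, then return the n most frequent ones."""
    counts = Counter(c for c in concepts if c not in exclude)
    # most_common returns (concept, count) pairs sorted by descending count.
    return counts.most_common(n)


concepts = ["prompt", "patients", "code", "diabetes", "patients",
            "viral sinusitis", "dataset", "patients", "diabetes"]
print(top_concepts(concepts, n=2))  # [('patients', 3), ('diabetes', 2)]
```

`Counter.most_common(n)` already returns pairs sorted by descending count, so no separate sort step is needed. Passing a different `exclude` set lets the same helper serve the execution errors analysis.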

Execution errors analysis

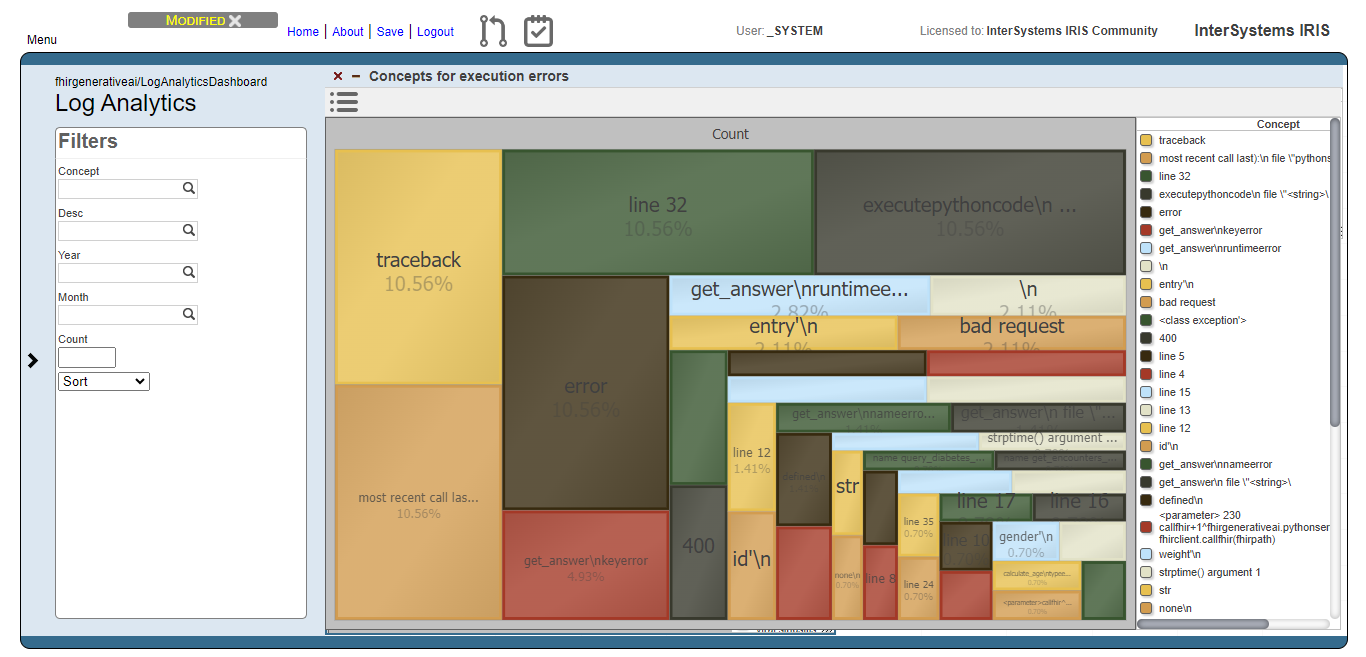

For the execution errors analysis, we have the same visualization as the users prompts. But now, displaying concepts related to execution errors.

Fig.5 - Details of execution errors

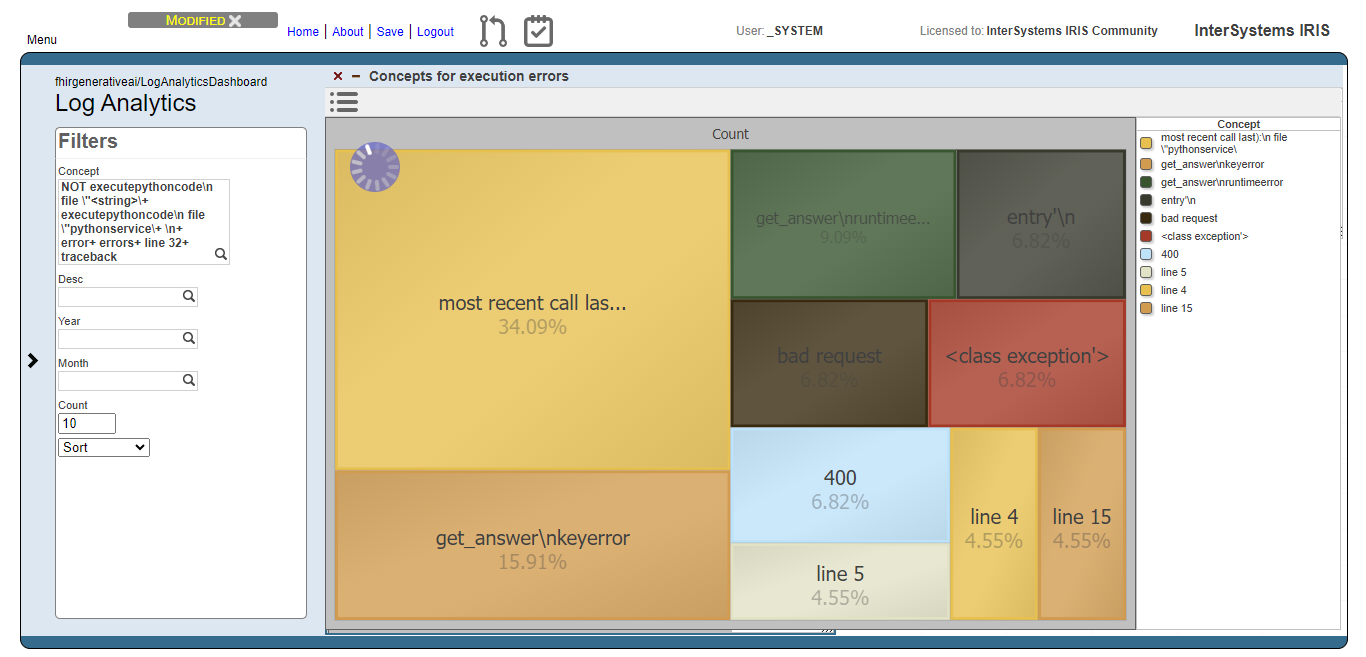

And like for the users prompts analysis, we exclude meaningless concepts and got just the top 10 concepts:

Fig.6 - Top 10 concepts for execution errors

Now we can see, for instance, that concepts such as "bad request" and "400" (the HTTP status code for a Bad Request error) are prominent. This indicates that the AI model tends to generate code that sends invalid FHIR requests.
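One way to quantify a reading like this is to measure the share of error log entries that mention any of the concepts in question. This is a minimal sketch, assuming each error log entry has already been reduced to its set of extracted concepts. The `share_of_logs_with` helper and the sample entries are hypothetical, not project data.

```python
def share_of_logs_with(logs: list[set[str]], targets: set[str]) -> float:
    """Fraction of log entries whose concepts include at least one target."""
    if not logs:
        return 0.0
    # A non-empty set intersection means the entry mentions a target concept.
    hits = sum(1 for concepts in logs if concepts & targets)
    return hits / len(logs)


# Hypothetical error log entries, each reduced to its concept set.
error_logs = [
    {"bad request", "400", "observation"},
    {"400", "patient"},
    {"timeout"},
    {"bad request", "condition"},
]
print(share_of_logs_with(error_logs, {"bad request", "400"}))  # 0.75
```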