InterSystems IRIS Parquet file support

Hi community,

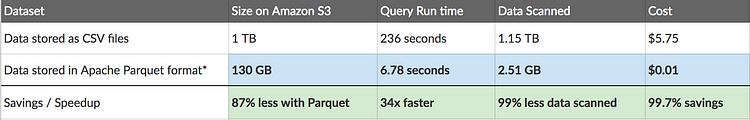

The parquet file format is the evolution of the CSV file format. See the differences when you process data in the AWS using CSV and Parquet:

Would you like to have support for Parquet files in InterSystems IRIS?

Comments

Can you elaborate on what it would mean to support Parquet? The diagram appears to have come from this post:

https://blog.openbridge.com/how-to-be-a-hero-with-powerful-parquet-google-and-amazon-f2ae0f35ee04

In that context, the query run time refers to acting on the file directly with something like Amazon Athena. Do you have in mind importing the file (which is what an IRIS user would currently do with CSV), or somehow querying the file directly?

Support features:

1 - Convert a Parquet file to an ObjectScript dynamic array and vice versa.

2 - A PEX business service to get Parquet data.

3 - A PEX business operation to write Parquet data.

OK, what follows is from my limited knowledge, so it may need correcting.

The base file format is simple. The complication is the compression methods (which include encoding): they are part of the actual file format specification, so all of them have to be supported.

I have issues with some of the speed claims. They seem to stem from statements that the column-based layout, as opposed to a row-based one, means a reader doesn't have to read the whole file. That benefit is pretty limited: as soon as you need to look at a single record and its last field, you still have to read through the whole file. It also ignores that, for most of my usage of CSV files, I have needed to ingest the whole file anyway.

Any other speed improvements are likely due to the code and database ingesting or searching the data, not the format itself.

That doesn't mean there aren't advantages. It may be easier to write more efficient code, and the built-in compression can reduce time and costs.
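To make the row versus column layout point concrete, here is a toy sketch in plain Python. It is not real Parquet: actual Parquet files organize data into row groups and column chunks with footer metadata, and the sample data and function names below are made up for illustration. It only shows how many field values each layout has to touch when a query needs a single column.

```python
import csv
import io

# Hypothetical sample data, used only for this illustration.
rows = [
    {"id": 1, "name": "alice", "amount": 10.5},
    {"id": 2, "name": "bob", "amount": 20.0},
    {"id": 3, "name": "carol", "amount": 7.25},
]

# Row-oriented layout (CSV-like): the values of one record sit together.
buf = io.StringIO()
writer = csv.DictWriter(buf, fieldnames=["id", "name", "amount"])
writer.writeheader()
writer.writerows(rows)
csv_text = buf.getvalue()


def sum_amount_row_layout(text):
    """Sum 'amount' from CSV text; every field of every row gets parsed."""
    total = 0.0
    fields_parsed = 0
    for record in csv.DictReader(io.StringIO(text)):
        fields_parsed += len(record)
        total += float(record["amount"])
    return total, fields_parsed


# Column-oriented layout (Parquet-like): the values of one column sit together.
columns = {name: [r[name] for r in rows] for name in rows[0]}


def sum_amount_column_layout(cols):
    """Sum 'amount' by touching only that column's values."""
    values = cols["amount"]
    return sum(values), len(values)


if __name__ == "__main__":
    print(sum_amount_row_layout(csv_text))
    print(sum_amount_column_layout(columns))
```

When the whole file has to be ingested anyway, both layouts touch every value, which matches the caveat above.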

So the question is how popular this format is. Well, it's pretty new and so hasn't made great inroads as yet. However, given InterSystems' interest in "Big Data" and ML, and its integration with Spark etc., it does make sense, as it is part of Hadoop and Spark.

One thing to note, though, is that Python already supports this through the PyArrow package, so this might be the best solution: simply use embedded Python to process the files.

Ok, thanks

Implemented! See it: https://openexchange.intersystems.com/package/iris-parquet