The continuing convergence of AI technologies and healthcare systems has produced many compelling advancements. Let's set the scene. If you have interacted with conversational models like ChatGPT, you may, like many of us, have begun to envision applying them to your own datasets. Suppose that in the healthcare sector you wish to link this technology with Electronic Health Records (EHR) or Electronic Medical Records (EMR), or perhaps you aim for greater interoperability using FHIR resources. It all boils down to how we pass contextual data to, and receive it from, the LLMs available in the market.

The more accurate techniques include fine-tuning, that is, further training an LLM on your own context datasets. However, this can be very costly today, potentially running into millions of dollars for large models. The other way is to feed context to LLMs via one-shot or few-shot queries and get an answer. Some ways to achieve this are generating SQL queries, generating code to query or parse data, and making calls with information from API specifications. However, these approaches can consume a lot of tokens, and some of the answers may not always be accurate.

There’s no one-size-fits-all magic here, but understanding the pros and cons of these techniques can help you devise your own strategy. Leveraging good engineering practices (such as caches and secondary storage) and focusing on problem solving can also help you find a balance between the available methods. This post is an attempt to share some strategies and compare them across different metrics.

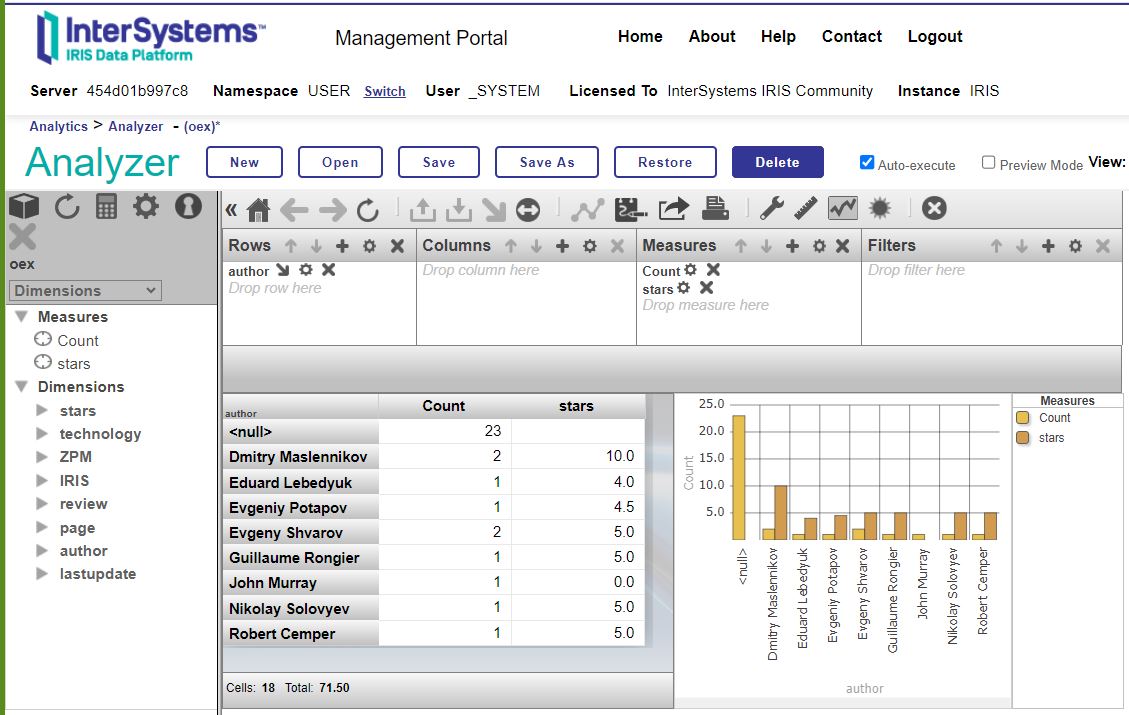

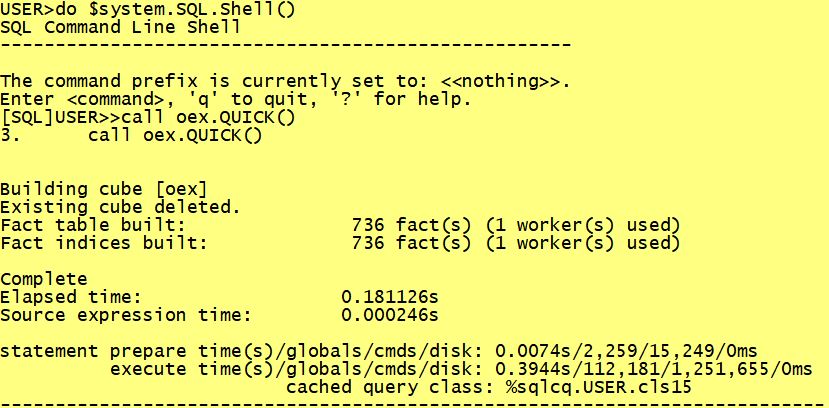

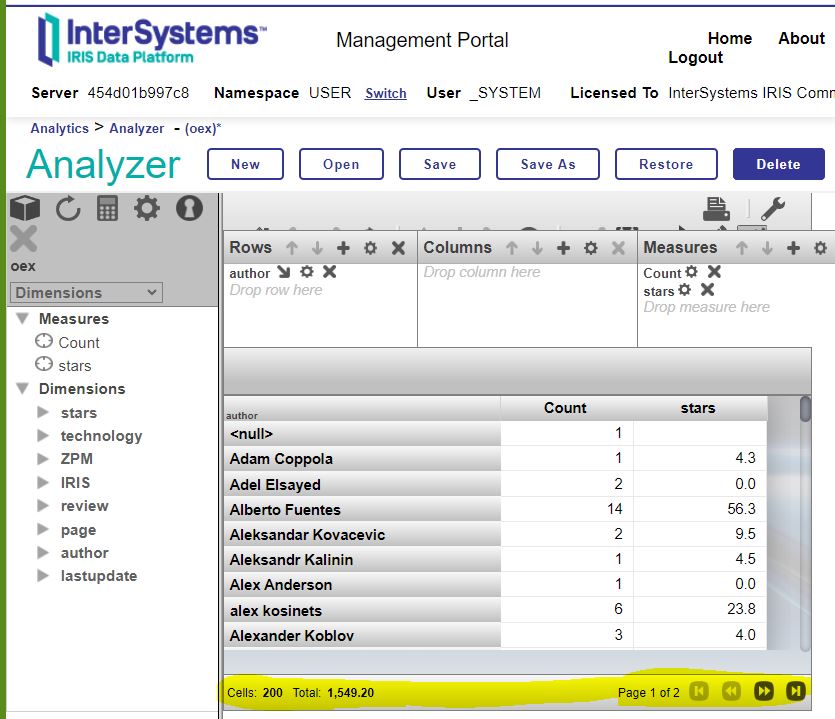

Generating SQL queries

First, we have the more conventional method: loading and parsing the SQL database structure and sample content through LangChain, then executing GPT queries. This method has a track record of enabling efficient and dynamic communication with healthcare systems, which makes it a tried-and-true technique in our industry.

There are solutions that pass just the database structure (the table schema, for example) and others that also pass some redacted data to help the LLM generate accurate queries. The former has the advantage of fixed token usage and predictable costs, but takes a hit on accuracy because it is not completely context-aware. The latter can be more token intensive and needs special care with anonymization techniques. These solutions might be perfect for some use-cases, but could there be a better strategy?
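The difference between the two variants can be sketched with the standard-library `sqlite3` module. This is a minimal illustration, not the method used by LangChain or by any particular product: the `patient` table, the `build_sql_prompt` helper and the `<redacted>` marker are all assumptions made for the example, and the prompt is only assembled, not sent to a model.

```python
import sqlite3


def build_sql_prompt(conn, question, sample_rows=0, redact=()):
    """Assemble prompt text from the table schema and optional redacted rows."""
    parts = ["Write one SQLite SELECT statement that answers the question.", "Schema:"]
    tables = conn.execute(
        "SELECT name, sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for name, ddl in tables:
        parts.append(ddl)
        if sample_rows > 0:
            cursor = conn.execute(f'SELECT * FROM "{name}" LIMIT {int(sample_rows)}')
            columns = [col[0] for col in cursor.description]
            parts.append(f"Sample rows from {name}:")
            for row in cursor:
                # Mask every column listed in `redact` before it leaves the system.
                masked = ["<redacted>" if c in redact else repr(v) for c, v in zip(columns, row)]
                parts.append(", ".join(f"{c}={v}" for c, v in zip(columns, masked)))
    parts.append(f"Question: {question}")
    return "\n".join(parts)


# Hypothetical in-memory table standing in for an EHR database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patient (id INTEGER PRIMARY KEY, name TEXT, birth_year INTEGER)")
conn.execute("INSERT INTO patient VALUES (1, 'Jane Doe', 1980)")

question = "How many patients were born before 1990?"
schema_only = build_sql_prompt(conn, question)
with_samples = build_sql_prompt(conn, question, sample_rows=1, redact={"name"})
```

The schema-only prompt has a size that depends only on the table definitions, while the second prompt grows with `sample_rows` and relies on the `redact` set being complete, which is where the anonymization care comes in.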

Using LLMs to generate code to navigate APIs and database queries

Another sophisticated technique is to let the LLM generate code that breaks a question down into multiple queries or API calls. This is a very natural way of solving complicated questions, and it unleashes the power of combining natural language with the underlying code.

This solution requires good prompt engineering and careful tuning of the template prompts to work well for all corner cases. Fitting it into an enterprise context can be challenging because of the uncertainties in token usage, secure code generation, and controlling the boundaries of what is and is not accessible by the generated code. But taken in its entirety, the power of this technique to act autonomously to solve complex problems is fascinating, and further advances in this area are something to look forward to.
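One way to think about the boundary problem is to have the model emit a plan of API calls and check that plan against an allowlist before anything runs. The sketch below is only an illustration of that idea: the `Step` type, the `ALLOWED` set and the `check_plan` helper are hypothetical names, and the plan is hard-coded rather than produced by a model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    method: str
    path: str


# Hypothetical allowlist: the only operations generated code may perform.
ALLOWED = {
    ("GET", "/Patient"),
    ("GET", "/Observation"),
}


def check_plan(steps, allowed=ALLOWED):
    """Return the steps that fall outside the allowlist (empty means safe to run)."""
    rejected = []
    for step in steps:
        # Reduce "/Patient/123?x=y" to its resource root, "/Patient".
        resource = "/" + step.path.split("?")[0].strip("/").split("/")[0]
        if (step.method.upper(), resource) not in allowed:
            rejected.append(step)
    return rejected


# A plan as a model might produce it for a multi-step question.
plan = [
    Step("GET", "/Patient?name=smith"),
    Step("GET", "/Observation?patient=123"),
    Step("DELETE", "/Patient/123"),
]
rejected = check_plan(plan)
```

Here the two read-only steps pass and the `DELETE` step is rejected, so the caller can refuse the whole plan instead of executing it partially.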

Loading OpenAPI specs as context to LLMs

Our team wanted to try a different approach that controls token usage while still leveraging the available context to get accurate results. How about employing LangChain to load and parse FHIR’s OpenAPI specifications? OpenAPI is a compelling alternative: it describes APIs in an adaptive, standardized way, which complements FHIR's comprehensive API standards. Its distinct advantage lies in promoting effortless data exchange between diverse systems. The control here lies in being able to modify the specifications themselves, rather than the prompts or the outputs generated by the LLM.
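To make the idea of controlling context through the specification concrete, here is a small sketch that flattens an OpenAPI document into one line per permitted operation. It does not use LangChain; the `summarize_spec` helper and the spec fragment are hypothetical and far smaller than a real FHIR server's specification.

```python
def summarize_spec(spec, allowed_methods=("get",)):
    """Flatten an OpenAPI dict into one short line per permitted operation."""
    lines = []
    for path, operations in sorted(spec.get("paths", {}).items()):
        for method, op in operations.items():
            if method not in allowed_methods:
                continue  # operations left out here never reach the model
            params = ", ".join(p["name"] for p in op.get("parameters", []))
            lines.append(f"{method.upper()} {path} ({params}): {op.get('summary', '')}")
    return "\n".join(lines)


# Hypothetical fragment of an OpenAPI document for a FHIR server.
spec = {
    "openapi": "3.0.0",
    "paths": {
        "/Patient": {
            "get": {
                "summary": "Search patients",
                "parameters": [
                    {"name": "name", "in": "query"},
                    {"name": "birthdate", "in": "query"},
                ],
            },
            "post": {"summary": "Create a patient"},
        },
        "/Patient/{id}": {
            "delete": {"summary": "Delete a patient"},
        },
    },
}

context = summarize_spec(spec)
```

Because the context is derived from the specification, removing or adding an operation in the spec (or in `allowed_methods`) changes what the model can see and use, without touching the prompt template.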

Imagine the scenario: a POST API performs all required validation checks before data is added to the database. Now, envision leveraging that same POST API through a natural-language interface. It still carries out the same rigorous checks, ensuring consistency and reliability. This property of OpenAPI doesn't just simplify interactions with healthcare services and applications; it also makes the APIs easier to understand and more predictable.
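The scenario above can be sketched as two entry points that share one validating handler. Everything here is an assumption made for illustration: `create_patient` stands in for a POST endpoint, its date check is a simplified rule rather than the full FHIR definition, and `fake_llm` is a stub that returns a fixed draft instead of calling a model.

```python
import json
import re


class ValidationError(ValueError):
    """Raised when a payload fails the endpoint's checks."""


def create_patient(payload, store):
    """Hypothetical POST /Patient handler: validate first, then persist."""
    if payload.get("resourceType") != "Patient":
        raise ValidationError("resourceType must be 'Patient'")
    birth_date = payload.get("birthDate")
    # Simplified date check (YYYY, YYYY-MM or YYYY-MM-DD), for illustration only.
    if birth_date is not None and not (
        isinstance(birth_date, str)
        and re.fullmatch(r"\d{4}(-\d{2}(-\d{2})?)?", birth_date)
    ):
        raise ValidationError("birthDate is not a valid date")
    store.append(payload)
    return len(store)  # stand-in for the new resource id


def create_from_text(text, llm, store):
    """Natural-language entry point: the model only drafts the payload."""
    draft = json.loads(llm(text))
    return create_patient(draft, store)  # same checks as a direct API call


def fake_llm(text):
    # Stub standing in for a real model call; returns a deliberately bad draft.
    return json.dumps({"resourceType": "Patient", "birthDate": "1980-02-30x"})


store = []
try:
    create_from_text("Add a patient born in February 1980", fake_llm, store)
    outcome = "stored"
except ValidationError as err:
    outcome = f"rejected: {err}"
```

The malformed draft is rejected by the same check that a direct API caller would hit, so nothing reaches the store.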

We understand that this solution doesn’t hold the same power as autonomously breaking down tasks or generating code, but it is an attempt to arrive at a more practical solution that can be adapted quickly for most use-cases.

Comparison

While all these techniques demonstrate unique benefits and the potential to serve different purposes, let us evaluate their performance against some metrics.

1. Reliability - Reliability is a priority when working with AI, and OpenAPI has an edge here because it relies on standardized APIs. Requests go through the API's authentication and authorization, which restricts unauthorized access and limits each user to specific data. This provides better data security than passing AI-generated SQL to the DB directly, a method that could raise reliability concerns.

2. Cost - The filtering capabilities that FHIR defines for its APIs play a role in cost reduction. Combined with careful prompt engineering, they allow only the necessary data to be exchanged, unlike traditional DB queries that may return more records than needed and drive costs up unnecessarily.

3. Performance - The structured and standardized presentation of data in OpenAPI specifications often contributes to better output from GPT-4 models, enhancing performance. However, SQL DBs can return results more quickly for direct queries. It is also important to account for OpenAPI's potential to over-inform, since a specification may define more parameters than a query needs.

4. Interoperability - OpenAPI specifications shine when it comes to interoperability. Being platform-independent, they align perfectly with FHIR's mission to boost interoperability in healthcare, fostering a collaborative environment for seamless synchronization with other systems.

5. Implementation & Maintenance - Spinning up a DB and providing its context to the AI for querying may seem easier to implement, since the SQL database loading method has a lean control layer. However, OpenAPI specifications, once mastered, offer benefits like standardization and easier maintenance that outweigh the initial learning and implementation effort.

6. Scalability and Flexibility - SQL databases demand a rigid schema, which can limit scalability and flexibility. OpenAPI offers a more adaptive and scalable solution, making it a more future-proof alternative.

7. Ethics and Concerns - An important yet complex factor to consider, given the rapid growth of AI. Would you be comfortable providing direct DB access to customers, even with filters and auth? Reflect on the importance of data de-identification in ensuring privacy within the healthcare space. Even though both OpenAPI and SQL databases have mechanisms to address these concerns, the inherent standardization provided by OpenAPI adds an additional layer of security.
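As a small illustration of the cost point (item 2), a FHIR search can ask the server for fewer rows and fewer fields through the standard `_count` and `_elements` search parameters, so less data ends up in the prompt. This is a sketch under assumptions: the server URL and the `build_search_url` helper are hypothetical, the code value is just an example, and support for `_elements` varies between servers.

```python
from urllib.parse import urlencode


def build_search_url(base_url, resource, filters, elements=(), count=None):
    """Build a FHIR search URL that asks only for the fields and rows needed."""
    params = dict(filters)
    if elements:
        params["_elements"] = ",".join(elements)  # limit returned fields
    if count is not None:
        params["_count"] = str(count)  # limit page size
    # Keep commas readable instead of percent-encoding them.
    return f"{base_url.rstrip('/')}/{resource}?{urlencode(params, safe=',')}"


url = build_search_url(
    "https://fhir.example.org/r4",  # hypothetical server
    "Observation",
    {"patient": "123", "code": "8867-4"},  # example filter values
    elements=("code", "valueQuantity", "effectiveDateTime"),
    count=5,
)
```

A comparable SQL query can of course select specific columns and limit rows too; the difference is that here the narrowing is expressed through parameters the API already defines and validates.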

While this discussion offers insights into some of the key factors to consider, it's essential to recognize that the choice between SQL, code generation and OpenAPI is multifaceted and subject to the specific requirements of your projects and organizations.

Please feel free to share your thoughts and perspectives on this topic - perhaps you have additional points to suggest or you'd like to share some examples that have worked best for your use-case.

Vote for our app in the Grand Prix contest if you find it promising!
