DataPipe: a data ingestion framework

Hi all!

I'm sharing a tool for data ingestion that we have used in some projects.

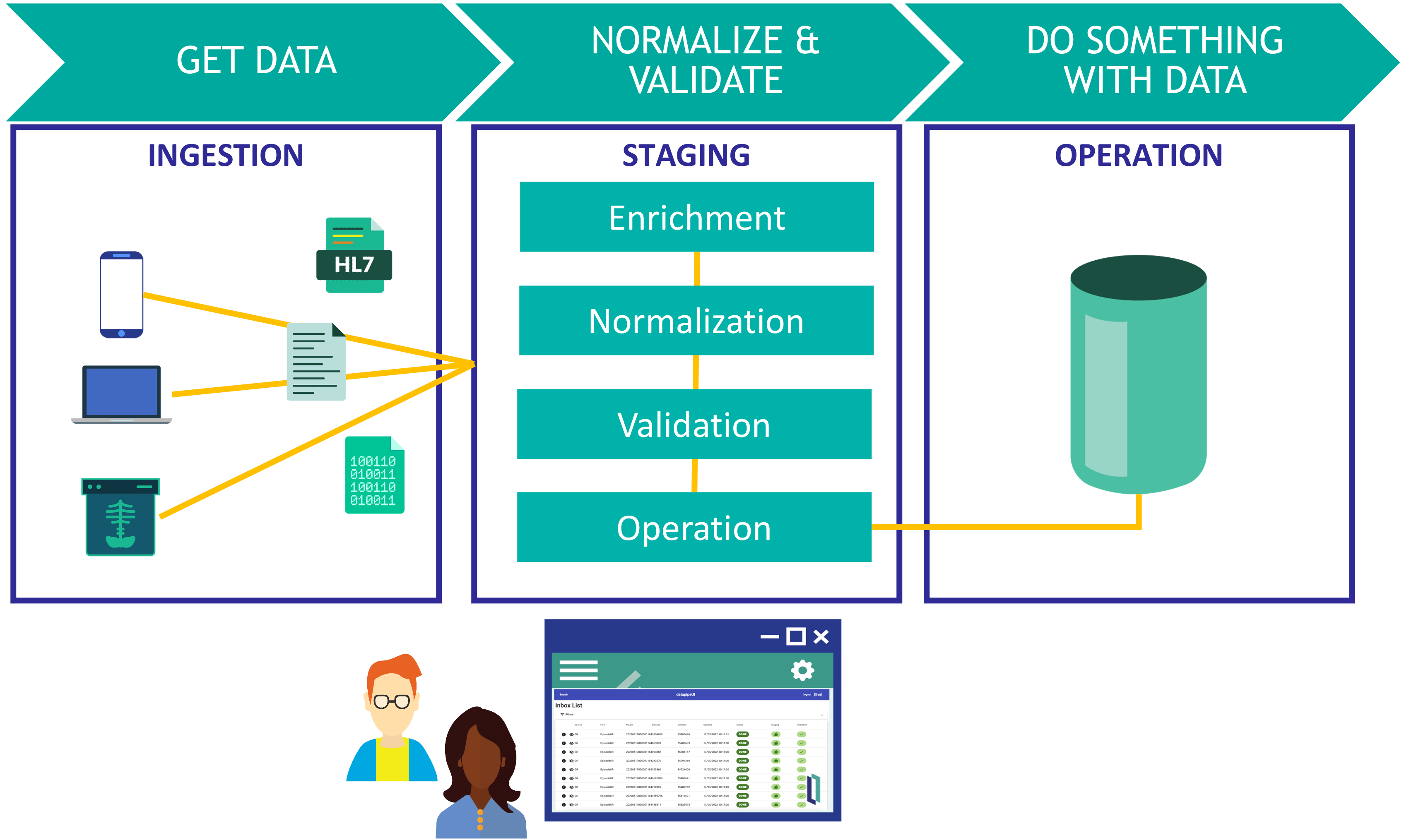

DataPipe is an interoperability framework for flexible data ingestion in InterSystems IRIS. It lets you receive data from external sources, normalize and validate it, and finally perform whatever operation you need with it.

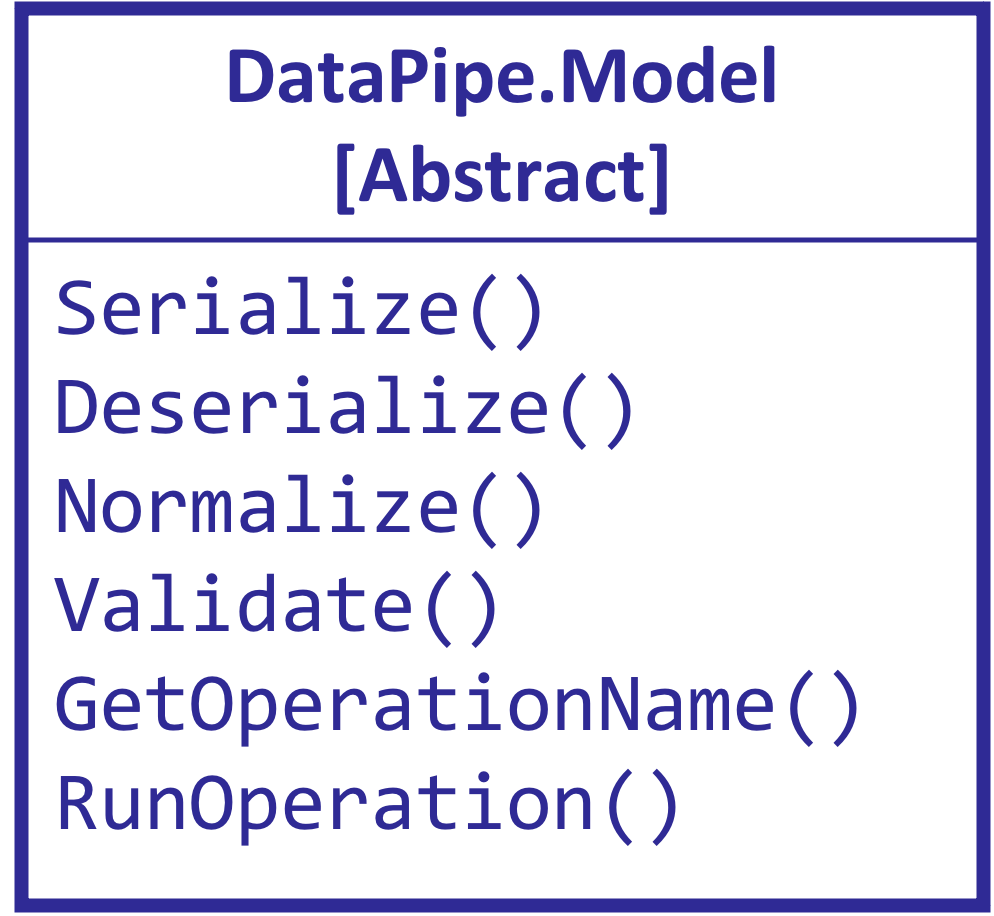

Model

First, you need to define a model. A model is simply a class that extends DataPipe.Model and implements a few methods.

In the model you specify how to serialize and deserialize the data, how to normalize and validate it, and finally what operation to perform once the data is normalized and validated.
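To make the model's responsibilities more concrete, here is a rough conceptual sketch in Python. It is not DataPipe's API: real models are InterSystems IRIS classes that extend DataPipe.Model, and every name below (`PersonModel`, `normalize`, `validate`, `run_operation`, `ingest`) is a hypothetical stand-in chosen only to illustrate the deserialize, normalize, validate, operate sequence described above.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class PersonModel:
    """Hypothetical model; an illustration only, not DataPipe's actual API."""
    name: str = ""
    birth_date: str = ""
    errors: list = field(default_factory=list)

    def serialize(self) -> str:
        # Turn the model into a string so it can be stored or re-sent later.
        return json.dumps({"name": self.name, "birth_date": self.birth_date})

    @classmethod
    def deserialize(cls, raw: str) -> "PersonModel":
        # Rebuild a model from its serialized form.
        data = json.loads(raw)
        return cls(name=data.get("name", ""), birth_date=data.get("birth_date", ""))

    def normalize(self) -> None:
        # Clean up the values before validating them.
        self.name = " ".join(self.name.split()).title()
        self.birth_date = self.birth_date.strip()

    def validate(self) -> bool:
        # Collect every problem found instead of stopping at the first one.
        self.errors = []
        if not self.name:
            self.errors.append("name is required")
        try:
            datetime.strptime(self.birth_date, "%Y-%m-%d")
        except ValueError:
            self.errors.append("birth_date must be YYYY-MM-DD")
        return not self.errors

    def run_operation(self, store: list) -> None:
        # The final operation: here it just appends to an in-memory list.
        store.append(self.serialize())


def ingest(raw: str, store: list) -> PersonModel:
    """Run the steps in order; the operation only runs on valid data."""
    model = PersonModel.deserialize(raw)
    model.normalize()
    if model.validate():
        model.run_operation(store)
    return model
```

In this sketch, a record that fails validation keeps its list of errors and is never passed to the final operation.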

Here you can find a full example of a DataPipe model.

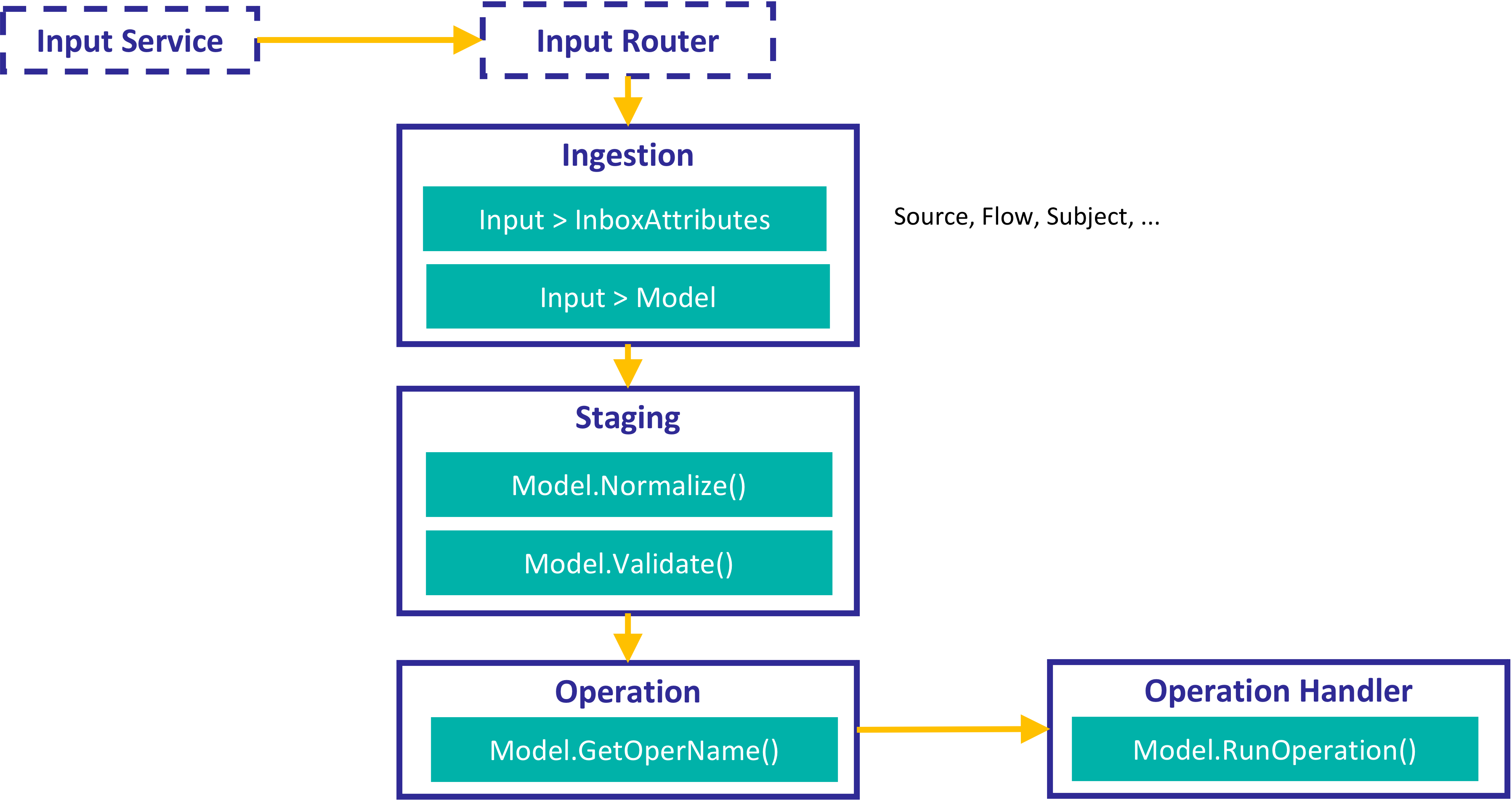

Interoperability components

After defining the model, you can add the components you need to an interoperability production.

You need to build an ingestion process that should include:

- Input > InboxAttributes transformation, where you specify how to extract the attributes that describe your input data. Those attributes can be used to search your processed data.
- Input > Model transformation, where you implement how to convert the incoming data to your DataPipe model.
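The two transformations above can be pictured as two independent functions applied to the same input. The following Python sketch is only an illustration under assumed names: in DataPipe these are transformations in an interoperability production, and the pipe-delimited input format, the attribute keys (`source`, `subject`) and the `PersonModel` fields are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PersonModel:
    """Hypothetical target model for the illustration."""
    name: str
    birth_date: str


def to_inbox_attributes(line: str) -> dict:
    # Extract descriptive attributes used later to search processed data.
    source, name, _birth_date = line.split("|")
    return {"source": source, "subject": name}


def to_model(line: str) -> PersonModel:
    # Convert the same incoming data into the model itself.
    _source, name, birth_date = line.split("|")
    return PersonModel(name=name, birth_date=birth_date)


line = "hospital-A|John Doe|1980-01-02"
attributes = to_inbox_attributes(line)
model = to_model(line)
```

Keeping the two steps separate means the searchable attributes can be extracted without knowing anything about how the model is later normalized or validated.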

The rest of the components are pre-built in DataPipe. You have an example of a production here.

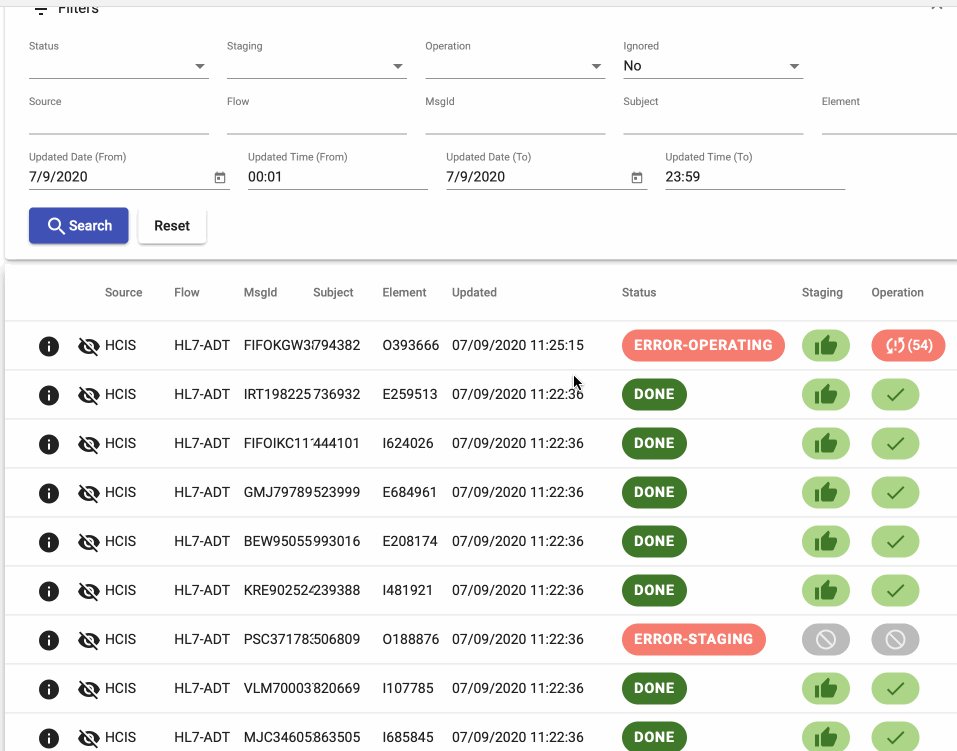

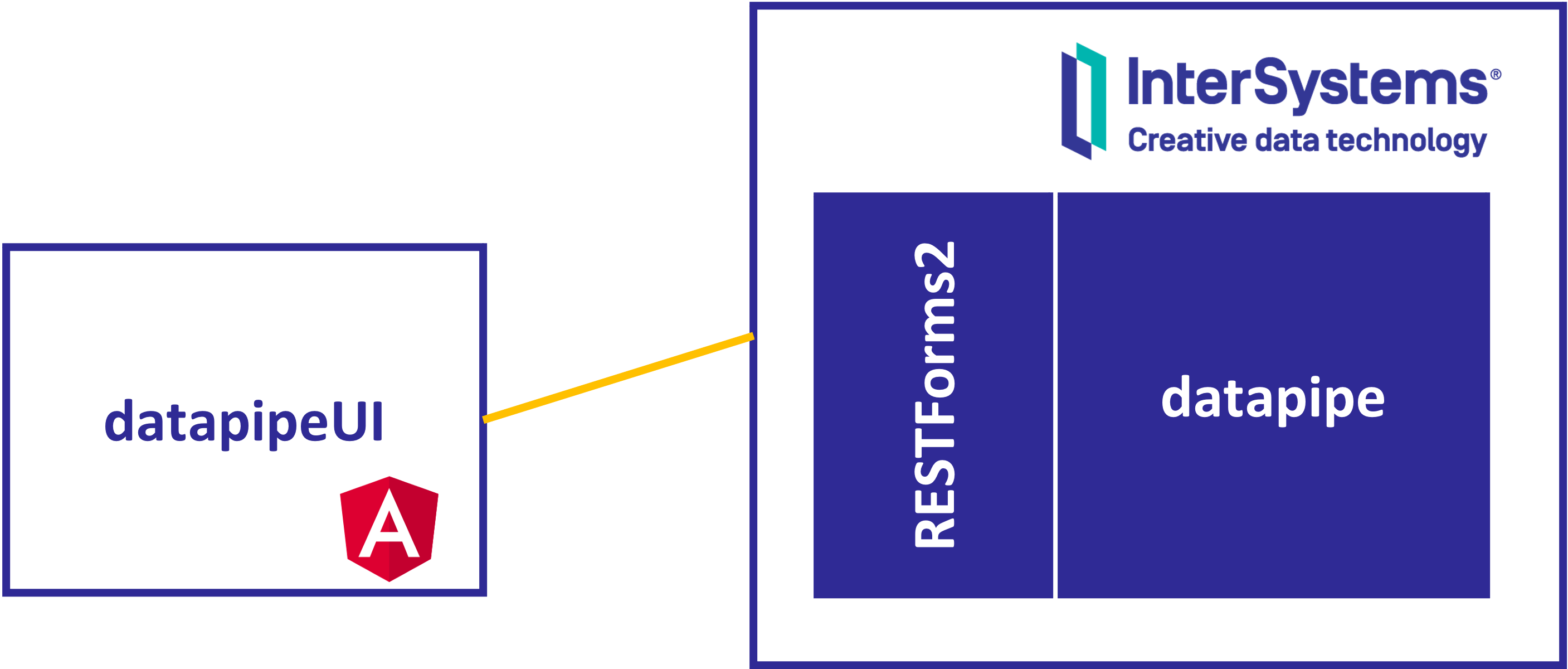

DatapipeUI

There is also a user interface you can use to manage the data you are ingesting into the system.

Deployment

To deploy it, you need an InterSystems IRIS instance where you install DataPipe (and RESTForms2 for the REST APIs), plus an external web application (the UI) that interacts with the instance.

If you are interested and want to have a look and test it, you can find all the information you need in the Open Exchange link.