Ask your IRIS server using an AI Chat

You can now ask your IRIS server questions through an AI chat, or compose other agent applications, to perform tasks such as:

- List the server metrics

- Return intersystems iris server information

- Save the global value Hello to the global name Greetings

- Get the global value Greetings

- Kill the global Greetings

- List the classes on IRIS Server

- Where is intersystems iris installed?

- Return namespace information from the USER

- List the CSP Applications

- List the server files on namespace USER

- List the jobs on namespace %SYS

To do so, get and install the new package langchain-iris-tool (https://openexchange.intersystems.com/package/langchain-iris-tool).

Installation:

$ git clone https://github.com/yurimarx/langchain-iris-tool.git

$ cd langchain-iris-tool

$ docker-compose build

$ docker-compose up -d

Using:

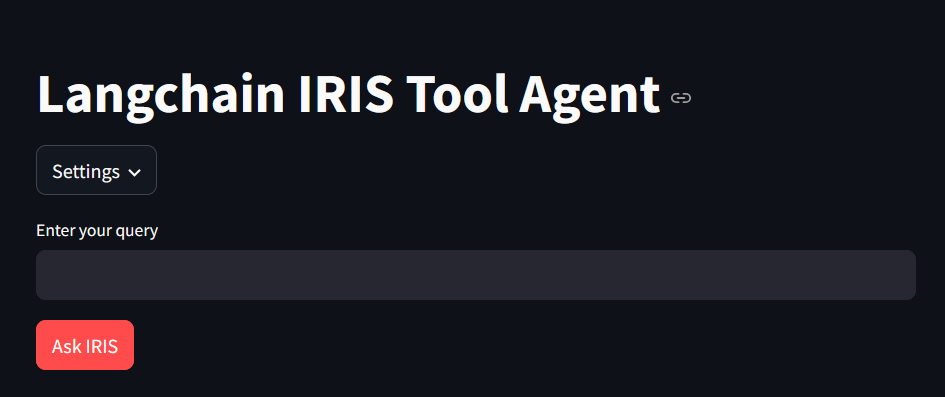

1. Open the URL http://localhost:8501

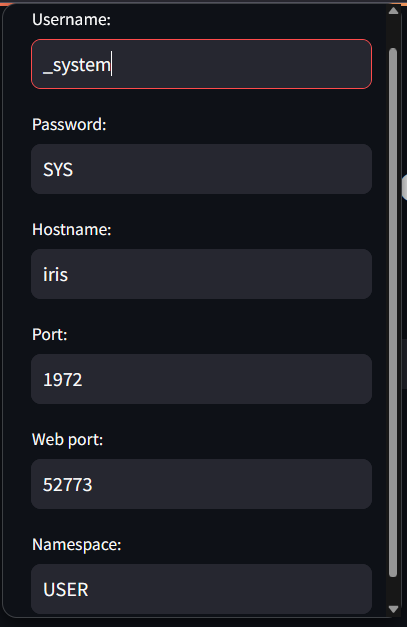

2. Check the Settings button, which holds the connection details the agent uses to connect to InterSystems IRIS.

3. Ask one of the following questions and wait a few seconds to see the results:

- List the server metrics

- Return intersystems iris server information

- Save the global value Hello to the global name Greetings

- Get the global value Greetings

- Kill the global Greetings

- List the classes on IRIS Server

- Where is intersystems iris installed?

- Return namespace information from the USER

- List the CSP Applications

- List the server files on namespace USER

- List the jobs on namespace %SYS
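Behind the chat, each question has to become a structured tool call. As a rough illustration, some of the questions above correspond to arguments like the ones below. The payloads follow the examples in the tool's docstring; the `EXPECTED_CALLS` table and the `expected_call` helper are hypothetical names used only for this sketch. In the application the LLM chooses the arguments, so the actual output may differ.

```python
from typing import Any, Dict

# Hypothetical lookup table, for illustration only: in the application the LLM
# produces these arguments; the package does not store a table like this.
EXPECTED_CALLS: Dict[str, Dict[str, Any]] = {
    "List the server metrics": {"operation": "list_metrics"},
    "Save the global value Hello to the global name Greetings": {
        "operation": "set_global",
        "global_name": "Greetings",
        "global_value": "Hello",
    },
    "Get the global value Greetings": {
        "operation": "get_global",
        "global_name": "Greetings",
    },
    "Kill the global Greetings": {
        "operation": "kill_global",
        "global_name": "Greetings",
    },
    "Where is intersystems iris installed?": {"operation": "install_path"},
    "Return namespace information from the USER": {
        "operation": "get_namespace",
        "namespace": "USER",
    },
}


def expected_call(question: str) -> Dict[str, Any]:
    """Return the illustrative tool arguments for a known question."""
    try:
        return EXPECTED_CALLS[question]
    except KeyError:
        raise ValueError(f"No illustrative payload for: {question!r}") from None


if __name__ == "__main__":
    for question, args in EXPECTED_CALLS.items():
        print(question, "->", args)
```

Each dictionary has the shape the tool accepts: an `operation` key plus the optional fields that operation needs.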

See the results:

Solutions used:

- Ollama - private LLM and NLP Chat tool

- Langchain - platform to build AI agents

- Streamlit - Frontend framework

- InterSystems IRIS as a server to answer the questions about it
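To make the division of labour concrete, here is a minimal sketch of the flow: the LLM proposes a tool call, and the application executes it against the server. It assumes nothing about the real package: `fake_globals`, `run_tool` and `handle` are hypothetical stand-ins, an in-memory dictionary replaces the IRIS server, and the two tool calls are written by hand instead of coming from a model.

```python
from typing import Any, Dict, List

# In-memory stand-in for IRIS globals (assumption: the real tool talks to a
# live InterSystems IRIS server instead of a Python dict).
fake_globals: Dict[str, Any] = {}


def run_tool(args: Dict[str, Any]) -> Any:
    """Execute one tool call against the in-memory stand-in."""
    operation = args.get("operation")
    name = args.get("global_name")
    if operation == "set_global":
        fake_globals[name] = args.get("global_value")
        return None
    if operation == "get_global":
        return fake_globals.get(name)
    if operation == "kill_global":
        fake_globals.pop(name, None)
        return None
    raise ValueError(f"Unsupported operation: {operation}")


def handle(tool_calls: List[Dict[str, Any]]) -> Any:
    """Run the first tool call the model proposed."""
    if not tool_calls:
        raise ValueError("The model did not propose a tool call")
    return run_tool(tool_calls[0]["args"])


# Hand-written stand-ins for what an LLM might return for two chat questions.
save = [{"name": "intersystems_iris",
         "args": {"operation": "set_global",
                  "global_name": "Greetings",
                  "global_value": "Hello"}}]
read = [{"name": "intersystems_iris",
         "args": {"operation": "get_global", "global_name": "Greetings"}}]

handle(save)
print(handle(read))  # prints Hello
```

The frontend only needs the `args` of the first proposed call; everything else (choosing the operation, filling in the global name) is left to the model.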

About Ollama

Ollama is a free, on-premises LLM solution that lets you run Generative AI with privacy and security, because your data is processed on-premises only. The Ollama project supports many models, including mistral, Open AI models, Deepseek models and others, all running on-premises. This package uses Ollama via docker compose with the model mistral:

    ollama:
      image: ollama/ollama:latest
      deploy:
        resources:
          reservations:
            devices:
              - driver: nvidia
                capabilities: ["gpu"]
                count: all  # Adjust count for the number of GPUs you want to use
      ports:
        - 11434:11434
      volumes:
        - ./model_files:/model_files
        - .:/code
        - ./ollama:/root/.ollama
      container_name: ollama_iris
      pull_policy: always
      tty: true
      entrypoint: ["/bin/sh", "/model_files/run_ollama.sh"]  # Loading the finetuned Mistral with the GGUF file
      restart: always
      environment:
        - OLLAMA_KEEP_ALIVE=24h
        - OLLAMA_HOST=0.0.0.0

About Langchain:

Langchain is a framework for building GenAI applications easily. Langchain has the concept of a tool: tools are plug-ins (RAG applications) that Langchain uses to complement the work of the LLMs. This application implements a Langchain tool that lets you ask management and development questions about your IRIS server:

    """InterSystems IRIS tools for interacting with InterSystems IRIS."""

    from typing import Any, Dict, List, Optional, Type, Union, cast

    from langchain_core.callbacks import CallbackManagerForToolRun
    from langchain_core.runnables import RunnableConfig
    from langchain_core.tools import BaseTool
    from langchain_core.tools.base import ToolCall
    from pydantic import BaseModel, Field, PrivateAttr
    import requests
    import yaml
    import iris
    import urllib.parse

    class InterSystemsIRISInput(BaseModel):
        """Input schema for InterSystems IRIS operations."""

        operation: str = Field(
            ...,
            description=(
                "The operation to perform: 'set_global' (Set Global value), 'get_global' "
                "(get global value), 'kill_global' (delete global), 'list_objects' (get available objects), 'describe' "
                "(get object documentation), 'query' (sql query), install_path (get Intersystems IRIS installation path), "
                "class_list (get Intersystems IRIS class list), server_info (get InterSystems IRIS server information), "
                "list_csp (list web/csp applications), list_files (list server/intersystems iris files), "
                "list_metrics(List server/intersystems iris monitoring/metrics), "
                "get_namespace (get information about a namespace), list_jobs (list the jobs on server/intersystems iris namespace), "
                "'create', 'update', or 'delete'"
            ),
        )
        # No trailing comma after Field(...): a trailing comma would turn the
        # default into a one-element tuple instead of a field definition.
        global_name: Optional[str] = Field(
            None,
            description="The InterSystems IRIS global name for the operations get_global and set_global (e.g., 'Contact', 'Account', 'Lead')",
        )
        global_value: Optional[str] = Field(
            None, description="InterSystems IRIS global value for set_global operation"
        )
        query: Optional[str] = Field(
            None, description="The SQL query string for 'query' operation"
        )
        filename: Optional[str] = Field(
            None,
            description="The InterSystems IRIS file name (e.g., 'Contact', 'Account', 'Lead')",
        )
        class_name: Optional[str] = Field(
            None,
            description="The InterSystems IRIS class name (e.g., 'Contact', 'Account', 'Lead')",
        )
        record_data: Optional[Dict[str, Any]] = Field(
            None, description="Data for create/update operations as key-value pairs"
        )
        record_id: Optional[str] = Field(
            None, description="InterSystems IRIS record ID for update/delete operations"
        )

    class InterSystemsIRISTool(BaseTool):
        """Tool for interacting with InterSystems IRIS using intersystems-irispython.

        Setup:
            Install required packages and set environment variables:

            .. code-block:: bash

                pip install intersystems-irispython
                export IRIS_USERNAME="your-username"
                export IRIS_PASSWORD="your-password"
                export IRIS_NAMESPACE="your-namespace"
                export IRIS_PORT="iris-port"
                export IRIS_WEBPORT="iris-webport"
                export IRIS_HOST="iris-host"

        Examples:
            Set/Save the value Hello to the global/object greeting:
                {
                    "operation": "set_global",
                    "global_value": "Hello",
                    "global_name": "greeting"
                }

            Get the global value greeting:
                {
                    "operation": "get_global",
                    "global_name": "greeting"
                }

            Kill/Delete the global greeting:
                {
                    "operation": "kill_global",
                    "global_name": "greeting"
                }

            Describe the class Account:
                {
                    "operation": "describe",
                    "class_name": "Account"
                }

            List server/intersystems iris monitoring/metrics:
                {
                    "operation": "list_metrics"
                }

            List server/intersystems iris files on namespace USER with file name Portal:
                {
                    "operation": "list_files",
                    "namespace": "USER",
                    "filename": "Portal"
                }

            Where is intersystems iris installed?:
                {
                    "operation": "install_path"
                }

            Return namespace information from the USER:
                {
                    "operation": "get_namespace",
                    "namespace": "USER"
                }

            Return information about intersystems iris server:
                {
                    "operation": "server_info"
                }

            List the jobs on namespace USER:
                {
                    "operation": "list_jobs",
                    "query": "USER"
                }

            List the CSP/Web Applications on namespace USER:
                {
                    "operation": "list_csp",
                    "query": "USER"
                }

            Query contacts:
                {
                    "operation": "query",
                    "query": "SELECT TOP 5 Id, Name, Email FROM Contact"
                }

            Create new contact:
                {
                    "operation": "create",
                    "object_name": "Contact",
                    "record_data": {"LastName": "Smith", "Email": "smith@example.com"}
                }
        """

        name: str = "intersystems_iris"
        description: str = (
            "Tool for interacting with InterSystems IRIS"
        )
        args_schema: Type[BaseModel] = InterSystemsIRISInput

        _iris: iris.IRIS = PrivateAttr()
        _conn: iris.IRISConnection = PrivateAttr()
        _username: str = PrivateAttr()
        _password: str = PrivateAttr()
        _namespace: str = PrivateAttr()
        _host: str = PrivateAttr()
        _port: int = PrivateAttr()
        _webport: int = PrivateAttr()

        def __init__(
            self,
            username: str,
            password: str,
            hostname: str,
            port: int,
            webport: int,
            namespace: str,
        ) -> None:
            """Initialize iris connection."""
            super().__init__()
            self._conn = iris.connect(hostname + ":" + str(port) + "/" + namespace, username=username, password=password, sharedmemory=False)
            self._iris = iris.createIRIS(self._conn)
            self._username = username
            self._password = password
            self._namespace = namespace
            self._host = hostname
            self._port = port
            self._webport = webport

        def _run(
            self,
            operation: str,
            global_name: Optional[str] = None,
            global_value: Optional[Any] = None,
            query: Optional[str] = None,
            filename: Optional[str] = None,
            class_name: Optional[str] = None,
            namespace: Optional[str] = "%SYS",
            record_data: Optional[Dict[str, Any]] = None,
            record_id: Optional[str] = None,
            run_manager: Optional[CallbackManagerForToolRun] = None,
        ) -> Union[str, Dict[str, Any], List[Dict[str, Any]]]:
            """Execute InterSystems IRIS operation."""
            try:
                # Base URL of the IRIS web server, used by the REST-based operations
                baseurl = "http://" + self._host + ":" + str(self._webport)

                if operation == "get_global":
                    if not global_name:
                        raise ValueError("Global name is required for 'get_global' operation")
                    return self._iris.get(global_name)

                elif operation == "set_global":
                    if not global_name:
                        raise ValueError("Global name and global value are required for 'set_global' operation")
                    return self._iris.set(global_value, global_name)

                elif operation == "kill_global":
                    if not global_name:
                        raise ValueError("Global name is required for 'kill_global' operation")
                    return self._iris.kill(global_name)

                elif operation == "query":
                    if not query:
                        raise ValueError("Query string is required for 'query' operation")
                    cursor = self._conn.cursor()
                    cursor.execute(query)
                    return cursor.fetchall()

                elif operation == "install_path":
                    return self._iris.classMethodString('%SYSTEM.Util', 'InstallDirectory')

                elif operation == "get_namespace":
                    if global_name:
                        namespace = global_name
                    return self.getStudioApiResponse(baseurl, '/api/atelier/v1/' + urllib.parse.quote(namespace), self._username, self._password)

                elif operation == "list_metrics":
                    return self.getStudioApiResponse(baseurl, '/api/monitor/metrics', self._username, self._password)

                elif operation == "list_jobs":
                    return self.getStudioApiResponse(baseurl, '/api/atelier/v1/' + urllib.parse.quote(namespace) + '/jobs', self._username, self._password)

                elif operation == "list_files":
                    if filename:
                        print("listing files " + filename + " in namespace " + namespace)
                        return self.getStudioApiResponse(baseurl, '/api/atelier/v1/' + urllib.parse.quote(namespace) + '/docnames/CLS?filter=' + filename, self._username, self._password)
                    else:
                        return self.getStudioApiResponse(baseurl, '/api/atelier/v1/' + urllib.parse.quote(namespace) + '/docnames/CLS', self._username, self._password)

                elif operation == "server_info":
                    return self.getStudioApiResponse(baseurl, '/api/atelier/', self._username, self._password)

                elif operation == "list_csp":
                    return self.getStudioApiResponse(baseurl, '/api/atelier/v1/' + urllib.parse.quote(namespace) + '/cspapps', self._username, self._password)

                elif operation == "describe":
                    if not class_name:
                        raise ValueError("Class name is required for 'describe' operation")
                    return self._iris.classMethodVoid(class_name, '%ClassName')

                else:
                    raise ValueError(f"Unsupported operation: {operation}")

            except Exception as e:
                return f"Error performing Intersystems IRIS operation: {str(e)}"

        async def _arun(
            self,
            operation: str,
            global_name: Optional[str] = None,
            global_value: Optional[str] = None,
            query: Optional[str] = None,
            filename: Optional[str] = None,
            class_name: Optional[str] = None,
            namespace: Optional[str] = "%SYS",
            record_data: Optional[Dict[str, Any]] = None,
            record_id: Optional[str] = None,
            run_manager: Optional[CallbackManagerForToolRun] = None,
        ) -> Union[str, Dict[str, Any], List[Dict[str, Any]]]:
            """Async implementation of Intersystems IRIS operations."""
            # Intersystems IRIS doesn't have native async support,
            # so we just call the sync version
            return self._run(
                operation, global_name, global_value, query, filename, class_name, namespace, record_data, record_id, run_manager
            )

        def invoke(
            self,
            input: Union[str, Dict[str, Any], ToolCall],
            config: Optional[RunnableConfig] = None,
            **kwargs: Any,
        ) -> Any:
            """Run the tool."""
            if input is None:
                raise ValueError("Unsupported input type: <class 'NoneType'>")
            if isinstance(input, str):
                raise ValueError("Input must be a dictionary")
            if hasattr(input, "args") and hasattr(input, "id") and hasattr(input, "name"):
                input_dict = cast(Dict[str, Any], input.args)
            else:
                input_dict = cast(Dict[str, Any], input)
            if not isinstance(input_dict, dict):
                raise ValueError(f"Unsupported input type: {type(input)}")
            if "operation" not in input_dict:
                raise ValueError("Input must be a dictionary with an 'operation' key")
            return self._run(**input_dict)

        async def ainvoke(
            self,
            input: Union[str, Dict[str, Any], ToolCall],
            config: Optional[RunnableConfig] = None,
            **kwargs: Any,
        ) -> Any:
            """Run the tool asynchronously."""
            if input is None:
                raise ValueError("Unsupported input type: <class 'NoneType'>")
            if isinstance(input, str):
                raise ValueError("Input must be a dictionary")
            if hasattr(input, "args") and hasattr(input, "id") and hasattr(input, "name"):
                input_dict = cast(Dict[str, Any], input.args)
            else:
                input_dict = cast(Dict[str, Any], input)
            if not isinstance(input_dict, dict):
                raise ValueError(f"Unsupported input type: {type(input)}")
            if "operation" not in input_dict:
                raise ValueError("Input must be a dictionary with an 'operation' key")
            return await self._arun(**input_dict)

        @staticmethod
        def getStudioApiResponse(baseurl, path, username, password):
            credentials = (username, password)
            response = requests.get(baseurl + path, auth=credentials)
            status = response.status_code
            if status == 200:
                if "monitor/metrics" not in path:
                    return yaml.dump(response.json())
                else:
                    return response.text
            else:
                return None

About Streamlit:

Streamlit is used to develop frontends in Python. This application has a Streamlit chat application that interacts with Ollama, Langchain and IRIS to get relevant responses:

    from langchain_iris_tool.tools import InterSystemsIRISTool
    from langchain_ollama import ChatOllama
    import streamlit as st

    #
    # LANGCHAIN OLLAMA WRAPPER
    #
    username = "_system"
    password = "SYS"
    hostname = "iris"
    port = 1972
    webport = 52773
    namespace = "USER"

    #
    # STREAMLIT APP
    #
    st.title("Langchain IRIS Tool Agent")

    with st.popover("Settings"):
        username = st.text_input("Username:", username)
        password = st.text_input("Password:", password)
        hostname = st.text_input("Hostname:", hostname)
        port = int(st.text_input("Port:", port))
        webport = int(st.text_input("Web port:", webport))
        namespace = st.text_input("Namespace:", namespace)

    # User query input
    query = st.text_input(label="Enter your query")

    # Submit button
    if st.button(label="Ask IRIS", type="primary"):
        tool = InterSystemsIRISTool(
            username=username, password=password, hostname=hostname,
            port=port, webport=webport, namespace=namespace)

        llm = ChatOllama(
            base_url="http://ollama:11434",
            model="mistral",
            temperature=0,
        ).bind_tools([tool])

        with st.container(border=True):
            with st.spinner(text="Generating response"):
                # Get response from llm
                response = llm.invoke(query)
                st.code(tool.invoke(response.tool_calls[0]["args"]), language="yaml")

Enjoy the app!